As digital applications grow in complexity, containerization has become a key approach to efficient product development and deployment, and Kubernetes is one of the leading container orchestration platforms. According to the 2026 Cloud Native Computing Foundation survey, 94% of organizations have adopted Kubernetes: 82% already use it in production, and 12% are actively piloting or evaluating it in test environments.

So, what makes this platform stand out, and what are the main Kubernetes use cases? We outline common applications below to show how Kubernetes helps teams streamline operations, scale workloads, and accelerate deployment cycles.

Top 20 Kubernetes applications and use cases

Kubernetes (K8s) supports continuous improvement and delivery by giving organizations an infrastructure foundation that enables self-healing, scaling, quick rollbacks, and more reliable release strategies. Furthermore, it can be integrated into various environments to automate daily operations and make workloads infrastructure-agnostic.

We have grouped K8s use cases into five categories: application architecture, software development lifecycle automation, infrastructure and scaling, advanced networking and orchestration, and specialized workflows.

Application architecture

1. Microservices management

Microservices architecture breaks applications into small, independently deployable services, and Kubernetes orchestrates and manages these workloads. It automates deployment, simplifies service discovery and recovery, and supports rolling updates without downtime, so engineering teams gain consistency when adding or updating services. Kubernetes also handles inter-service communication through built-in load balancing and lets individual components scale independently rather than scaling the entire application.

In practice, this approach is especially effective for data-intensive and distributed platforms. N-iX applied Kubernetes to help a global logistics company modernize its microservices ecosystem and scale core services that handled data ingestion, processing, and analytics. By standardizing deployment and runtime behavior, Kubernetes helped our engineers reduce operational complexity, improve service reliability, and scale microservices independently. Combined with multi-tenant, fully isolated deployments and an Istio-based service mesh, this gave our client faster iteration and stronger alignment between engineering teams and business goals.

Read the full use case here: Driving logistics efficiency with industrial Machine Learning
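
Rolling updates, mentioned above, are bounded by a Deployment's `maxSurge` and `maxUnavailable` settings, both of which default to 25%. The minimal sketch below shows how those percentages translate into pod counts: Kubernetes rounds `maxSurge` up and `maxUnavailable` down. The step simulation is a simplification that assumes new pods become ready instantly, and the function names are illustrative, not part of any Kubernetes API.

```python
def resolve_bounds(replicas: int, max_surge_pct: int = 25,
                   max_unavailable_pct: int = 25) -> tuple[int, int]:
    """Return (max total pods, min available pods) during a rolling update."""
    surge = -(-replicas * max_surge_pct // 100)          # ceiling division: surge rounds up
    unavailable = replicas * max_unavailable_pct // 100  # floor division: unavailable rounds down
    return replicas + surge, replicas - unavailable


def rolling_update_steps(replicas: int, max_surge_pct: int = 25,
                         max_unavailable_pct: int = 25) -> list[tuple[int, int]]:
    """Simulate (old pods, new pods) after each step; assumes new pods are Ready instantly."""
    max_total, min_available = resolve_bounds(replicas, max_surge_pct, max_unavailable_pct)
    if max_total == replicas and min_available == replicas:
        raise ValueError("maxSurge and maxUnavailable cannot both be zero")
    old, new = replicas, 0
    steps = []
    while new < replicas or old > 0:
        # Scale up the new version as far as the surge budget allows.
        new += min(replicas - new, max_total - (old + new))
        # Scale down old pods while keeping at least min_available pods running.
        old -= min(old, old + new - min_available)
        steps.append((old, new))
    return steps
```

For example, `rolling_update_steps(4)` moves from four old pods to four new ones in three steps, never running more than five pods or fewer than three.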

2. Legacy modernization

Many organizations run monolithic applications that are hard to scale or change. Legacy modernization is one of Kubernetes’ main use cases because the platform supports a phased approach. Instead of rebuilding everything at once, teams can containerize components of existing applications and migrate them to Kubernetes incrementally. Alternatively, they can containerize an entire monolith and run it on K8s, which provides a stable environment, simplifies operations, and allows legacy infrastructure to be retired gradually.

This phased approach reduces risk: legacy and modern components can coexist, share infrastructure, and evolve separately. Over time, a modern platform emerges, with new features deployed through modern practices while core business logic remains stable. Gradual decomposition also helps optimize costs and minimize operational disruption during migration.

3. Cloud-native application development

Cloud-native applications are designed to leverage a distributed and dynamic infrastructure. Kubernetes supports them with self-healing (automatic restart of failing containers), horizontal scaling, and declarative updates. These capabilities align closely with cloud-native design principles, which makes cloud-native development one of the most prevalent Kubernetes use cases.

Additionally, many managed cloud services for containers, applications, and serverless workloads run on K8s, using extensions such as Knative or KEDA (Kubernetes Event-driven Autoscaling). Although users may not interact with Kubernetes directly, it underpins the performance and reliability of many cloud-native platforms.

4. Multi-tenant SaaS orchestration

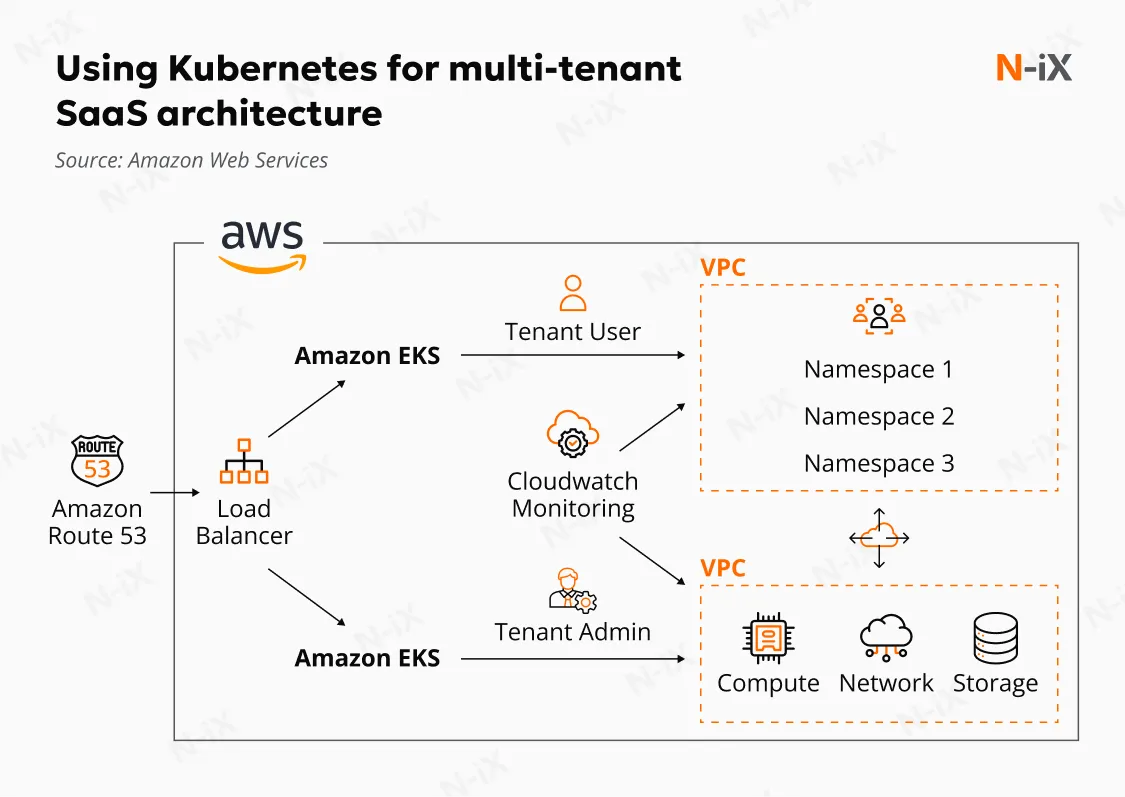

Software-as-a-Service (SaaS) platforms rely on application-level multi-tenancy. A single software instance serves multiple customers, while application logic and database controls ensure data isolation. Many SaaS providers also depend on infrastructure-level multi-tenancy to operate efficiently. Kubernetes enables this by allowing multiple teams or services to share a single cluster while remaining logically isolated.

At the infrastructure level, Kubernetes uses namespaces and resource quotas to separate workloads and control resource usage. Stronger isolation is typically added through role-based access control (RBAC), network policies, and a service mesh that enforces security protocols between services, strengthening governance, security boundaries, and cost efficiency.

N-iX applied this approach while building a scalable SaaS platform for a global automotive technology leader that aimed to unify and standardize its internal digital tools. Kubernetes was used to orchestrate containerized platform services, simplifying deployment and improving consistency across teams. As a result, our client reduced manual setup effort, improved component reuse, and laid the foundation for cost-efficient scaling of SaaS applications.

Read the full case here: Helping automotive leader scale their digital transformation journey
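
To illustrate how resource quotas keep each tenant within its share of a cluster, here is a minimal sketch of the admission check a ResourceQuota performs. It is a simplified model, not the Kubernetes implementation: the `TenantNamespace` class and `admit_pod` function are hypothetical, CPU is expressed in millicores and memory in MiB as plain integers, and real quotas also track limits, storage, and other object counts.

```python
from dataclasses import dataclass, field


@dataclass
class TenantNamespace:
    """Hypothetical model of a namespace with a ResourceQuota attached."""
    name: str
    hard: dict[str, int]  # quota ceilings, e.g. {"requests.cpu": 2000} in millicores
    used: dict[str, int] = field(default_factory=dict)


def admit_pod(ns: TenantNamespace, requests: dict[str, int]) -> bool:
    """Admit the pod only if every quota-tracked resource stays within its hard limit."""
    # Each pod also counts against a "pods" object-count quota.
    demand = {**requests, "pods": 1}
    for resource, amount in demand.items():
        limit = ns.hard.get(resource)
        if limit is not None and ns.used.get(resource, 0) + amount > limit:
            return False  # reject: admitting the pod would exceed the namespace quota
    # Only record usage once the whole pod has been admitted.
    for resource, amount in demand.items():
        ns.used[resource] = ns.used.get(resource, 0) + amount
    return True
```

Because every tenant's namespace carries its own ceilings, one team exhausting its quota cannot consume capacity reserved for another.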

Software development lifecycle automation

5. CI/CD pipeline automation and execution

Continuous integration and continuous delivery are essential for fast, reliable releases, but traditional pipelines are often slowed by environment drift and manual steps. Kubernetes addresses this by serving as a unified execution and orchestration layer for CI/CD workflows. Pipeline jobs, temporary environments, and production workloads can all run on the same platform, using container images identical to production.

To modernize our client’s CI/CD pipelines, N-iX DevOps engineers implemented Kubernetes as the core execution layer. This enabled consistent build and deployment environments across SDLC stages. This shift reduced manual deployment steps, improved release velocity, and strengthened automation across the delivery process. As a result, our client achieved faster developer feedback loops, reduced manual errors, and gained more precise operational control over software delivery.

Read the full case here: Streamlining operations and optimizing costs in energy

6. Developer environments standardization

Inconsistent local environments can cause bugs that only surface in production. Kubernetes helps standardize environments across development, staging, and production, so engineers work against predictable configurations. This uniformity accelerates onboarding, reduces debugging time, and lets teams apply security and vulnerability updates in staging before they reach production. Kubernetes also supports remote team collaboration, where everyone runs similar infrastructure locally or in shared development clusters.

7. DevSecOps automation

Kubernetes enables DevSecOps by automating governance and security controls across the software lifecycle, which makes security automation one of the most common reasons for Kubernetes adoption. By defining the desired state of clusters declaratively, teams can apply standardized templates, policies, and stacks consistently across environments. This model aligns naturally with Infrastructure as Code (IaC) and GitOps practices, enabling changes to be reviewed, versioned, and automatically enforced.

Using Kubernetes as the orchestration backbone, DevOps teams can embed security and compliance controls directly into delivery workflows. Automated builds, tests, and deployments run against predictable configurations, while standardized policies help maintain consistency across teams. This approach strengthens automation, improves operational discipline, and enables security to scale alongside delivery speed rather than slowing it down.
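
As an illustration of the kind of policy such workflows enforce, the sketch below checks a pod manifest, represented as a Python dict that mirrors real Kubernetes field names such as `securityContext.privileged` and `resources.limits`, against three example rules. The rules and the `check_pod` function are hypothetical; in production, teams typically express rules like these in a policy engine such as OPA Gatekeeper or Kyverno rather than in custom code.

```python
def check_pod(pod: dict) -> list[str]:
    """Return human-readable violations of three example (hypothetical) policies."""
    violations = []
    for container in pod.get("spec", {}).get("containers", []):
        name = container.get("name", "<unnamed>")
        # Rule 1: no privileged containers.
        if container.get("securityContext", {}).get("privileged", False):
            violations.append(f"{name}: privileged containers are not allowed")
        # Rule 2: images must be pinned. An image without a tag defaults to "latest".
        # Only the last path segment is inspected so a registry port is not mistaken for a tag.
        image_ref = container.get("image", "").rsplit("/", 1)[-1]
        pinned_by_digest = "@" in image_ref
        if not pinned_by_digest and (":" not in image_ref or image_ref.endswith(":latest")):
            violations.append(f"{name}: image must be pinned to a tag other than 'latest'")
        # Rule 3: CPU and memory limits are required.
        limits = container.get("resources", {}).get("limits", {})
        if "cpu" not in limits or "memory" not in limits:
            violations.append(f"{name}: cpu and memory limits are required")
    return violations
```

Running such checks in the delivery pipeline, and again at admission time in the cluster, is what lets security controls scale with delivery speed.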

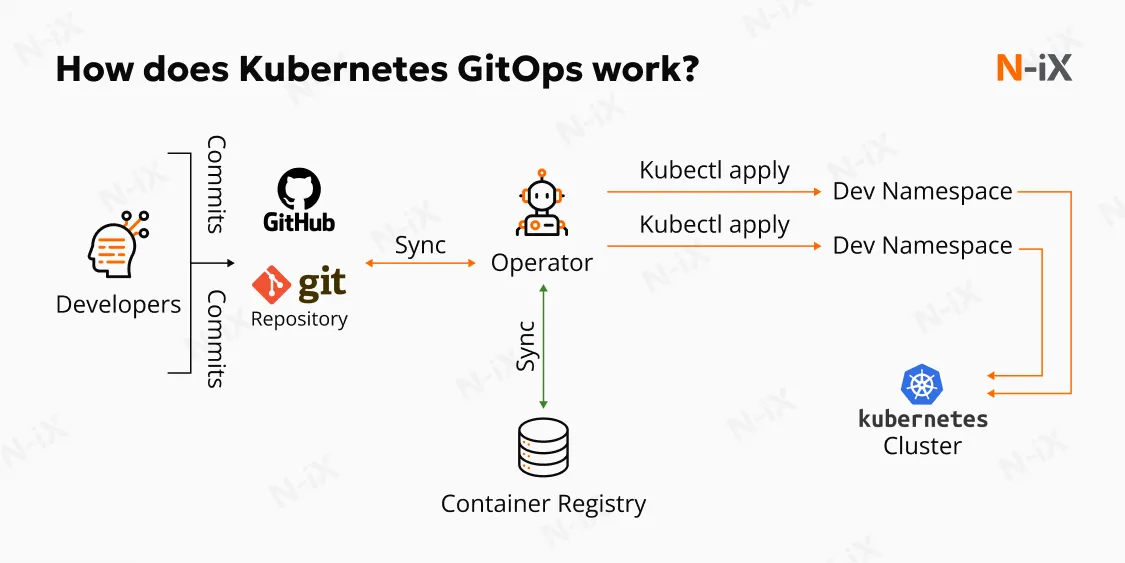

8. GitOps workflows implementation

GitOps uses Git as the single source of truth for infrastructure and application configurations. Deployment changes are committed to declarative files, such as deployment manifests, and a GitOps controller running in the cluster (for example, Argo CD or Flux) continuously reconciles the cluster state with what is defined in the repository. This approach simplifies delivery by separating continuous integration from deployment and shifting release responsibility to development teams. As a result, organizations gain clearer ownership, a complete change history, easier rollbacks, and consistent policy enforcement driven by the repository.
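
A GitOps controller's core job is to compare the manifests in Git with the live cluster and work out what to change. The minimal sketch below models that comparison, assuming resources are keyed by `(kind, namespace, name)` and compared as plain dicts; real controllers also ignore server-populated fields and apply changes through the Kubernetes API. `plan_sync` and `apply_plan` are hypothetical names.

```python
ResourceKey = tuple[str, str, str]  # (kind, namespace, name)


def plan_sync(desired: dict[ResourceKey, dict], live: dict[ResourceKey, dict],
              prune: bool = True) -> dict[str, list[ResourceKey]]:
    """Diff the repository state (desired) against the cluster state (live)."""
    create = sorted(k for k in desired if k not in live)
    update = sorted(k for k in desired if k in live and desired[k] != live[k])
    # Pruning deletes resources that were removed from Git; controllers usually make it optional.
    delete = sorted(k for k in live if k not in desired) if prune else []
    return {"create": create, "update": update, "delete": delete}


def apply_plan(desired: dict[ResourceKey, dict], live: dict[ResourceKey, dict],
               plan: dict[str, list[ResourceKey]]) -> dict[ResourceKey, dict]:
    """Return the new live state after applying the plan (no real API calls)."""
    new_live = dict(live)
    for key in plan["create"] + plan["update"]:
        new_live[key] = desired[key]
    for key in plan["delete"]:
        del new_live[key]
    return new_live
```

Once the plan is applied, a second diff comes back empty, which is the "in sync" state that GitOps tools report.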

Infrastructure and scaling

9. Autoscaling

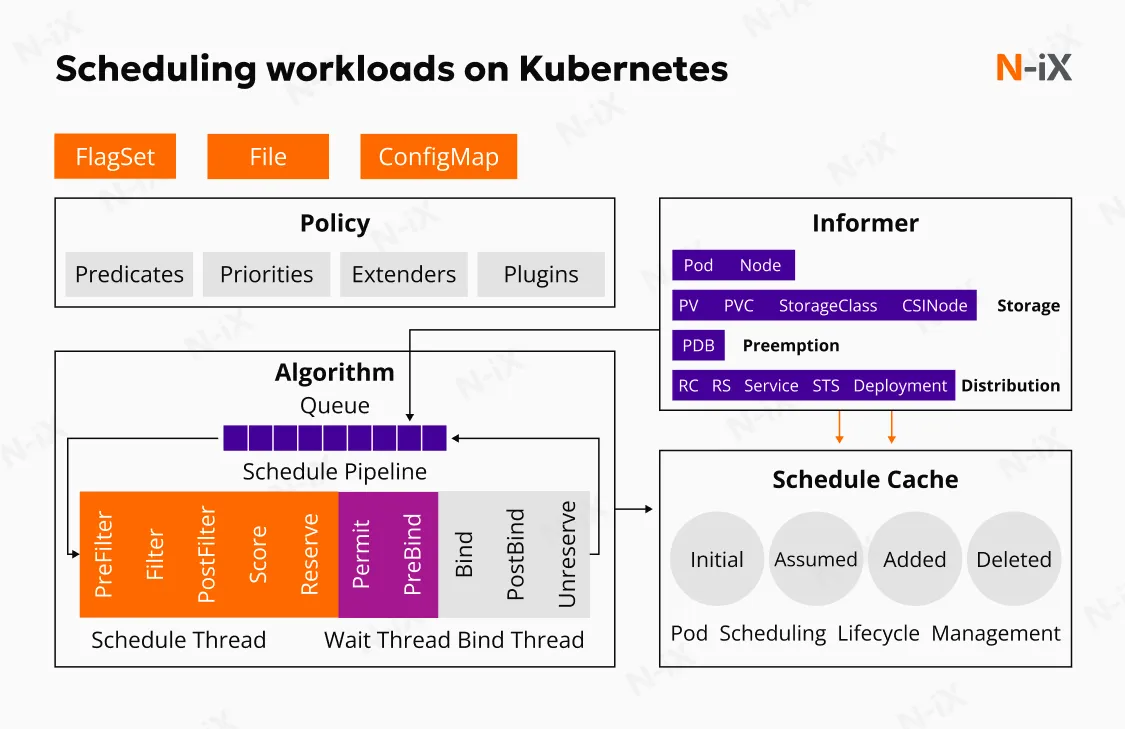

Kubernetes supports multiple autoscaling dimensions: horizontal pod scaling, vertical resource scaling, and cluster autoscaling. Workloads can dynamically adjust based on metrics such as CPU utilization, memory usage, or custom business indicators. Cluster autoscaling, in turn, adds or removes nodes as demand changes, but a new node can take up to five minutes to become ready to handle traffic, so capacity planning needs to account for this delay.

Autoscaling is one of the most important Kubernetes use cases as it enables a true pay-as-you-go approach. During off-peak hours, your cluster runs minimal resources. During periods of high demand, Kubernetes automatically increases capacity. This flexibility is essential for applications with unpredictable traffic.
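
For horizontal pod autoscaling, the Kubernetes documentation describes the core rule as `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`, with scaling skipped when the ratio is within a tolerance of 1.0 (0.1 by default). The sketch below applies that formula and clamps the result to the configured minimum and maximum. It omits details the real controller handles, such as averaging metrics across pods, treating unready pods specially, and stabilization windows.

```python
import math


def hpa_desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                         min_replicas: int = 1, max_replicas: int = 10,
                         tolerance: float = 0.1) -> int:
    """Simplified HorizontalPodAutoscaler calculation."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas  # close enough to target: avoid flapping
    else:
        desired = math.ceil(current_replicas * ratio)
    # Never go below minReplicas or above maxReplicas.
    return max(min_replicas, min(max_replicas, desired))
```

For example, four pods averaging 90% CPU against a 60% target scale to six, while 63% stays at four because it falls within the tolerance.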

10. High availability (HA) and disaster recovery (DR) maintenance

High availability and recovery requirements are defined by clear service-level and resilience objectives. Kubernetes helps organizations meet these objectives by maintaining desired replica counts and automatically restarting failing components. Organizations can also spread workloads across multiple zones, regions, or clusters so that a single failure does not take a service down.
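
Spreading replicas across zones is usually configured with topology spread constraints. The sketch below models the `maxSkew` check the scheduler applies: a zone is eligible only if placing one more matching pod there keeps its count within `maxSkew` of the least-populated zone. Zone names are placeholders, and always choosing the emptiest eligible zone is a simplification of the scheduler's real node scoring.

```python
from typing import Optional


def pick_zone(pods_per_zone: dict[str, int], max_skew: int = 1) -> Optional[str]:
    """Return a zone that satisfies the skew constraint, or None if none does."""
    global_min = min(pods_per_zone.values())
    # Skew = matching pods in the zone after placement minus the global minimum.
    eligible = [z for z, count in pods_per_zone.items() if count + 1 - global_min <= max_skew]
    if not eligible:
        return None  # with whenUnsatisfiable: DoNotSchedule the pod would stay Pending
    # Simplification: prefer the emptiest zone, breaking ties by name for determinism.
    return min(eligible, key=lambda z: (pods_per_zone[z], z))


def spread(zones: list[str], replicas: int, max_skew: int = 1) -> dict[str, int]:
    """Place replicas one by one and return the resulting count per zone."""
    counts = {zone: 0 for zone in zones}
    for _ in range(replicas):
        zone = pick_zone(counts, max_skew)
        if zone is None:
            raise RuntimeError("no zone satisfies the topology spread constraint")
        counts[zone] += 1
    return counts
```

With three zones and five replicas, the result is a 2/2/1 split, so losing any single zone still leaves at least three replicas running.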

In disaster recovery scenarios, engineering teams replicate clusters and use backup tools to quickly restore the desired cluster state. Kubernetes supports volume snapshots through the Container Storage Interface (CSI), and backup tools such as Velero add scheduled backups and integration with external storage to meet strict recovery objectives. The main complexity lies in application data: databases and storage must be replicated and made available across regions.

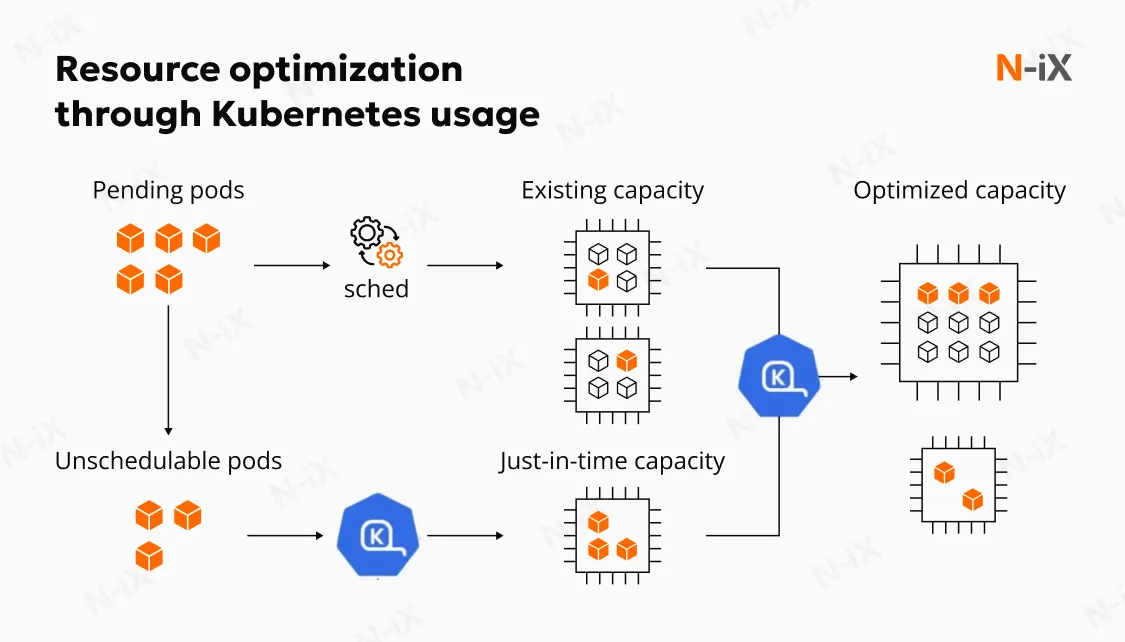

11. Resource optimization

Effective resource utilization helps reduce costs and improve performance. Kubernetes lets you define explicit resource requests and limits for each container: requests tell the scheduler how much CPU and memory the container needs, so it is placed only on a node with enough unreserved capacity, while limits cap what the container can consume at runtime. This helps workloads receive the resources they need without overconsuming or leaving node capacity underutilized.

Our infrastructure engineers also highlight that these controls help mitigate the "noisy neighbor" problem, where one workload degrades the performance of others on the same node. Over time, engineering teams can adjust resource allocations based on real usage data, minimizing waste and improving predictability.
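
The minimal sketch below shows two consequences of requests and limits: the scheduler's fit check (a pod fits only if its summed requests stay within the node's allocatable capacity minus what is already requested) and the Quality of Service class Kubernetes assigns, which influences which pods are evicted first under node pressure. Expressing CPU in millicores and memory in MiB as integers is an assumption for brevity, and init containers and pod overhead are ignored.

```python
from dataclasses import dataclass, field


@dataclass
class Container:
    # Assumed units: CPU in millicores, memory in MiB.
    requests: dict[str, int] = field(default_factory=dict)
    limits: dict[str, int] = field(default_factory=dict)


def effective_request(c: Container, resource: str) -> int:
    # When only a limit is set, Kubernetes defaults the request to the limit.
    return c.requests.get(resource, c.limits.get(resource, 0))


def qos_class(containers: list[Container]) -> str:
    if all(not c.requests and not c.limits for c in containers):
        return "BestEffort"
    for c in containers:
        for resource in ("cpu", "memory"):
            # Guaranteed requires requests == limits for CPU and memory in every container.
            if resource not in c.limits or effective_request(c, resource) != c.limits[resource]:
                return "Burstable"
    return "Guaranteed"


def fits_on_node(containers: list[Container], allocatable: dict[str, int],
                 already_requested: dict[str, int]) -> bool:
    """Scheduler-style fit check based on requests, not actual usage."""
    for resource in ("cpu", "memory"):
        needed = sum(effective_request(c, resource) for c in containers)
        if already_requested.get(resource, 0) + needed > allocatable.get(resource, 0):
            return False
    return True
```

Because scheduling is based on requests rather than live usage, setting realistic requests matters as much as setting limits.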

12. Multi- and hybrid cloud environments management

One of the most common Kubernetes use cases is standardizing operations across different environments. Whether you run workloads in the cloud or on-premises, Kubernetes provides the same operational model, which supports hybrid and multi-cloud strategies. Engineering teams can keep environments cloud-agnostic and optimize for cost or compliance: sensitive workloads can run on-premises while less critical workloads reside in public clouds.

Our cloud engineers used Kubernetes in an on-premises project for a leading telecom company, supporting its fintech integration services. The client required strict data controls and low latency and also aimed to modernize its on-premises platform. Using K8s, our engineers provided a consistent orchestration layer across the client’s internal infrastructure, enabling automated deployment, scaling, and monitoring of containerized workloads on-premises. As a result, our client improved operational reliability, reduced manual maintenance, and gained greater control over compliance and data residency, while maintaining cloud-like agility within its own data centers.

Read the full case here: Gaining a competitive edge in telecom with fintech integration

Advanced networking and orchestration

13. Edge computing and IoT management

Edge computing requires running workloads closer to users or data sources to reduce latency and cope with intermittent connectivity. Kubernetes supports this by enabling consistent workload management across distributed edge locations and regions. With centralized control planes and fleet management capabilities, organizations can operate edge and regional clusters as part of a unified multi-region strategy.

IoT also benefits from this pattern. Instead of managing thousands of devices individually, teams use K8s to orchestrate the workloads that run on edge nodes and aggregate data for centralized processing. Platforms such as Anthos extend this model by providing centralized governance, policy enforcement, and lifecycle management across on-premises, edge, and cloud regions. This approach simplifies updates and monitoring across dispersed infrastructure.

14. Network automation and software-defined connectivity

Modern networking models treat network policies and traffic behavior as code. Kubernetes integrates with service meshes (such as Istio) and Container Network Interface (CNI) plugins to automate policies, observability, and routing.

One of the prominent Kubernetes use cases here is defining security policies centrally and enforcing them uniformly. Combined with a service mesh, this supports traffic shaping, rate limiting, and detailed observability, which are essential for distributed systems spanning regions or multiple cloud providers.
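
Kubernetes NetworkPolicies show policy-as-code at the network layer: once any policy selects a pod, that pod rejects ingress traffic except what a selecting policy explicitly allows, and policies are additive. The sketch below models that rule with simplified dicts, where each policy has a `podSelector` and a `from` list of allowed source selectors (an empty list admits nothing). This structure is hypothetical rather than the real NetworkPolicy schema, and it ignores namespaces, ports, and IP blocks. Actual enforcement also requires a CNI plugin that supports NetworkPolicy.

```python
def selector_matches(selector: dict[str, str], labels: dict[str, str]) -> bool:
    # An empty selector matches every pod, like `podSelector: {}` in Kubernetes.
    return all(labels.get(key) == value for key, value in selector.items())


def ingress_allowed(policies: list[dict], src_labels: dict[str, str],
                    dst_labels: dict[str, str]) -> bool:
    """Decide whether traffic from src to dst is allowed under simplified policy rules."""
    selecting = [p for p in policies if selector_matches(p["podSelector"], dst_labels)]
    if not selecting:
        return True  # no policy selects the destination, so it is not isolated
    # Policies are additive: any selecting policy that admits the source allows the traffic.
    return any(selector_matches(peer, src_labels) for p in selecting for peer in p["from"])
```

A common pattern is a namespace-wide default-deny policy plus narrow allow rules, such as letting only frontend pods reach the backend.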

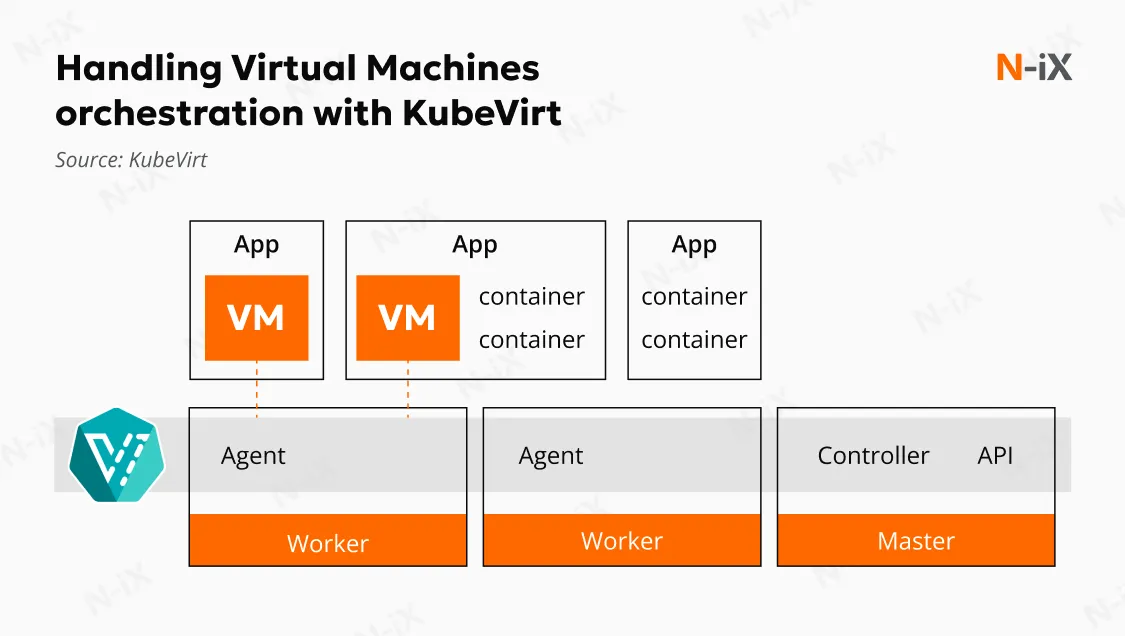

15. Orchestrating virtual machines with KubeVirt

Not all workloads can be moved to containers. With KubeVirt, Kubernetes can orchestrate entire virtual machines alongside containers, allowing teams to run existing VM-based applications unchanged on Kubernetes. This enables organizations to move current workloads into a K8s-managed environment without refactoring, using a single control plane. As a result, teams can simplify hybrid environments where legacy virtual workloads coexist with modern services.

16. Serverless and PaaS foundation development

Serverless platforms and Platform as a Service (PaaS) offerings often run on Kubernetes. The platform handles scaling, isolation, and event routing while exposing simple interfaces to developers. This setup balances ease of use with operational control. Our infrastructure engineers recommend this approach for complex platform products that require strict governance and performance.

Specialized workflows

17. AI and machine learning workloads orchestration

AI and machine learning workloads require training on large datasets and serving models at scale. One of the most innovative use cases of Kubernetes is supporting the full AI/ML lifecycle: development, testing, deployment, and decommissioning. It helps efficiently manage GPU resources, schedule jobs, and ensure consistency across clusters.

Frameworks such as Kubeflow and custom operators enable engineers to build reproducible training pipelines. Autoscaling and monitoring integration also helps manage AI/ML workloads reliably.

18. Big data processing

Big data platforms require significant resources, including distributed computing and elastic scaling. Kubernetes makes large-scale data processing easier by allowing teams to schedule batch and streaming jobs and rely on automatic scaling. Tools such as Apache Spark can use Kubernetes natively as their cluster manager, which reduces the operational burden compared with traditional Hadoop or standalone clusters. Deployment is simpler, resources are shared efficiently, and cloud storage integrates easily, making big data processing one of the most notable Kubernetes use cases.

19. High-performance computing (HPC) orchestration

HPC workloads demand high throughput and often specialized hardware. Kubernetes extends to HPC with custom scheduling policies and device plugins. This enables engineering and research teams to run compute-intensive jobs using the same orchestration layer as other workloads. K8s adoption also reduces context switching and enables unified resource management across scales.

20. Batch jobs and scheduled workflows management

Batch tasks such as nightly data processing, backups, or report generation fit naturally into Kubernetes Jobs and CronJobs. Scheduling these workloads during off-peak hours lets them use the minimum set of nodes that clusters already keep running for services.

By running batch workloads declaratively on Kubernetes, teams can schedule, monitor, retry, and scale jobs consistently using the same observability stack as the rest of the platform. This approach reduces reliance on standalone schedulers and aligns with CO₂ efficiency blueprints by improving infrastructure utilization and lowering unnecessary energy consumption.
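
Retries are a large part of what makes Jobs reliable. Per the Kubernetes documentation, a Job recreates failed pods with an exponential back-off delay (10s, 20s, 40s, and so on, capped at six minutes) and marks the Job as failed after `backoffLimit` retries, which defaults to 6. The sketch below simulates that policy without actually sleeping; `run_with_backoff` and the `task` callable are hypothetical, and the real controller's failure accounting is more nuanced.

```python
from typing import Callable


def run_with_backoff(task: Callable[[int], bool], backoff_limit: int = 6,
                     base_delay_s: int = 10, max_delay_s: int = 360) -> tuple[bool, int]:
    """Simulate Job-style retries; returns (succeeded, total seconds spent waiting)."""
    waited = 0
    for attempt in range(backoff_limit + 1):  # initial attempt plus up to backoff_limit retries
        if task(attempt):
            return True, waited
        if attempt < backoff_limit:
            # Exponential back-off: 10s, 20s, 40s, ... capped at six minutes.
            waited += min(base_delay_s * 2 ** attempt, max_delay_s)
    return False, waited
```

A task that fails three times and then succeeds waits 70 seconds in total, while one that never succeeds gives up after roughly ten and a half minutes of back-off with the default settings.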

How can N-iX help you implement Kubernetes?

At N-iX, we help organizations design, build, and operate K8s for real business projects. Over the past five years, we have completed more than 150 DevOps projects, helping clients use Kubernetes to build efficient, optimized, and resilient infrastructure. The N-iX team includes 400+ cloud professionals and over 70 DevOps experts who implement Kubernetes projects of various sizes across industries.

Whether you are modernizing systems or scaling your platforms, N-iX helps you navigate the complexity and derive measurable impact from your Kubernetes initiatives.

FAQs

1. What is the basic use of Kubernetes?

The basic use case of Kubernetes is container orchestration: it manages the application lifecycle through automated reconciliation. K8s handles service discovery, automated rollouts and rollbacks, and horizontal autoscaling. Its primary value is "self-healing": Kubernetes continuously compares the actual state of the cluster with the desired state defined by the engineer and corrects any drift, for example by restarting failed containers or replacing lost pods.
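
The reconciliation idea can be sketched as a tiny, ReplicaSet-style controller. This is a simplified model, not Kubernetes code: pods are plain dicts with a `name` and `phase`, failed pods are deleted and replaced, and extras are removed until the count matches the desired number of replicas. The `reconcile` function and the `web-N` naming scheme are hypothetical. In Kubernetes, controllers run loops like this continuously against the API server.

```python
def _next_free_name(prefix: str, taken: set[str]) -> str:
    """Pick the lowest unused name such as web-3 (hypothetical naming scheme)."""
    n = 1
    while f"{prefix}-{n}" in taken:
        n += 1
    name = f"{prefix}-{n}"
    taken.add(name)
    return name


def reconcile(desired_replicas: int, pods: list[dict],
              prefix: str = "web") -> tuple[list[dict], list[tuple[str, str]]]:
    """One reconciliation pass: returns (resulting pods, actions taken)."""
    taken = {p["name"] for p in pods}
    # Self-healing: failed pods are removed...
    actions = [("delete", p["name"]) for p in pods if p["phase"] == "Failed"]
    healthy = [p for p in pods if p["phase"] != "Failed"]
    # ...and replaced until the desired count is reached.
    while len(healthy) < desired_replicas:
        name = _next_free_name(prefix, taken)
        healthy.append({"name": name, "phase": "Pending"})
        actions.append(("create", name))
    # Scale down if there are more healthy pods than desired.
    for extra in healthy[desired_replicas:]:
        actions.append(("delete", extra["name"]))
    return healthy[:desired_replicas], actions
```

Running the same pass again on a converged state produces no actions, which is why reconciliation can safely repeat forever.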

2. What are some common use cases for Kubernetes?

Common Kubernetes use cases include managing complex networking, enabling service-to-service communication at scale, standardizing deployment pipelines across environments, optimizing infrastructure spend for variable-load workloads, and supporting high-velocity CI/CD pipelines.

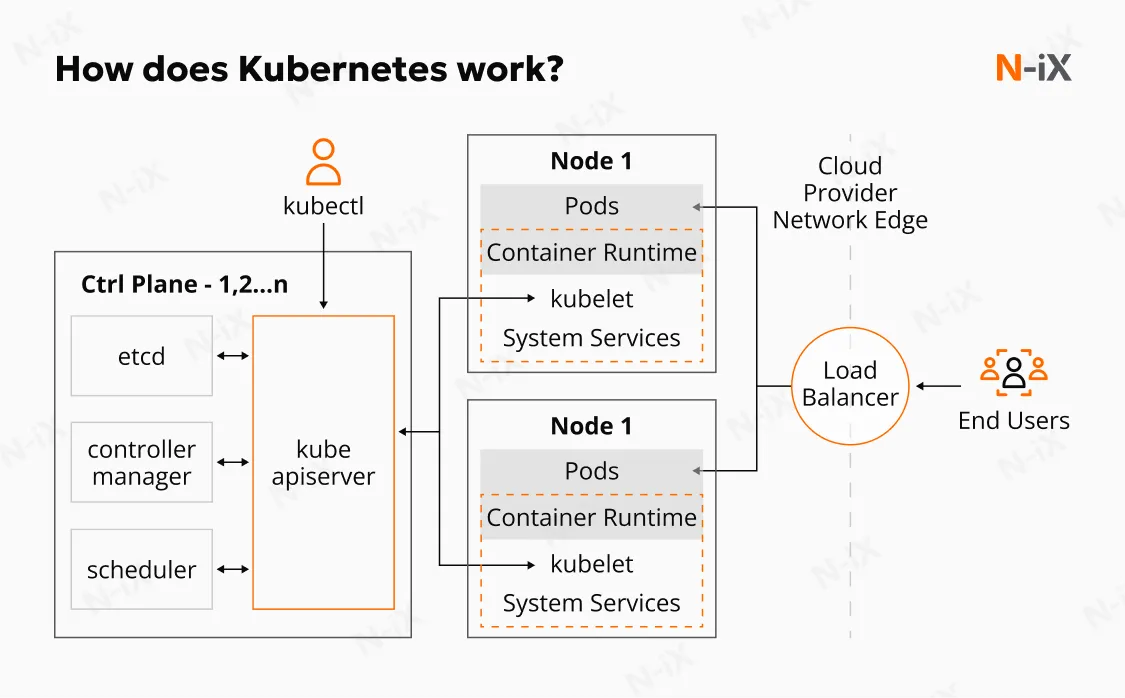

3. What are the two main components of Kubernetes?

The two main components of Kubernetes are the control plane and worker nodes. The control plane is the "brain" of the system. It includes key components such as the API server, scheduler, and etcd. The worker nodes are the “muscles” running containers. This is where the real work happens, as the kubelet and kube-proxy handle the lifecycle and networking of pods.

4. Is Kubernetes suitable for all types of applications?

No. Kubernetes is best suited for distributed, scalable, or frequently changing workloads. For monolithic applications with static resource needs or small-scale deployments, K8s introduces significant operational overhead. The complexity, learning curve, and management costs, often called the "K8s tax", can outweigh the benefits. In such cases, serverless solutions or managed PaaS may be more cost-effective alternatives.

5. Can Kubernetes run on-premises?

Yes, Kubernetes can run on-premises, in the cloud, or in hybrid environments. Because it is open source and vendor-neutral, Kubernetes provides the same API across on-premises data centers, edge locations, and public cloud providers. This flexibility supports sovereign cloud strategies, enabling organizations to keep sensitive data on-premises while using the same deployment logic for public-facing components in the cloud.
