Generative AI has moved from pilots to enterprise scale. According to Capgemini, 80% of enterprises have increased their investment in generative AI since 2023, while another 20% have maintained their spending [1]. The same research shows that 24% of organizations have now integrated generative AI into some or most functions, compared to just 6% a year earlier.

For many enterprises, generative AI adoption often means choosing a small (SLM) or large language model (LLM). The selection between SLM vs LLM directly affects costs, performance, governance, and integration with existing processes. A trusted partner with expertise in AI and ML development can help with this choice as well as reliable data pipelines, cost-efficient infrastructure, and strong compliance practices for smooth deployment.

To guide an informed decision, we will outline the key differences between LLM vs SLM, examine the situations where each delivers the most value, and present a practical framework to support model selection.

What is an SLM?

A small language model is an AI model built on the transformer architecture, which helps it to process text by looking at how words relate to each other and turning that into contextual meaning. Its parameter count (the number of learned weights that capture these relationships) usually ranges from tens of millions to a few billion. SLMs are lightweight models that run on modest hardware, deliver quick responses, and are often deployed in controlled environments for domain-specific tasks.

Examples: DistilBERT, Mistral-7B, Phi-3 Mini.

What is an LLM?

A large language model is also built on the transformer architecture, but with tens or even hundreds of billions of parameters. This scale allows it to handle broad knowledge, nuanced language, and complex reasoning. Running such models requires advanced infrastructure, often clusters of GPUs hosted in the cloud.

LLMs are general-purpose by design: they support open-ended tasks such as writing content, answering ambiguous queries, or analyzing diverse datasets. Updating them is resource-intensive, which is why they are most often accessed through APIs or managed platforms.

Examples: GPT-5, Claude, Gemini, Deepseek, LLaMA-70B.

Read more: LLMOps vs MLOps: Key differences, use cases, and success stories

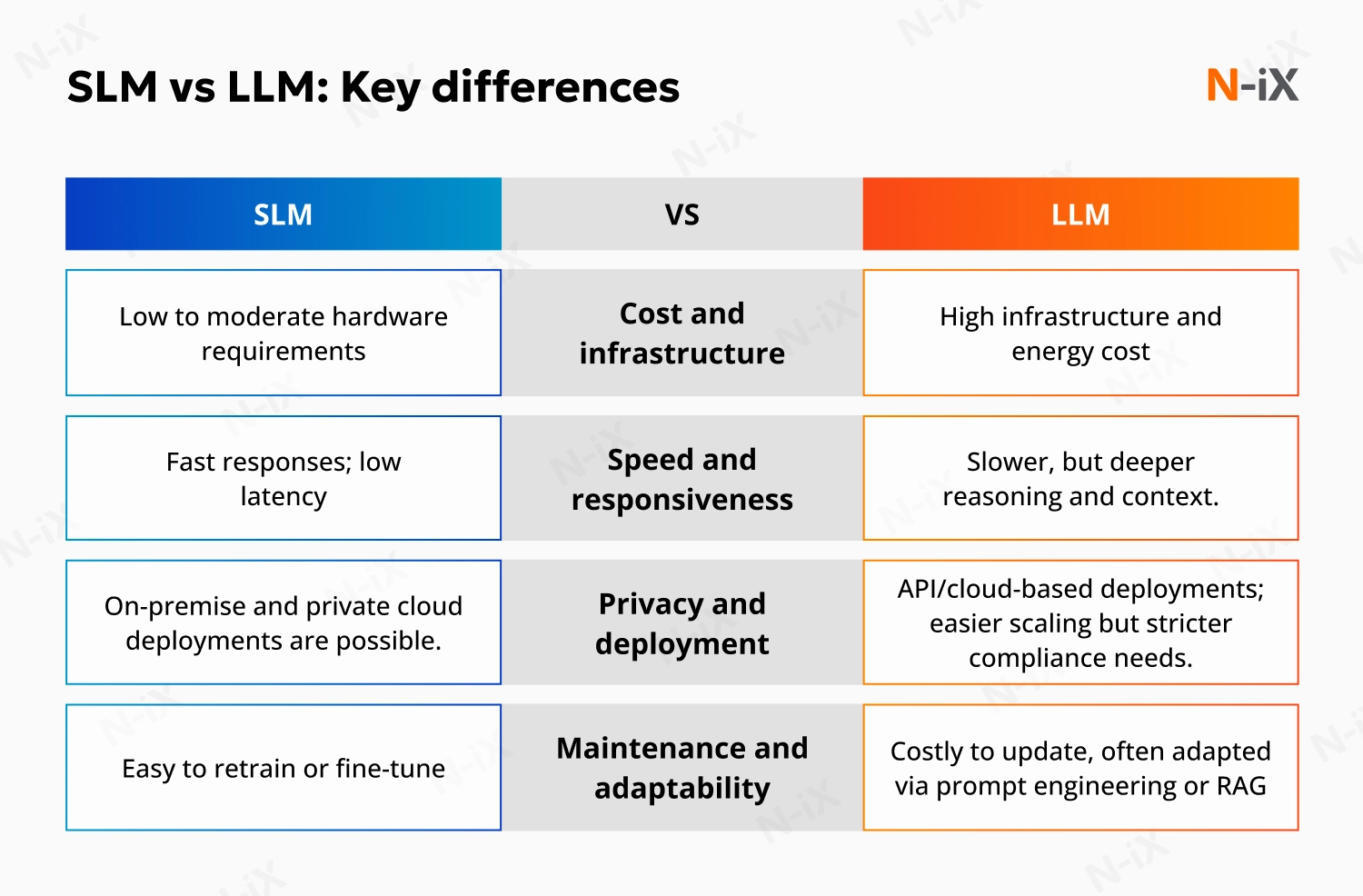

Key differences between SLM vs LLM

Enterprises choosing between SLM and LLM must understand how scale affects practical outcomes across several dimensions. Below are four dimensions where the differences matter most.

Cost and infrastructure

SLMs are lightweight and operate with modest computing requirements. They can run on standard servers, mid-tier GPUs, or even mobile hardware, making them cost-efficient to deploy and scale. For enterprises handling routine, high-volume tasks, this translates into predictable expenses and lower total cost of ownership. However, their smaller size limits reasoning ability, restricts coverage across domains, and makes them less reliable for handling ambiguous or complex queries compared to LLMs.

LLMs require advanced infrastructure, often clusters of high-performance GPUs hosted in the cloud. These requirements increase infrastructure and energy costs, which must be factored into long-term planning. For organizations pursuing enterprise-wide applications, the financial commitment is significantly higher.

Speed and responsiveness

SLMs deliver fast response times thanks to their smaller parameter count. They are well-suited for high-frequency, time-sensitive tasks such as answering standard customer queries or ranking search results.

LLMs provide deeper reasoning and richer contextual understanding, but their scale introduces latency. In real-time or high-volume environments, slower responses can impact user experience and service reliability.

Privacy and deployment options

SLMs can often be deployed on-premises or in private cloud environments, keeping sensitive data under enterprise control. These deployment options make them attractive for regulated sectors such as healthcare or finance, where data residency and compliance are critical.

LLMs are usually accessed through APIs or managed platforms, which is simpler regarding scaling across business units without building and maintaining complex infrastructure. The trade-off is that data may need to leave the organization. While compliance measures and vendor oversight can reduce risk, some sectors prohibit external transfers altogether, which limits where LLMs can be used.

Maintenance and adaptability

SLMs are easier and cheaper to retrain or fine-tune. Enterprises can update them frequently with new product data or regulatory requirements, ensuring outputs stay relevant.

LLMs are costly and complex to update. Because retraining is rarely feasible, enterprises often rely on techniques such as prompt engineering or retrieval-augmented generation to adapt behavior. As a result, maintaining accuracy demands more time, resources, and specialized expertise.

Learn more about open source generative AI

Build a comprehensive generative AI strategy—get the guide!

Success!

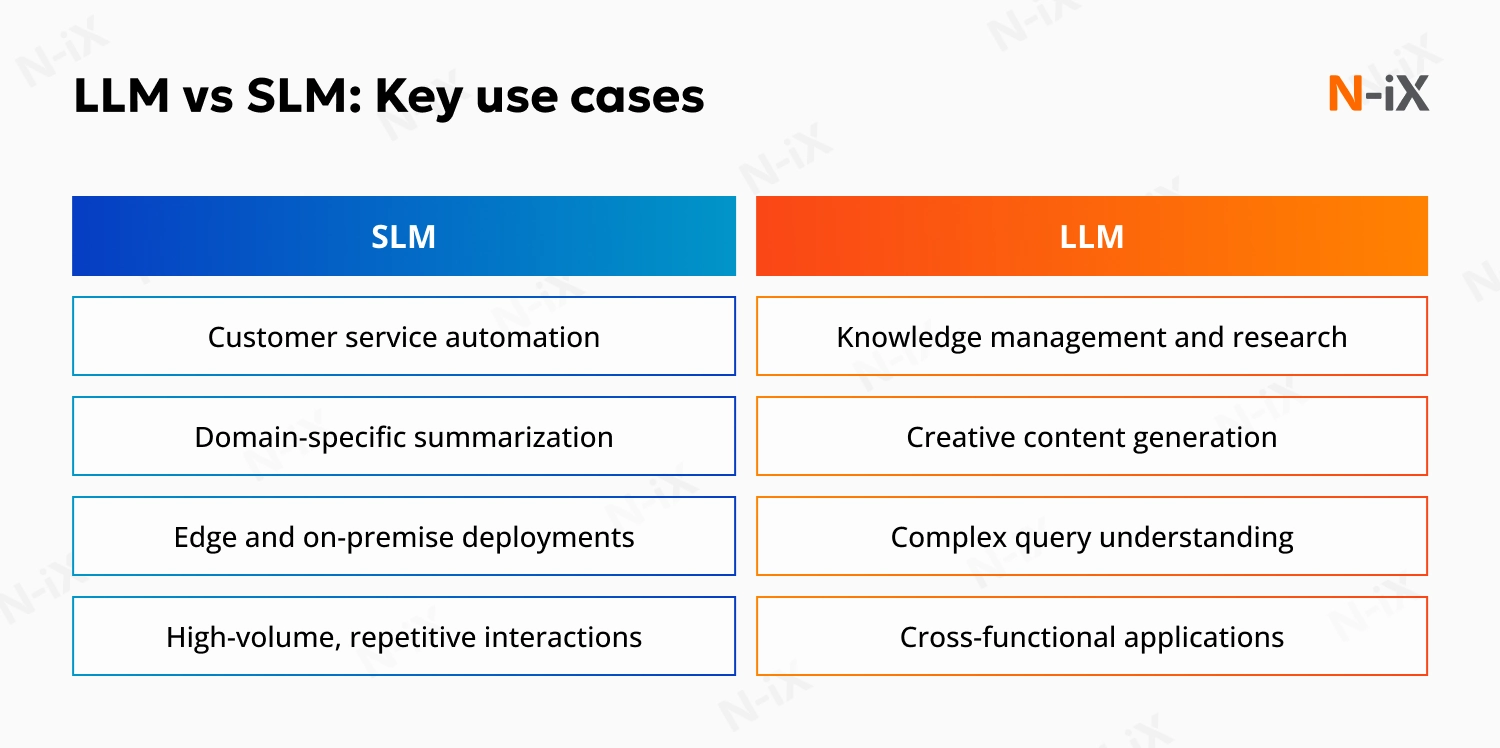

The use cases of SLM vs LLM

When comparing SLM vs LLM, the key difference is how each contributes to business value. Smaller models help optimize cost and latency, while larger models enable advanced reasoning and broader functionality.

Deloitte reports that up to 70% of organizations are already exploring or applying LLM use cases, highlighting the scale of adoption [2]. At the same time, enterprises are increasingly turning to smaller models for efficiency in targeted applications. Databricks data shows that 77% of open-source LLaMA and Mistral model users select versions with 13B parameters or fewer, reflecting a clear preference for compact models when fine-tuning domain-specific tasks [3].

Where SLMs are most effective

SLMs are a good choice for tasks where speed, efficiency, and strict data boundaries are essential. They are often deployed in environments that need reliable performance without the large-scale infrastructure's cost and governance burden.

- Customer service automation: SLMs can power task-specific chatbots that answer FAQs, handle billing inquiries, or provide product information with low latency.

- Domain-specific summarization: They can digest legal contracts, compliance guidelines, or medical notes, ensuring that content is condensed accurately within a narrow domain.

- Edge and on-premise deployments: Their smaller footprint allows SLMs to run in secure environments where data must remain on-premise or where infrastructure budgets are limited.

- High-volume, repetitive interactions: They can efficiently process millions of predictable requests, such as ranking search results or categorizing support tickets.

Where LLMs excel

LLMs bring the most value when enterprises need context-rich answers, cross-domain reasoning, or original content generation. Their broader knowledge and advanced reasoning make them suitable for strategic and knowledge-intensive functions.

- Knowledge management and research: LLMs can retrieve and synthesize information across diverse knowledge bases, making them useful for enterprise knowledge portals or R&D teams.

- Creative content generation: They can produce marketing copy, product descriptions, or campaign messaging with high fluency and nuance.

- Complex query understanding: LLMs effectively interpret ambiguous or multi-layered questions in healthcare diagnostics or financial analysis.

- Cross-functional applications: Their general-purpose design makes them adaptable across HR, sales, and operations without the need to build multiple specialized models.

One of the examples of an LLM application is automation in finance for a brokerage firm by N-iX. Our team has integrated a corporate knowledge base with generative models to assist employees in drafting emails, creating detailed Jira tickets, and retrieving internal policy documents through natural language queries. The platform combined LLM capabilities with secure multi-tenant data storage, Single sign-on authentication, and internal authorization workflows to meet the firm's strict compliance requirements. As a result, the firm improved employee efficiency, reduced manual effort, and maintained control over sensitive financial data.

You may find it interesting to read about the difference between generative AI vs LLM

Hybrid strategies

For many enterprises, the most practical approach is not an either-or decision, but a combination of SLM and LLM. For example, an SLM might handle routine support requests, while an LLM escalates complex cases. Similarly, retrieval-augmented generation (RAG) can combine a smaller model with a larger one to balance cost, accuracy, and coverage.

You may also be interested in GenAI use cases and applications

How to choose the right model for your business

Selecting the right model type is less about the technology and more about aligning it with business objectives, constraints, and workflows. Below is a practical framework to guide the decision.

1. Define the use case

- Task-specific, structured problems such as text classification, search ranking, or summarization in a narrow domain generally benefit from SLMs.

- Complex, open-ended tasks like drafting detailed reports, supporting customer service at scale, or analyzing multimodal data usually require LLMs.

2. Evaluate infrastructure and cost

- SLMs are feasible to run on mid-tier GPUs, CPUs, or edge devices, reducing infrastructure cost and energy consumption.

- LLMs demand high-performance hardware, distributed clusters, and significantly more energy, which increases the total cost of ownership.

3. Consider data sensitivity and privacy

- SLMs can often be fine-tuned and deployed fully on-premise, which is advantageous for regulated industries where data cannot leave the enterprise environment.

- LLMs often involve API-based access or heavy cloud infrastructure, which may raise compliance concerns but offer managed scalability.

4. Assess speed and scalability needs

- SLMs offer lower latency and higher throughput per dollar, making them better for high-volume, real-time applications.

- LLMs provide richer reasoning and contextual understanding but have higher latency, which can be a bottleneck under heavy concurrency.

5. Plan for maintenance and adaptability

- SLMs are easier and cheaper to retrain and update, enabling more frequent adaptation to changing business rules or regulations.

- LLMs are costly to retrain, so organizations often rely on prompt engineering or retrieval-augmented generation (RAG) rather than full model updates.

Discover more: AI vs generative AI

Conclusion

The choice between SLM vs LLM is ultimately a question of aligning technology with business objectives. Smaller models provide speed, efficiency, and tighter data control, while larger models deliver advanced reasoning and versatility across diverse functions. In practice, many enterprises will adopt a mix of both, balancing cost and performance according to the demands of each use case.

At N-iX, we help enterprises make these decisions with confidence. With over 2,400 specialists on board, including 200 data and AI experts, we have successfully delivered more than 60 data projects worldwide. Our AI and ML development teams design solutions that combine the right model choice with robust data pipelines, scalable infrastructure, and strong governance. From deploying compact SLMs for secure, high-volume processes to integrating enterprise-grade LLMs for complex reasoning and knowledge management, we enable organizations to capture value from AI integration.

References

- Capgemini. Generative AI in organizations 2024

- Deloitte. What’s next for AI?

- Databricks. State of Data + AI

Have a question?

Speak to an expert