As generative AI moves from experimentation to enterprise scale, the ability to turn human language into actionable business insight has become a pivotal capability. At the center of this shift are Natural Language Processing (NLP) and Large Language Models (LLMs), two closely related yet fundamentally different approaches to enabling machines to understand, interpret, and generate language.

While NLP and LLMs share a common goal, they address business challenges in distinct ways. Understanding where they differ, where they overlap, and how they work together is essential for organizations investing in AI and ML development, whether the aim is to improve customer engagement, automate internal workflows, enhance SEO performance, or scale data-driven decision-making. The choice between NLP and LLMs directly affects cost, performance, and integration with existing systems.

In this article, we explore the differences between NLP and LLMs. We also show how a trusted partner with relevant expertise can support this choice through reliable data pipelines, cost-efficient infrastructure, and strong compliance practices.

What is natural language processing?

Natural language processing is a branch of AI that enables computers to understand, interpret, and generate human language. In simple terms, NLP bridges the gap between how people communicate and how machines process information.

Modern NLP uses Machine Learning to analyze text or speech, recognize meaning, and respond in ways that feel more natural to humans. This technology is already embedded in everyday tools, from search engines and chatbots to voice assistants and document processing systems.

Focused on the interaction between computers and people, NLP combines a range of techniques that help machines analyze, interpret, and produce human language:

- Parsing and syntax analysis reveal the structure of sentences and the relationships between words;

- Semantic analysis interprets meaning, intent, and context, enabling a more accurate understanding of human language;

- Named entity recognition identifies important information, such as people, organizations, locations, and dates, within text;

- Text classification organizes large volumes of content by automatically assigning labels to documents, messages, or requests;

- Sentiment analysis detects the emotional tone of written content to help organizations interpret customer or user feedback;

- Machine translation converts text from one language to another to support multilingual communication at scale;

- Speech recognition transforms spoken language into text for transcription, command processing, and voice-enabled interfaces.
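To make a few of these techniques concrete, the sketch below shows tokenization, rule-based entity extraction, and lexicon-based sentiment analysis in plain Python. It is a minimal illustration under stated assumptions: the sentiment word lists and the ISO date pattern are hypothetical stand-ins, whereas production NLP systems typically rely on trained models and much richer rules.

```python
import re

# Hypothetical, hand-written sentiment lexicon for illustration only.
POSITIVE = {"fast", "reliable", "helpful", "great"}
NEGATIVE = {"slow", "broken", "confusing", "bad"}

# Matches ISO-style dates such as 2024-03-15 (an assumption; real NER covers many formats).
DATE_PATTERN = re.compile(r"\b\d{4}-\d{2}-\d{2}\b")


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"[a-z0-9']+", text.lower())


def extract_dates(text: str) -> list[str]:
    """Rule-based entity extraction: return every ISO date found in the text."""
    return DATE_PATTERN.findall(text)


def sentiment(text: str) -> str:
    """Lexicon-based sentiment: compare counts of positive and negative tokens."""
    tokens = tokenize(text)
    score = sum(t in POSITIVE for t in tokens) - sum(t in NEGATIVE for t in tokens)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


review = "Support was fast and helpful, but the 2024-03-15 update was slow."
print(tokenize(review)[:4])   # ['support', 'was', 'fast', 'and']
print(extract_dates(review))  # ['2024-03-15']
print(sentiment(review))      # positive
```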

In enterprise environments, where deterministic behavior, explainability, and predictable performance are required, N-iX applies NLP to deliver reliable, production-ready language intelligence at scale. Our experts often combine classical NLP models with ML pipelines optimized for speed, accuracy, and cost efficiency.

What are large language models?

LLMs are advanced AI systems trained on large amounts of text to understand, reason about, and generate human-like language. LLMs can be used for a wide range of tasks, from summarizing documents and writing code to supporting customer service and analyzing complex information.

At their core, LLMs predict the next token (a word or word fragment) in a sequence, but the scale of their training enables them to capture deeper patterns, including context, intent, relationships, domain knowledge, and even elements of reasoning. This lets them interact with people in a natural, flexible way, which is what makes them practical for enterprise-scale applications.
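The predict-append-repeat loop behind text generation can be illustrated with a toy bigram model. This is a simplification, not how production LLMs work internally: a real LLM predicts subword tokens with a neural network conditioned on the entire preceding context, while this sketch only counts which word follows which in a tiny, made-up corpus and always picks the most frequent follower.

```python
from collections import Counter, defaultdict
from typing import Optional


def train_bigrams(corpus: list[str]) -> dict[str, Counter]:
    """Count how often each word follows another word in the corpus."""
    counts: dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts


def predict_next(counts: dict[str, Counter], word: str) -> Optional[str]:
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


def generate(counts: dict[str, Counter], start: str, max_words: int = 5) -> str:
    """Greedily extend `start` one predicted word at a time."""
    words = [start.lower()]
    for _ in range(max_words):
        nxt = predict_next(counts, words[-1])
        if nxt is None:  # no known continuation: stop generating
            break
        words.append(nxt)
    return " ".join(words)


# Hypothetical toy corpus for illustration.
corpus = [
    "language models predict tokens",
    "language models generate text",
    "language models predict words",
]
counts = train_bigrams(corpus)
print(generate(counts, "language", max_words=2))  # language models predict
```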

Here are the main components of an LLM:

- Tokenization breaks text down into smaller units (tokens), such as words or word fragments;

- Embeddings are numerical representations of tokens that capture semantic information and encode relationships among tokens, providing context for the model;

- Attention mechanisms analyze inter-token relationships to determine the relevance and importance of different words;

- Pretraining exposes the model to large volumes of text, from which it learns grammar, general knowledge, and factual associations;

- Fine-tuning is targeted training on specific tasks or datasets that improves an LLM's performance in a particular context.
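The interplay of tokenization, embeddings, and attention can be sketched in a few lines. The example below, written in plain Python for readability, tokenizes a phrase by whitespace, looks up hypothetical two-dimensional embeddings, and applies scaled dot-product attention, softmax(Q·K^T / sqrt(d)) · V. As a simplifying assumption, it reuses the embeddings directly as queries, keys, and values; real transformers learn separate projection matrices, use subword tokenizers, and operate on vectors with hundreds or thousands of dimensions.

```python
import math


def softmax(scores: list[float]) -> list[float]:
    """Convert raw scores into weights that sum to 1 (numerically stable)."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention: softmax(Q.K^T / sqrt(d)) . V, one query at a time."""
    d = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # Weighted sum of value vectors, dimension by dimension.
        out = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights


# Toy whitespace tokenization and hypothetical embeddings.
tokens = "river bank water".split()
EMBEDDINGS = {"river": [1.0, 0.0], "bank": [0.5, 0.5], "water": [1.0, 0.2]}
x = [EMBEDDINGS[t] for t in tokens]

outputs, weights = attention(x, x, x)
for token, row in zip(tokens, weights):
    print(token, [round(w, 2) for w in row])
```

Each printed row shows how strongly one token attends to every token in the phrase; the output vectors are context-aware blends of the inputs.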

In practice, N-iX engineers focus on making LLMs production-ready by combining them with retrieval pipelines, access controls, prompt optimization, and monitoring layers that ensure reliability, security, and cost predictability at scale.
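As an illustration of how retrieval and access controls fit around an LLM, here is a minimal sketch of the retrieval step. It makes several assumptions: documents carry a hypothetical `allowed_roles` field, a bag-of-words cosine similarity stands in for the vector embeddings a production pipeline would typically use, and the final LLM call is omitted, so the code only assembles the prompt that would be sent to a model. Filtering by role before ranking means restricted documents never reach the prompt.

```python
import math
import re
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Document:
    doc_id: str
    text: str
    allowed_roles: frozenset[str]  # hypothetical access-control metadata


def vectorize(text: str) -> Counter:
    """Bag-of-words term counts (a stand-in for learned embeddings)."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two term-count vectors."""
    dot = sum(count * b[term] for term, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: list[Document], role: str, k: int = 2) -> list[Document]:
    """Apply access control first, then rank the permitted documents by similarity."""
    permitted = [d for d in docs if role in d.allowed_roles]
    q = vectorize(query)
    ranked = sorted(permitted, key=lambda d: cosine(q, vectorize(d.text)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, context_docs: list[Document]) -> str:
    """Assemble a grounded prompt; sending it to a model is out of scope here."""
    context = "\n".join(f"[{d.doc_id}] {d.text}" for d in context_docs)
    return f"Answer using only the context below.\n\nContext:\n{context}\n\nQuestion: {query}"


# Hypothetical internal policy documents.
docs = [
    Document("travel-policy", "Employees book travel through the approved portal and submit receipts within 30 days.", frozenset({"employee", "manager"})),
    Document("bonus-policy", "Bonus pools are approved by managers after the annual review.", frozenset({"manager"})),
    Document("it-policy", "Reset your password through the IT self-service portal.", frozenset({"employee", "manager"})),
]
query = "How do I submit travel receipts?"
print(build_prompt(query, retrieve(query, docs, role="employee", k=1)))
```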

NLP vs LLM: Key differences

Understanding the difference between LLMs and NLP is essential, as both address language but operate at very different scales and levels of complexity. NLP is the broader field focused on how machines analyze, interpret, and generate human language, while LLMs are large deep learning models that perform many of these tasks in a more advanced and flexible way.

To clarify the difference between LLMs and NLP in real-world scenarios, the comparison below outlines how each approach differs across key technical and operational dimensions.

Techniques and architecture

- NLP relies on rule-based systems, statistical approaches, and classical Machine Learning models designed for narrow use cases. These methods are effective for structured, task-specific applications such as sentiment analysis or entity extraction.

- LLMs are deep neural networks, typically based on the transformer architecture, that learn complex patterns and context from massive datasets rather than from predefined rules. This makes them more flexible and better able to handle unstructured, open-ended tasks.
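For contrast with rule-based methods, the following sketch shows one classical statistical NLP model: a multinomial Naive Bayes text classifier with add-one (Laplace) smoothing, trained on a handful of made-up spam and non-spam messages. It is an illustrative example of the classical approach, not a production implementation; real systems would use larger labeled datasets, better tokenization, and evaluation on held-out data.

```python
import math
from collections import Counter, defaultdict


class NaiveBayesClassifier:
    """Multinomial Naive Bayes with additive (Laplace) smoothing."""

    def __init__(self, alpha: float = 1.0):
        self.alpha = alpha
        self.word_counts: dict[str, Counter] = defaultdict(Counter)
        self.class_counts: Counter = Counter()
        self.vocab: set[str] = set()

    def fit(self, texts: list[str], labels: list[str]) -> "NaiveBayesClassifier":
        for text, label in zip(texts, labels):
            tokens = text.lower().split()
            self.class_counts[label] += 1
            self.word_counts[label].update(tokens)
            self.vocab.update(tokens)
        return self

    def predict(self, text: str) -> str:
        tokens = text.lower().split()
        total_docs = sum(self.class_counts.values())
        best_label, best_score = None, -math.inf
        for label, n_docs in self.class_counts.items():
            # Log prior plus the log likelihood of each token under this class.
            score = math.log(n_docs / total_docs)
            denom = sum(self.word_counts[label].values()) + self.alpha * len(self.vocab)
            for token in tokens:
                score += math.log((self.word_counts[label][token] + self.alpha) / denom)
            if score > best_score:
                best_label, best_score = label, score
        return best_label


# Hypothetical training data for illustration.
texts = ["win a free prize now", "claim your free reward",
         "meeting moved to friday", "please review the project plan"]
labels = ["spam", "spam", "ham", "ham"]
model = NaiveBayesClassifier().fit(texts, labels)
print(model.predict("free prize inside"))  # spam
```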

Scope and purpose

- NLP encompasses a range of methods for processing text and speech, including speech recognition, classification, tokenization, summarization, and rule-based parsing.

- LLMs are a subset of NLP: powerful general-purpose models capable of performing multiple language tasks, such as summarization, translation, and content generation, often without additional training.

Understanding vs prediction

- Traditional NLP techniques explicitly extract linguistic patterns: syntax, semantics, grammar, named entities, and relationships between words. They analyze structure but do not "understand" meaning in a human sense.

- LLMs function as advanced prediction engines, generating text one token at a time by predicting a likely next word or word fragment based on learned patterns. They do not explicitly parse language but implicitly learn its structure through scale.

Training data and context

- NLP models are often trained on smaller, curated datasets tailored to specific tasks and typically handle context at the sentence or paragraph level.

- LLMs are trained on massive, diverse datasets and can maintain context across long passages, up to the limit of their context window, making their outputs more cohesive and context-aware.
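In practice, the long-range context an LLM can use is bounded by its context window, a maximum number of tokens per request that varies by model. Applications therefore often trim conversation history to fit. The sketch below keeps the most recent messages that fit a token budget; counting whitespace-separated words is an assumption made for simplicity, since real systems count tokens with the model's own tokenizer.

```python
from typing import Callable


def word_count(message: str) -> int:
    """Rough token estimate: whitespace-separated words (an assumption)."""
    return len(message.split())


def fit_to_context(messages: list[str], max_tokens: int,
                   count_tokens: Callable[[str], int] = word_count) -> list[str]:
    """Keep the most recent messages whose combined size fits within max_tokens."""
    kept: list[str] = []
    used = 0
    for message in reversed(messages):  # walk from newest to oldest
        cost = count_tokens(message)
        if used + cost > max_tokens:
            break
        kept.append(message)
        used += cost
    return list(reversed(kept))  # restore chronological order


history = ["hello there", "how can I help you today",
           "summarize my last invoice", "it totals 420 dollars"]
print(fit_to_context(history, max_tokens=8))
# ['summarize my last invoice', 'it totals 420 dollars']
```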

Computational requirements and scalability

- Classical NLP models are lightweight, fast to train, and easy to deploy on limited hardware. They work well in environments with tight latency, cost, or hardware constraints.

- LLMs contain billions of parameters and require substantial computational resources for both training and inference. While they offer broader capabilities and greater versatility, they demand specialized hardware, such as GPUs, and more complex infrastructure.
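A back-of-the-envelope calculation shows why parameter count matters. Storing model weights takes roughly the number of parameters multiplied by the bytes per parameter (4 bytes at 32-bit precision, 2 bytes at 16-bit, 1 byte at 8-bit). The sketch below applies that formula to a hypothetical 7-billion-parameter LLM and a hypothetical 100-million-parameter task-specific model. It covers weights only; activations, caches, and, during training, gradients and optimizer state add substantially more.

```python
# Bytes needed to store one parameter at common numeric precisions.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weight_memory_gb(num_params: int, precision: str = "fp16") -> float:
    """Approximate memory for model weights alone, in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


llm_params = 7_000_000_000   # hypothetical 7B-parameter LLM
small_params = 100_000_000   # hypothetical 100M-parameter task-specific model

print(weight_memory_gb(llm_params, "fp16"))    # 14.0
print(weight_memory_gb(small_params, "fp32"))  # 0.4
print(weight_memory_gb(llm_params, "int8"))    # 7.0
```

Even at reduced precision, the larger model needs an order of magnitude more memory than the compact one, which is why LLM inference usually runs on dedicated accelerators.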

Performance across languages

- NLP models depend heavily on the availability of labeled datasets for each language. They often struggle with low-resource languages or dialects.

- LLMs are usually pretrained on multilingual datasets, providing broader coverage out of the box; however, performance still varies and is often weaker for low-resource languages.

LLM and NLP in action: Practical use cases

In the NLP vs LLM comparison, practical use cases highlight where each technology delivers the most value.

LLM use cases

LLMs shine when the task requires understanding intent, generating language, or adapting to complex, ambiguous input. Here are the applications where LLMs deliver the most value in enterprise environments.

- Conversational experiences: LLMs enable natural, human-like interaction in chatbots and virtual assistants. They interpret tone, clarify intent, and adjust responses dynamically, which rule-based NLP struggles to match.

- Content generation and summarization: LLMs produce drafts, summaries, reports, and knowledge articles at scale. Newsrooms, customer support teams, and operations teams use them to accelerate content pipelines.

- Translation with context: Beyond direct word substitution, LLMs capture cultural nuance and domain-specific terminology, resulting in more readable and contextually aligned translations.

- Support for engineers: Code generation, refactoring, and debugging capabilities make LLMs valuable to engineering teams, reducing repetitive work and accelerating delivery.

- Data and metadata automation: LLMs can analyze datasets and automatically generate descriptive metadata, supporting cataloging, compliance, and data preparation for BI teams.

An example of LLM implementation carried out by N-iX is the automation of financial processes for a leading brokerage firm. We integrated a corporate knowledge base with generative models to help employees draft emails, create Jira tickets, and retrieve internal policy documents via natural language queries. This platform combined LLM capabilities with secure multi-tenant data storage, single sign-on authentication, and internal authorization workflows to meet the firm's strict compliance requirements. As a result, the firm improved employee efficiency, reduced manual effort, and maintained control over sensitive financial data.

NLP use cases

NLP remains the best choice for tasks that require speed, precision, structure, and predictable results. Here are the most common applications.

- Text classification and monitoring: NLP powers spam detection, social listening, and feedback analysis by reliably identifying keywords, patterns, and intent categories in large volumes of text.

- Search relevance and query understanding: Search engines and enterprise search tools use NLP to interpret user queries and return more accurate, intent-based results.

- Speech-to-text workflows: NLP converts audio to text and supports transcription, accessibility tools, and voice-driven interfaces.

- Document summarization and extraction: NLP can extract key information (e.g., names, dates, amounts, categories) and produce concise summaries for compliance, legal, or document-heavy processes.

- Virtual assistants: Modern assistants such as Siri and Google Assistant rely on NLP to interpret commands, structure user requests, and enable seamless interaction.

- Data quality and normalization: NLP parses unstructured data, resolves inconsistencies (e.g., naming or formatting), and prepares information for downstream analytics.
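Extraction and normalization tasks like these are often handled with deterministic rules. The minimal sketch below pulls dollar amounts and dates out of free text and normalizes them to `Decimal` values and ISO 8601 dates. The patterns are assumptions made for illustration: only amounts prefixed with `$` are matched, and dates are assumed to follow the US month/day/year order, which a real pipeline would need to confirm per data source.

```python
import re
from datetime import datetime
from decimal import Decimal

# Illustrative patterns: "$1,250.00" or "$300", and US-style "3/5/2024" dates.
AMOUNT_RE = re.compile(r"\$\s?(\d+(?:,\d{3})*(?:\.\d{2})?)")
DATE_RE = re.compile(r"\b(\d{1,2}/\d{1,2}/\d{4})\b")


def extract_fields(text: str) -> dict[str, list]:
    """Extract amounts and dates, then normalize them for downstream analytics."""
    amounts = [Decimal(raw.replace(",", "")) for raw in AMOUNT_RE.findall(text)]
    dates = [datetime.strptime(raw, "%m/%d/%Y").date().isoformat()
             for raw in DATE_RE.findall(text)]
    return {"amounts": amounts, "dates": dates}


record = "Invoice issued 3/5/2024 for $1,250.00; payment of $300 due 04/05/2024."
print(extract_fields(record))
```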

One prominent example of NLP in practice is our success story of enhancing the user experience on a peer-to-peer (P2P) review platform. N-iX helped the client implement a Pros and Cons feature using NLP and Machine Learning (ML), enabling users to easily find product details by summarizing the advantages and disadvantages of software products. This feature analyzed user reviews, extracted keywords, and identified topics to improve product discovery. As a result, the platform attracted more users, increased marketplace traffic, and improved its search engine rankings.
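The case study does not detail the models behind the Pros and Cons feature. As a generic illustration of keyword extraction from reviews, the sketch below scores terms with a simple TF-IDF weighting: a term ranks highly for a product when it appears often in that product's reviews but rarely in reviews of other products. The review data and stopword list are hypothetical, and each product's combined reviews are treated as a single document.

```python
import math
import re
from collections import Counter

# Minimal, hypothetical stopword list.
STOPWORDS = {"the", "a", "and", "is", "it", "to", "of", "but", "very", "for", "was"}


def terms(text: str) -> list[str]:
    """Lowercase word tokens with stopwords removed."""
    return [t for t in re.findall(r"[a-z]+", text.lower()) if t not in STOPWORDS]


def top_keywords(reviews_by_product: dict[str, list[str]], product: str, k: int = 3) -> list[str]:
    """Rank a product's terms by TF-IDF, treating each product's reviews as one document."""
    n_products = len(reviews_by_product)
    doc_freq = Counter()
    for reviews in reviews_by_product.values():
        doc_freq.update(set(terms(" ".join(reviews))))
    tf = Counter(terms(" ".join(reviews_by_product[product])))
    scores = {t: count * math.log(n_products / doc_freq[t]) for t, count in tf.items()}
    # Highest score first; ties broken alphabetically for stable output.
    ranked = sorted(scores.items(), key=lambda item: (-item[1], item[0]))
    # Terms shared by every product score 0 and carry no distinguishing signal.
    return [t for t, score in ranked[:k] if score > 0]


reviews = {
    "crm_tool": ["Great integrations and easy setup",
                 "Integrations are great but reporting is slow",
                 "Easy setup, slow reporting"],
    "chat_app": ["Easy setup and fast messaging",
                 "Messaging is fast but notifications lag"],
}
print(top_keywords(reviews, "crm_tool"))  # ['great', 'integrations', 'reporting']
```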

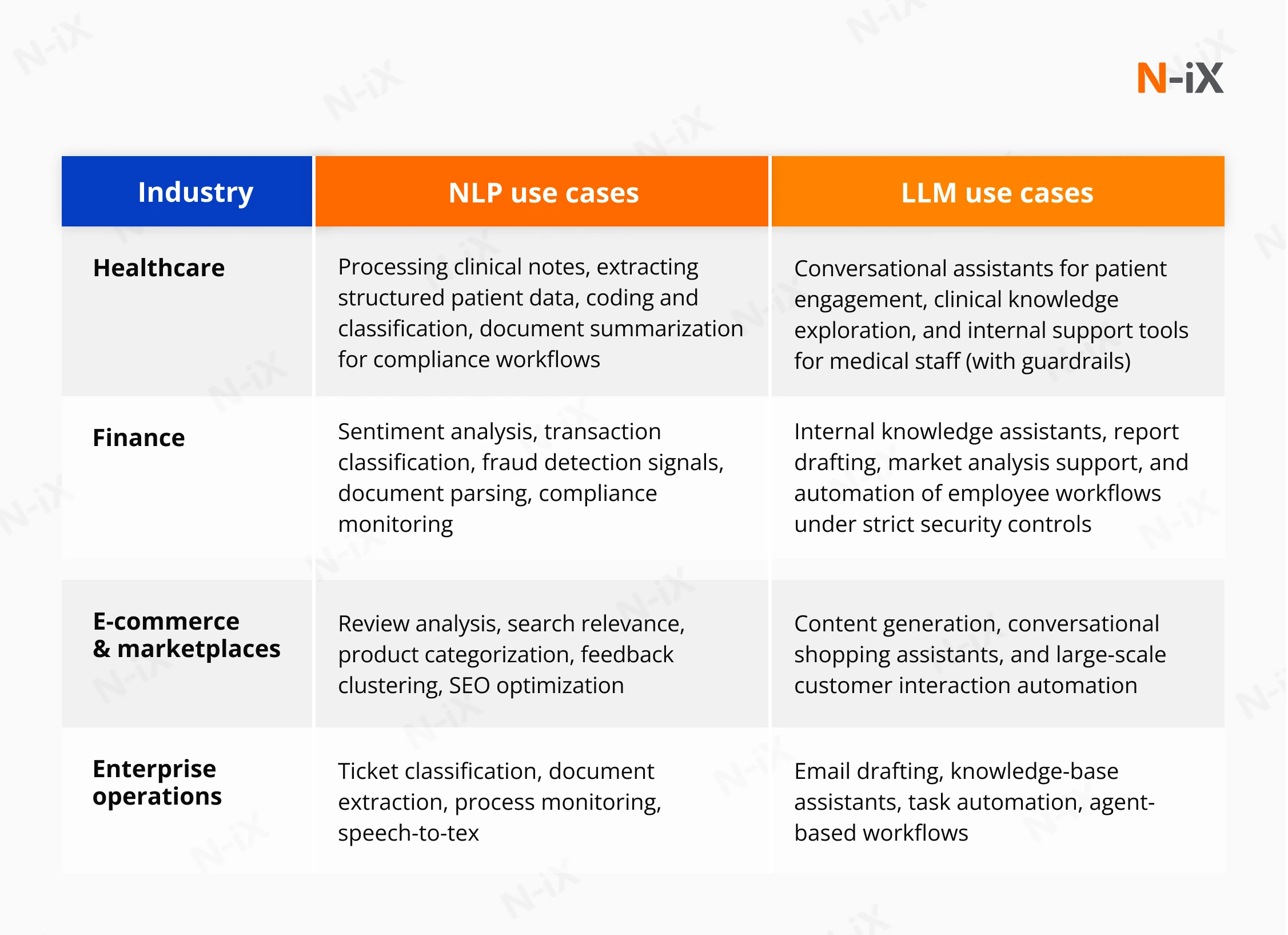

LLM vs NLP: Applications by industry

While the examples above illustrate how NLP and LLM solve specific business problems, enterprises often ask how these technologies apply across different industries. Based on N-iX experience, the table below summarizes where LLM and NLP create the most value.

Choosing the right approach for NLP and LLM adoption

The choice between NLP and LLMs is not about replacing one technology with another, but about understanding how and where each delivers the most value. NLP remains essential for enterprise use cases that require speed, structure, explainability, and predictable outcomes, such as classification, extraction, search relevance, and large-scale text processing. LLMs extend these capabilities by enabling contextual understanding, reasoning, and generative workflows that require flexibility and adaptability.
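One way to put this hybrid view into practice is a routing layer that sends each request to the appropriate engine. The sketch below is a deliberately simplified, hypothetical policy based on the trade-offs discussed in this article: structured tasks, or any task where explainability is mandatory, go to a classical NLP pipeline, while open-ended generative tasks go to an LLM. The task names are illustrative; a real router would also weigh cost, latency, data sensitivity, and measured accuracy.

```python
from enum import Enum


class Engine(Enum):
    NLP = "classical NLP pipeline"
    LLM = "large language model"


# Hypothetical task categories reflecting the trade-offs described above.
STRUCTURED_TASKS = {"classification", "entity_extraction", "search_relevance", "normalization"}
GENERATIVE_TASKS = {"summarization", "drafting", "conversation", "code_assist"}


def choose_engine(task: str, needs_explainability: bool = False) -> Engine:
    """Pick an engine for a task under a simple, rule-based routing policy."""
    if needs_explainability or task in STRUCTURED_TASKS:
        return Engine.NLP  # deterministic, explainable, cheaper to run
    if task in GENERATIVE_TASKS:
        return Engine.LLM  # flexible, context-aware generation
    raise ValueError(f"Unknown task type: {task!r}")


for task in ["classification", "drafting"]:
    print(task, "->", choose_engine(task).value)
```

Raising an error for unknown task types, rather than silently defaulting to one engine, keeps routing decisions explicit and auditable.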

As NLP and LLM technologies evolve, future progress will focus less on raw model size and more on efficiency, controllability, and production readiness. Advances in model optimization, embeddings, and deployment architectures will reduce computational costs, enable hybrid and edge deployments, improve semantic understanding, and strengthen governance around bias and compliance. Organizations that prepare for these shifts now will be better positioned to scale AI responsibly and cost-effectively.

At N-iX, we help enterprises make these decisions with confidence. With 200 data and AI specialists, we have delivered more than 60 data and AI projects to leading businesses worldwide. Our AI and ML teams design production-ready solutions that combine the right model choice with robust data pipelines, scalable infrastructure, and enterprise-grade governance. From deploying NLP-based systems for high-volume workflows to integrating enterprise LLMs for advanced reasoning and knowledge management, we help organizations run language AI reliably at scale.
