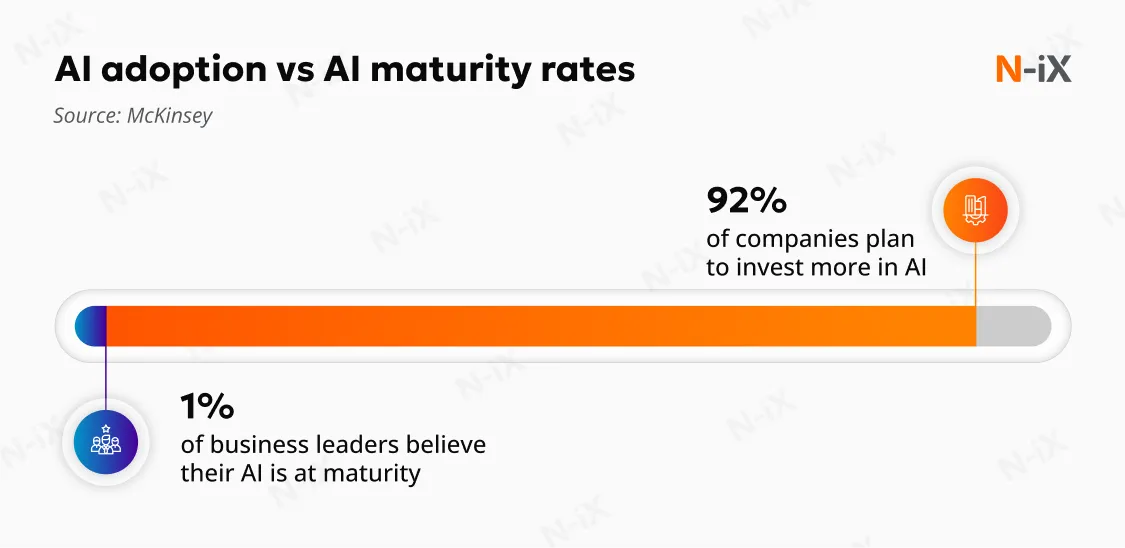

Artificial Intelligence has immense potential to help businesses gain a competitive edge, elevate automation, and advance decision-making. Its adoption rates are skyrocketing: McKinsey reports that 92% of companies plan to scale their AI development by 2028. In fact, over 78% of businesses have already deployed AI in some capacity. [1] However, as the trend grows, many organizations discover that simply bolting AI onto their old systems doesn’t propel them forward.

To deliver meaningful business outcomes, Artificial Intelligence requires a robust AI-ready infrastructure to run on. It depends on a cloud environment where data platforms, networking, security, and governance work together to support continuous training and deployment.

So, how can you build a solid foundation for your AI initiatives? Explore our guide and discover:

- What AI-ready infrastructure systems are;

- What architectural and operational steps you can take to make AI sustainable and effective;

- What vendors support AI-ready IT infrastructure.

What is AI-ready infrastructure?

AI-ready infrastructure is the foundation that allows Artificial Intelligence to move from isolated use cases into reliable and scalable business operations. It’s a coordinated system where data, compute, deployment, security, and governance work together. When this foundation is in place, AI workloads can be trained, deployed, and scaled without constant rework or unexpected operational risk.

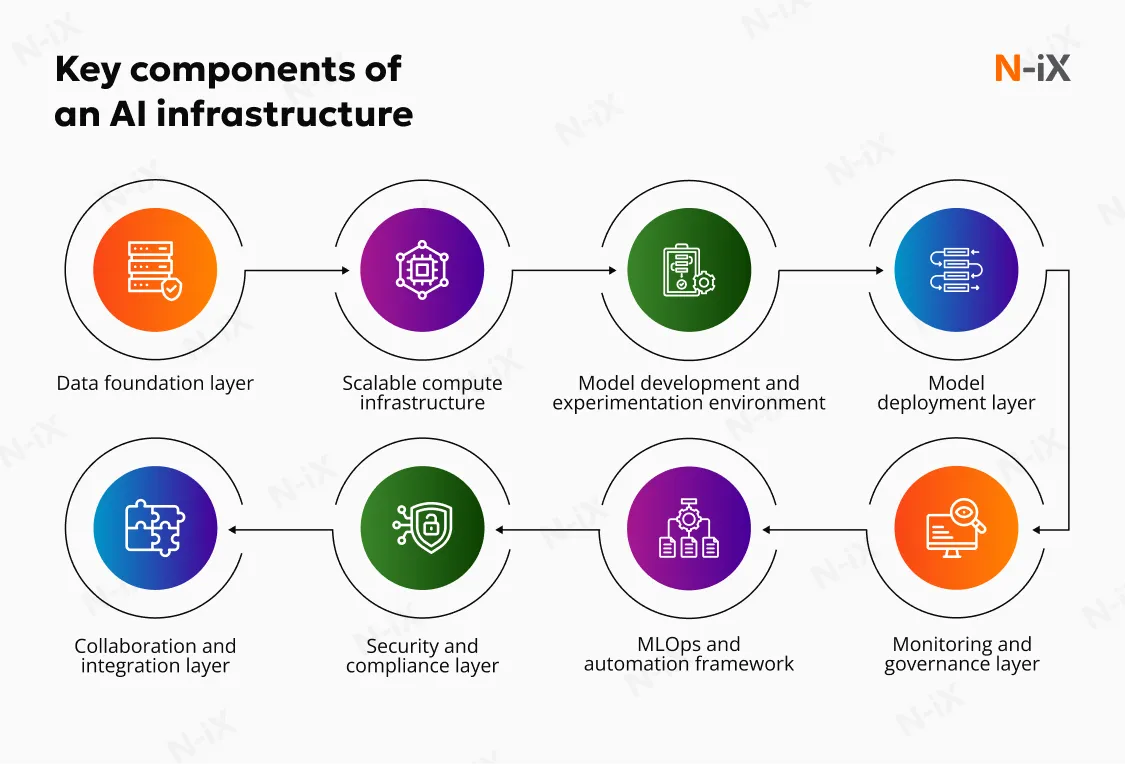

In practice, such an infrastructure brings together several core modules, including:

- Data foundation layer: Ensures data is accessible, reliable, and prepared for training, inference, and analytics;

- Scalable compute infrastructure: Provides elastic access to CPUs, GPUs, and accelerators as workload demand changes;

- Model development and experimentation environment: Supports iterative model building, testing, and validation;

- Model deployment layer: Enables stable, low-latency inference in production environments;

- Monitoring and governance layer: Tracks performance, usage, and compliance across AI workloads;

- MLOps and automation framework: Automates pipelines for training, deployment, and updates;

- Security and compliance layer: Protects data, models, and access while meeting regulatory requirements;

- Collaboration and integration layer: Connects AI systems with existing applications and workflows.

It’s important to recognize that the capability to support AI workloads is a moving target. AI technologies evolve at breakneck speed, and the goal is not to lock in a fixed architecture but to build an infrastructure that can adapt as requirements, risks, and opportunities change.

8 key practices to build an AI-ready infrastructure

The path to building a solid foundation differs depending on your existing systems, industry requirements, and business goals. Still, there are several proven steps you can take to get more value from AI, regardless of where you start.

1. Modernize your digital core to reduce technical debt

Many AI initiatives stumble early because they run into a wall of accumulated technical debt. Legacy code, aging platforms, and delayed updates often stay in place as teams prioritize continuity, speed, or short-term delivery goals. While this approach staves off disruptive modernization for a while, it limits experimentation and makes AI workloads unreliable.

Modern AI pipelines have the power of a sports car engine. Running them on patchwork infrastructure is like putting that engine into a car with worn brakes and a fragile frame. It exposes hidden dependencies and scaling limits instead of delivering insight.

Modernizing your digital core creates the foundation AI needs to operate consistently at scale. Instead of layering fixes on top of brittle systems, redesign platforms around modular architectures, clean data flows, and automation.

This is the approach N-iX took to enable Machine Learning and Computer Vision for a Global Fortune 100 engineering and technology company. Our team modernized the client’s legacy platform by migrating it to a microservices-based architecture and building a new cloud-native foundation. We implemented DevOps practices to support continuous delivery and introduced new SaaS services to enable anomaly detection, delivery prediction, and sensor data processing. We also designed a Computer Vision solution tailored to industrial logistics environments and the client’s needs.

As a result, our partner was able to embed AI in day-to-day operations across their global network of over 400 warehouses. The solution automated manual processes, reduced paperwork, supported better capacity planning, reduced downtime, and helped detect damaged goods early.

Explore the full case study: Driving logistics efficiency with industrial Machine Learning

2. Adopt a fit-for-purpose cloud architecture

Where does AI usually start? For most businesses, in the cloud. The cloud gives you fast access to compute, elastic scaling, and managed services that simplify early-stage development. This is why platforms like AWS, Microsoft Azure, and Google Cloud are often the first choice for AI initiatives. These vendors offer broad ecosystems of data, AI, and infrastructure services, making them strong starting points for experimentation and early scaling.

As AI matures, different workloads pull infrastructure in different directions. Training, analytics, and inference have distinct cost, performance, and governance requirements. A fit-for-purpose architecture lets you place workloads strategically and reassess choices over time, rather than committing to a single pattern. Understanding the strengths of different cloud platforms for AI workloads helps you design an AI-ready infrastructure that evolves with your use cases instead of constraining them.

When making AI infrastructure decisions, make sure to align them with the following business impact tiers:

- Experimental: For early-stage development and proof of concept;

- Operational: For day-to-day processes that support your business;

- Mission-critical: For the core systems that drive business value and require high performance and reliability.

This approach helps you allocate resources effectively and determine where additional complexity is justified. For example, mission-critical workloads may require more robust governance and higher security, while experimental workloads can be more flexible and cost-efficient.
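One way to make these tiers actionable is to encode them as a small lookup that teams consult when provisioning a workload. The sketch below is illustrative only: the `TierPolicy` fields and their values (for example, two regions for mission-critical workloads) are assumptions chosen for the example, not prescribed settings.

```python
from dataclasses import dataclass
from enum import Enum


class Tier(Enum):
    EXPERIMENTAL = "experimental"
    OPERATIONAL = "operational"
    MISSION_CRITICAL = "mission-critical"


@dataclass(frozen=True)
class TierPolicy:
    # All fields and values are hypothetical defaults for illustration.
    min_regions: int          # how many regions must host the workload
    requires_approval: bool   # whether a governance sign-off is needed
    allow_spot_compute: bool  # whether cheaper, interruptible compute is acceptable


POLICIES = {
    Tier.EXPERIMENTAL: TierPolicy(min_regions=1, requires_approval=False, allow_spot_compute=True),
    Tier.OPERATIONAL: TierPolicy(min_regions=1, requires_approval=True, allow_spot_compute=False),
    Tier.MISSION_CRITICAL: TierPolicy(min_regions=2, requires_approval=True, allow_spot_compute=False),
}


def policy_for(tier: Tier) -> TierPolicy:
    """Return the infrastructure requirements for a business impact tier."""
    return POLICIES[tier]
```

Keeping the mapping in one place makes it easier to reassess a workload when it moves from one tier to another.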

A good example is how N-iX helped a global media company expand its audience using cloud-based data analytics. We built a scalable AWS-based platform that consolidated fragmented data sources and enabled advanced analytics without disrupting existing systems. This foundation improved data accessibility, supported personalization initiatives, and created a flexible base for introducing AI-driven capabilities as the company’s digital strategy evolved.

Explore the full case study: Facilitating the growth of news platform audience with cloud-based data analytics

3. Implement secure networking

AI workloads depend on fast and secure data movement. Your Machine Learning models will require continuous data streams from on-premises systems, cloud platforms, and edge environments, often at high volume and low latency. Building an AI-ready network infrastructure ensures your data pipelines remain steady, training is fast, and real-time inference is possible.

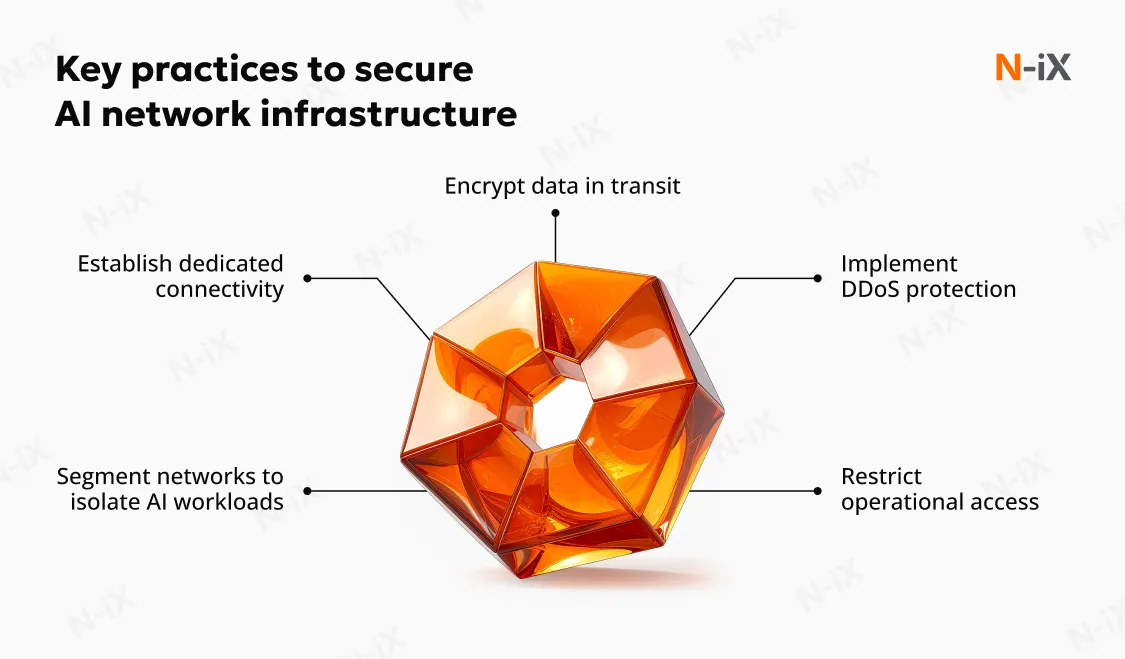

Our experts also emphasize that connectivity and security decisions must be made together. AI workloads are high-value targets, and network weaknesses can expose sensitive data and disrupt operations when exploited. Here are several key safeguards to implement:

- Implement DDoS protection for internet-facing AI services to maintain availability during traffic floods;

- Restrict operational access through secure jump servers to avoid exposing management interfaces;

- Establish dedicated connectivity for large or continuous data transfers with services like ExpressRoute for Azure or Direct Connect for AWS;

- Segment networks to isolate AI workloads from unrelated systems and limit the impact of security incidents;

- Encrypt data in transit across all network paths, including hybrid and multi-cloud connections.

4. Continuously monitor cloud costs

AI initiatives often start in the cloud because it gives you speed and flexibility early on. As usage grows and GPU-intensive workloads enter daily operations, costs can rise faster than expected. Without continuous oversight, you may end up paying for underutilized capacity, idle resources, and inefficient data flows. To implement effective cloud cost optimization, track usage against workload maturity and performance needs. Evaluate the following:

- Is a particular workload still evolving, or is it stable?

- Are you paying cloud premiums for experimentation or for something that now runs every day?

- Does the current deployment model still match how each workload is used?

Answering these questions helps you decide where each workload should run across public clouds, private environments, or dedicated on-premises infrastructure, keeping AI spending under control.
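These questions can be turned into a simple recurring review. The following is a minimal sketch, assuming you already export per-workload cost and GPU utilization samples from your cloud billing and monitoring tools. The `Workload` fields and the 30% utilization threshold are hypothetical and should be tuned to your environment.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Workload:
    name: str
    monthly_cost_usd: float
    gpu_utilization: list[float]  # sampled utilization, 0.0 to 1.0
    is_stable: bool               # True once the workload runs on a regular schedule


def review_candidates(workloads, low_util=0.3):
    """Return (name, reason) pairs for workloads whose placement deserves a second look."""
    findings = []
    for w in workloads:
        avg = mean(w.gpu_utilization) if w.gpu_utilization else 0.0
        if avg < low_util:
            # Paying for capacity that mostly sits idle.
            findings.append((w.name, "underutilized capacity"))
        elif w.is_stable:
            # Well-used and predictable: on-demand cloud pricing may no longer fit.
            findings.append((w.name, "stable workload: compare reserved or on-premises pricing"))
    return findings
```

Workloads that are still evolving and well utilized are left out of the findings, since flexible cloud capacity is usually what they need.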

5. Establish clear governance boundaries

As your AI adoption scales, clear ownership becomes essential. You need defined boundaries that clarify who owns data, models, and risk decisions across teams. Within an AI-ready infrastructure, these boundaries prevent shadow deployments, inconsistent controls, and fragmented accountability that can otherwise undermine security and delivery speed.

Governance should be applied at the workload level, not as a one-size-fits-all framework. Experimental models, customer-facing AI services, and internal decision-support systems all carry different risk profiles and compliance needs. Treating them all the same can leave high-impact workloads vulnerable while slowing innovation in low-risk areas.

Strong governance also enables controlled autonomy. Policy-driven guardrails embedded in platforms and pipelines allow teams to move fast within clearly defined limits. This approach reduces manual oversight, keeps security and compliance consistent across all environments, and ensures AI initiatives scale without creating bottlenecks.
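A policy-driven guardrail can be as simple as a check that runs in the deployment pipeline before a workload goes live. The sketch below assumes a deployment request is described as a dictionary. The field names (`owner`, `customer_facing`, `risk_review_done`, and so on) and the three rules are hypothetical examples, not a complete policy set.

```python
def check_deployment(request: dict) -> list[str]:
    """Return a list of policy violations; an empty list means the deployment may proceed."""
    violations = []
    if not request.get("owner"):
        violations.append("every workload needs a named owner")
    if request.get("customer_facing") and not request.get("risk_review_done"):
        violations.append("customer-facing services require a completed risk review")
    if request.get("uses_personal_data") and not request.get("encryption_at_rest"):
        violations.append("personal data must be encrypted at rest")
    return violations


# A low-risk experiment passes with only an owner, while a customer-facing
# service without an owner or a risk review is blocked.
print(check_deployment({"owner": "data-science-team"}))
print(check_deployment({"customer_facing": True}))
```

Because the rules apply per workload, low-risk experiments pass with minimal friction while higher-risk services face stricter checks.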

6. Ensure reliability and business continuity

Production-grade AI should not depend on a single cloud region. Outages, capacity constraints, or regional disruptions can take critical systems offline in minutes. To maintain availability, our experts recommend hosting AI endpoints across multiple cloud regions. At the same time, they warn that resilience does not mean replicating everything everywhere. Spreading sensitive assets indiscriminately can introduce security, compliance, and governance risks rather than reducing them.

As AI adoption scales, data sovereignty becomes a defining design constraint. Sensitive data, fine-tuned models, and proprietary knowledge must remain within approved legal and geographic boundaries, especially in regulated industries. This requires keeping core AI assets anchored to specific regions, while access to AI services can be exposed more broadly.

In practice, this means separating global availability from data placement. AI endpoints and traffic routing can be distributed, while data, models, and Retrieval-Augmented Generation (RAG) datasets stay within sovereign environments. Designing failover and recovery plans around these boundaries is a core part of AI-ready infrastructure management, ensuring continuity without violating regulatory obligations.

Tip from N-iX: Before deployment, verify that required AI features and capacity quotas are supported in each target region, as cloud service availability and limits can vary by location. Map which AI assets can be deployed globally and which must remain region-bound before defining failover paths.
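The mapping of global versus region-bound assets can be sketched as a small helper that filters failover targets. This is a simplified illustration: the asset dictionaries, the `allowed_regions` field, and the region names are assumptions made for the example, and a real plan would also check feature availability and quotas in each region.

```python
def failover_targets(asset: dict, candidate_regions: list[str]) -> list[str]:
    """Return the regions an asset may fail over to, excluding its current region."""
    allowed = asset.get("allowed_regions")  # None means the asset may be deployed globally
    targets = []
    for region in candidate_regions:
        if region == asset["home_region"]:
            continue
        if allowed is None or region in allowed:
            targets.append(region)
    return targets


# Hypothetical assets: a globally routable endpoint and a region-bound RAG dataset.
endpoint = {"name": "inference-endpoint", "home_region": "eu-west", "allowed_regions": None}
rag_index = {"name": "rag-index", "home_region": "eu-west", "allowed_regions": ["eu-west", "eu-central"]}
regions = ["eu-west", "eu-central", "us-east"]

print(failover_targets(endpoint, regions))   # ['eu-central', 'us-east']
print(failover_targets(rag_index, regions))  # ['eu-central']
```

The endpoint can fail over anywhere, while the dataset only fails over within its approved boundary, which mirrors the separation of availability from data placement described above.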

7. Consider edge AI for optimal performance

Sometimes, you need to run AI workloads closer to where data is generated, rather than sending everything from devices, machines, or local gateways to the cloud. This approach is called edge AI. Within an AI-ready infrastructure, running workloads at the edge can reduce latency and dependency on constant connectivity. It becomes a deliberate execution layer, not a replacement for cloud or private platforms.

Edge AI makes sense when speed, resilience, or data locality is a decisive factor. It enables real-time responses, reduces data transfer costs, and limits exposure of sensitive data in transit. Here are several common use cases across industries:

- Manufacturing: Detecting defects on production lines, monitoring equipment health, triggering safety responses in real time;

- Automotive: Processing sensor data for driver assistance, supporting autonomous functions, enabling predictive maintenance in vehicles;

- Retail: Powering in-store analytics, such as shelf monitoring, detecting theft or loss, and personalizing digital displays without cloud latency;

- Energy and utilities: Monitoring assets in remote locations, detecting faults in real time, supporting grid stability with limited connectivity.

Read more: Bringing real-time intelligence to connected devices with edge AI

8. Bring in external expertise to navigate complexity

Building an AI-ready foundation is rarely a linear journey from point A to point B. You need to assess legacy constraints, data readiness, security gaps, and cost implications before deciding whether to modernize or rebuild parts of the technology stack. For many organizations, knowing where to start is the hardest part.

An experienced technology partner like N-iX can help you structure these decisions, validate trade-offs, and avoid costly missteps. We bring cross-industry expertise in cloud, data, security, and AI engineering, supporting organizations throughout:

- Assessing their existing systems and deciding what to modernize vs replace;

- Comparing AI-ready infrastructure options to understand which architectural patterns and platforms best align with their AI workload maturity;

- Planning phased implementation to avoid disruption;

- Designing target architectures aligned with AI workload needs;

- Prioritizing changes based on risk, cost, and business impact;

- Establishing guardrails for security, governance, and cost control.

Why build an AI-ready infrastructure with N-iX?

At N-iX, we help organizations design and evolve infrastructure that can support AI in real business conditions. Our services span AI development, cloud architecture design, infrastructure management, and data platform engineering. We focus on building environments that remain reliable as your AI workloads scale and business objectives change.

With 23 years of experience in technology consulting, N-iX supports organizations across finance, manufacturing, healthcare, retail, and other data-intensive industries. As a Microsoft AI Cloud Partner and trusted partner of AWS and Google Cloud, we help organizations adopt hyperscaler technologies with confidence. Compliant with ISO 27001, SOC 2, PCI DSS, and GDPR, we ensure AI platforms are built on a secure and well-governed foundation.

References

1. Artificial Intelligence Index Report 2025—The Stanford Institute for Human-Centered AI
