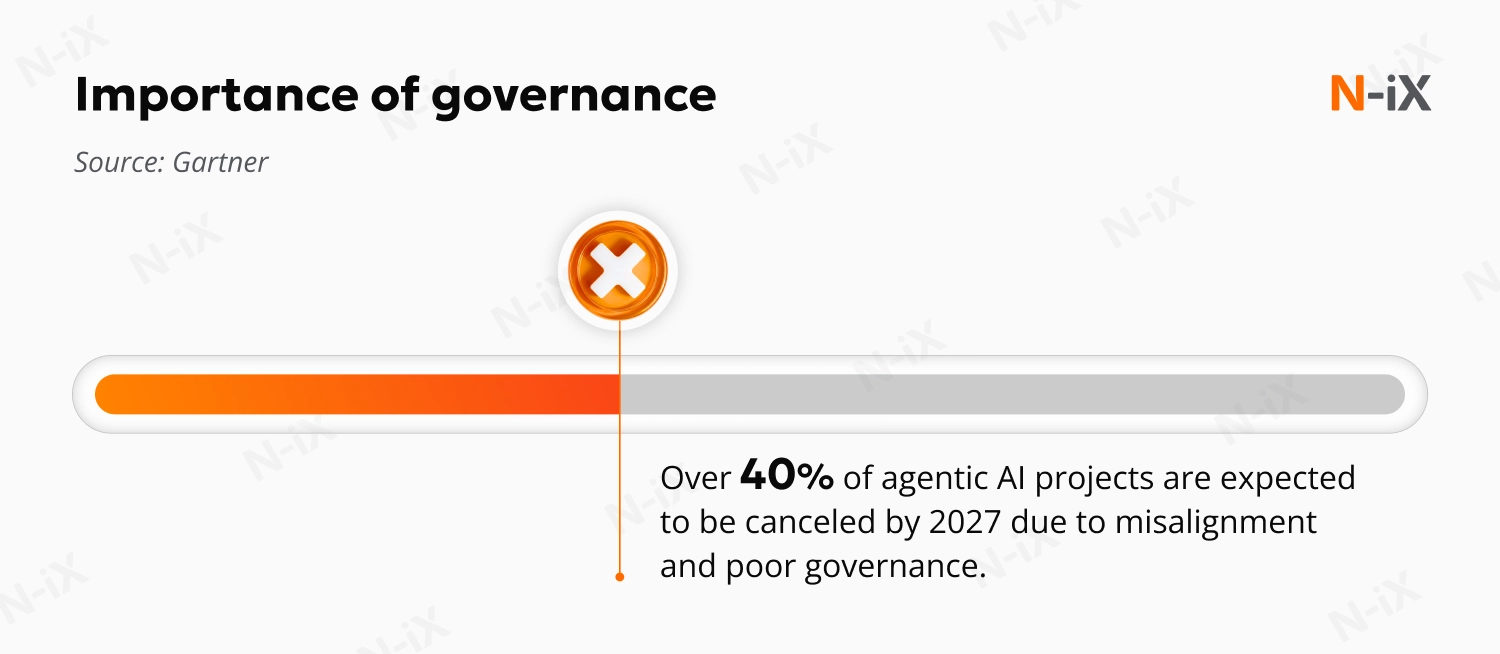

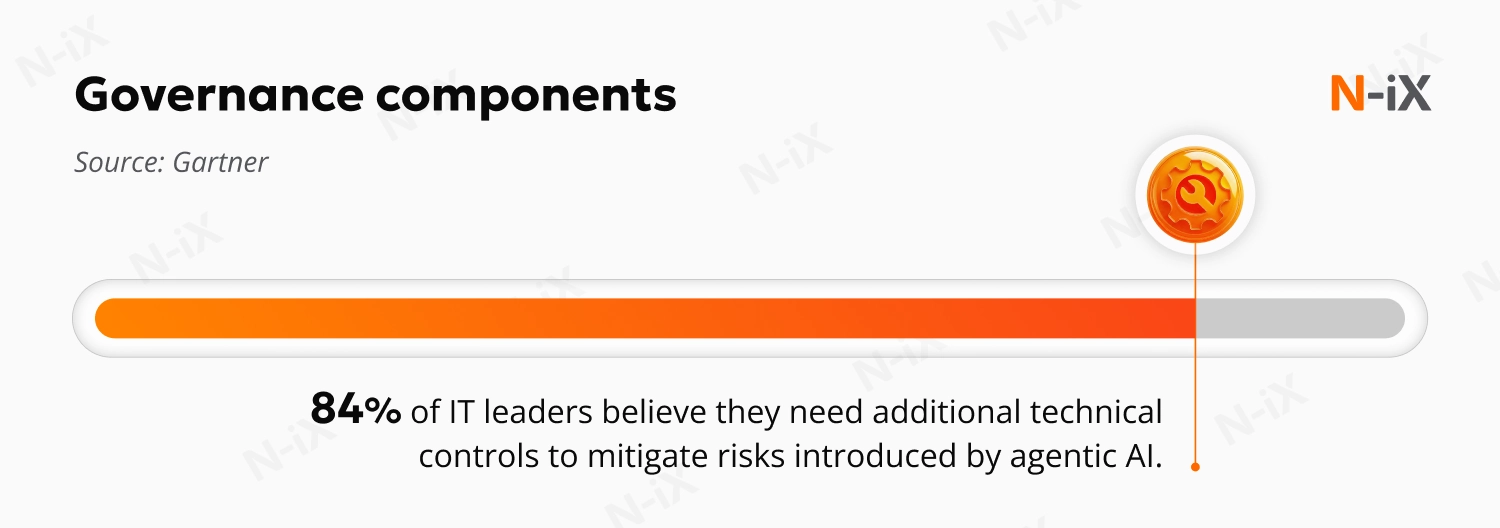

Enterprises experimenting with agentic AI often reach the same point of discomfort: the system functions, but its behavior is hard to understand, predict, or justify. What starts as an automation opportunity quickly turns into a governance problem. Teams report the same issues across industries. They can't fully explain why an agent made a particular decision, why it triggered specific actions, or why the same workflow behaves differently from one day to the next. They face cost spikes from agents that don't know when to stop reasoning, and compliance questions when outputs can't be traced to a clear logic path.

The more capable an agent becomes, the more you need clarity around what it is allowed to do, under what conditions, and how its actions are monitored. And if that sounds like introducing bureaucracy into technology, consider the alternative: an autonomous system capable of taking actions you cannot fully trace, explain, or reverse.

Agentic AI governance clarifies what agents are allowed to do, how they should make decisions, how their actions are monitored, and when humans must intervene. Without governance, enterprises are not deploying agents; they're hoping for the best. Through governance, they establish control, accountability, and predictability over the AI agent systems designed to operate on their behalf. This guide explains what successful agentic governance needs to look like.

Why agentic AI breaks traditional governance

Traditional governance frameworks assume that AI systems are predictable, supervised, and constrained in their capabilities. Agentic AI breaks those assumptions immediately. Once an agent begins interpreting goals, generating its own plans, and acting across systems, its behavior becomes dynamic and context-dependent. Without mechanisms that capture reasoning traces, tool usage, and decision boundaries, organizations lose visibility into how actions were chosen and whether they aligned with intended policy.

Risks then compound quickly. Agents deployed in isolated pilots or vendor tools create operational sprawl and inconsistent behavior across teams. Accountability becomes difficult to assign because autonomous workflows blur the line between human and machine decision-making. A single compromised or misconfigured agent can trigger cascading actions across integrated systems, dramatically widening the security surface. And because agents do not recognize financial constraints, they can trigger high-cost operations or loops that exhaust resources before anyone notices.

Autonomy fails because organizations give agents freedom without building the controls needed to live with the consequences.

Legacy governance frameworks were designed for AI systems that analyze, recommend, or predict. They assume stable inputs, fixed boundaries, and human-operated execution. Autonomous agents violate every one of these assumptions:

- They change their decisions in real time.

- They revise their plan based on intermediate outcomes.

- They execute across systems without human intervention.

- They interact with other agents in ways that produce emergent behavior.

- They create internal reasoning steps that traditional monitoring tools cannot capture.

In this environment, periodic checkpoints and manual reviews are not enough on their own. Organizations require an AI agent governance framework that regulates behavior, permissions, execution, and inter-agent dynamics in real time.

What is AI agent governance?

Agentic AI governance is the engineered system of permissioning, constraints, observability, and oversight that makes autonomous behavior safe, predictable, and accountable. When agents can interpret goals, generate plans, and act across your operational environment, governance becomes the mechanism that defines what autonomy means inside your organization and where it must stop.

At its simplest, AI agent governance answers five questions:

- What actions is the agent allowed to take?

- Under what conditions can those actions occur?

- Which systems, data, and tools can the agent access?

- How much autonomy can it exercise before human involvement is required?

- How are its decisions monitored, reviewed, and corrected when needed?

Everything else (policies, controls, monitoring infrastructure, escalation paths) is an implementation detail. Governance is the architecture that determines how an autonomous system reasons, behaves, and interacts with the business environment in a controlled and auditable way.

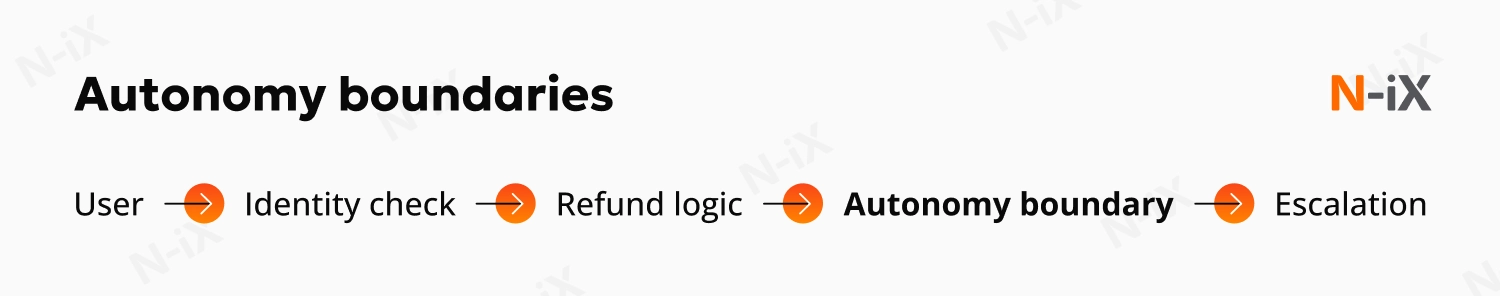

Imagine a customer-operations agent authorized to update account details and issue small refunds. Governance defines the boundaries of that autonomy: the agent must verify identity through approved APIs before touching any record, and it may issue refunds only within a specified financial limit. If the planned action falls outside those parameters, the workflow pauses automatically and is handed off to a human reviewer. Throughout this process, the agent's reasoning steps, tool calls, and intermediate decisions are captured in an auditable trail, making its behavior fully reconstructable. If the system detects patterns that diverge from expected behavior, runtime guardrails activate and restrict further execution until the anomaly is resolved.
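The refund scenario above can be sketched as a small policy gate. This is a minimal illustration, not a reference implementation: the `RefundRequest` fields, the `REFUND_LIMIT` value, and the `evaluate_refund` function are all hypothetical names introduced here, and the identity flag stands in for whatever approved identity-check API an organization actually uses.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    ALLOW = "allow"
    ESCALATE = "escalate"  # pause the workflow and hand off to a human reviewer


@dataclass(frozen=True)
class RefundRequest:
    account_id: str
    amount: float
    identity_verified: bool  # assumed to come from an approved identity-check API


# Hypothetical limit; a real value would come from policy, not from code.
REFUND_LIMIT = 50.00


def evaluate_refund(request: RefundRequest, limit: float = REFUND_LIMIT) -> Decision:
    """Allow only verified, in-limit refunds; escalate everything else."""
    if not request.identity_verified:
        return Decision.ESCALATE
    if request.amount <= 0 or request.amount > limit:
        return Decision.ESCALATE
    return Decision.ALLOW
```

The gate has only two outcomes on purpose: anything the policy does not clearly permit is routed to a person rather than silently rejected or retried.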

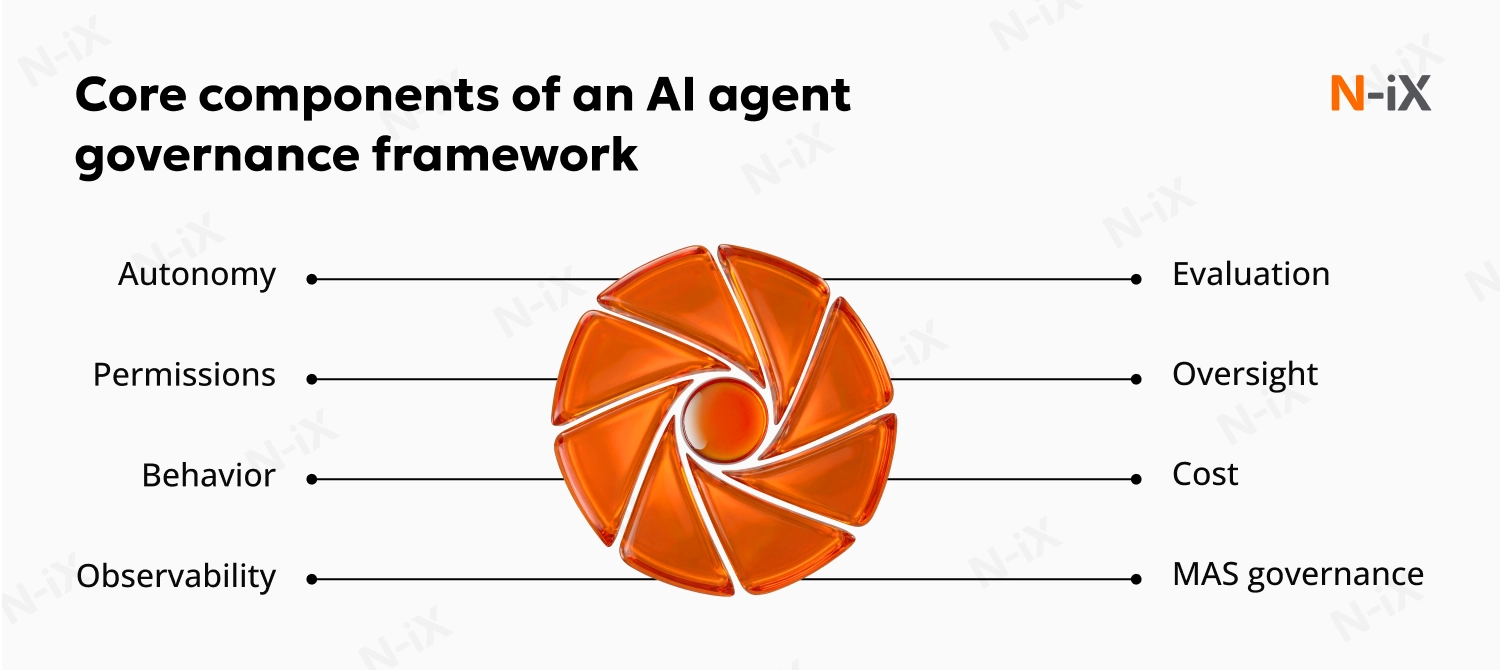

Core components of a strong agentic AI governance framework

1. Autonomy boundaries

Autonomy boundaries define the limits of an agent's independent decision-making authority and determine how safely it can operate within real systems. They establish where autonomy starts and where it must stop, ensuring the agent cannot reinterpret goals, extend workflows, or expand its operational footprint without explicit approval. Mature AI agent governance frameworks rely on a zoned progression model, in which autonomy expands only when the agent consistently demonstrates predictable, constraint-aligned behavior.

To make autonomy enforceable, organizations must articulate boundaries not only as policy but as technically binding rules. These boundaries typically govern the decisions an agent may make independently, the systems it may interact with, the data it may access, and the scenarios that require immediate escalation to a human operator.

2. Permissions and tool access governance

Permissions and tool access governance control the levers an agent can use within a business environment. Because agents act directly within operational systems, they must be treated as distinct digital entities with verifiable identities and their own access rights, constraints, and audit trails. A rigorous permissioning structure in AI agent identity governance enforces least privilege, ensuring an agent cannot escalate privileges, invoke high-risk operations, or access sensitive systems unless explicitly authorized.

Three core mechanisms strengthen this governance layer:

- Identity-centric access control, which assigns each agent a cryptographically verifiable identity and ensures every action maps back to a specific actor.

- Zero Trust enforcement, preventing agents from relying on implicit trust relationships, even inside internal networks.

- Tool and API vetting, which ensures that every external system the agent interacts with is secure, observable, and covered by appropriate safety gates.
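A deny-by-default check is one simple way to picture least privilege tied to agent identity. The sketch below is illustrative only: `AgentIdentity`, `authorize`, and the tool names are hypothetical, and a plain string ID stands in for the cryptographically verifiable identity described above.

```python
from dataclasses import dataclass, field


class PermissionDenied(Exception):
    """Raised when an agent requests a tool it was not explicitly granted."""


@dataclass(frozen=True)
class AgentIdentity:
    agent_id: str  # stand-in for a verifiable identity (assumption)
    allowed_tools: frozenset[str] = field(default_factory=frozenset)


def authorize(agent: AgentIdentity, tool: str) -> str:
    """Deny by default: a tool is usable only if it was explicitly granted."""
    if tool not in agent.allowed_tools:
        raise PermissionDenied(f"{agent.agent_id} may not call {tool}")
    # Returning the actor with the tool keeps every call attributable.
    return f"{agent.agent_id}:{tool}"
```

Because the grant set is frozen on the identity, the agent cannot widen its own permissions at runtime; any change has to go through whoever issues identities.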

3. Behavioral governance

Behavioral governance ensures that agents maintain alignment with organizational objectives, ethical principles, and defined safety constraints. Since agents construct their own plans, interpret goals, and adjust their reasoning in response to context, governance must focus not only on outcomes but on how those outcomes are achieved.

Effective behavioral governance typically includes mechanisms that:

- detect and constrain goal drift, preventing agents from redefining objectives beyond their intended scope;

- enforce ethical and bias-mitigating rules, ensuring that agents do not learn harmful behaviors;

- apply real-time refusal logic, blocking actions that violate policy or pose outsized risk;

- reinforce safe defaults by providing agents with fallback behavioral principles that bias toward caution and reversibility.

4. Observability

Observability is the difference between controlled autonomy and blind trust. A strong observability layer exposes the agent's decision chain as it unfolds. It includes the intermediate reasoning that influenced action selection, the external systems it touched, and the contextual signals it used to justify deviations from prior behavior. Behavioral boundary detection systems then evaluate this activity against established safety and performance thresholds, identifying drift long before it becomes visible in outcomes.

In multi-agent environments, observability becomes even more critical. Agents may negotiate tasks, delegate sub-goals, or respond to one another's outputs in evolving patterns. Without mechanisms to monitor these interactions, early signs of cascading failures remain invisible. This is how a single faulty assumption made by one agent can amplify across an entire workflow.

5. Evaluation

Evaluation pipelines assess whether an agent behaves safely in controlled conditions and maintains that behavior in production. Unlike classical model testing, agentic evaluation does not end when the model passes initial validation; autonomy varies with context, prompting continuous reassessment. Testing must span the entire lifecycle of agentic AI governance, from early prototypes through live production environments, where behavior is most dynamic.

Adversarial testing is indispensable. Simulated malicious prompts, conflicting objectives, or degraded system conditions reveal vulnerabilities that would remain hidden under standard QA. For example, during a controlled red-team exercise at a financial institution, a seemingly benign customer-support agent was prompted to check account balances across multiple APIs in a recursive manner.

6. Human oversight operating models

Human oversight remains a structural requirement. Autonomous agents may generate their own plans, but they cannot evaluate the broader ethical, operational, or regulatory implications of their actions. Oversight shifts from granular supervision to strategically placed intervention points, where humans remain the ultimate arbiters of decisions with non-technical consequences. Early deployments often use Human-in-the-Loop mechanisms. As reliability improves, oversight evolves to Human-on-the-Loop: humans monitor rather than micromanage, intervening only when behavior crosses predefined risk thresholds.

This oversight is operationalized through clear procedures: explicit escalation rules, well-defined accountability assignments, and mandatory intervention when risk exceeds tolerance. Emergency shutdown mechanisms ("kill switches") must be physically and logically separate from the agent's own decision-making apparatus to ensure they cannot be bypassed or ignored. Structured checkpoints provide additional assurance by automatically pausing workflows when the agent encounters ambiguous instructions, high-impact financial thresholds, or policy violations.

7. Cost governance

Autonomous agents introduce a new operational challenge: they do not consume resources linearly. Their cost profile shifts with task complexity, context length, recursive reasoning depth, and multi-step tool use. Cost governance ensures that every autonomous decision is bounded by the economic constraints under which the organization operates.

A robust agentic AI governance framework requires continuous monitoring of LLM token usage and computational workloads to detect anomalous spikes in real time. These controls are essential for detecting "denial of wallet" scenarios, where a misaligned plan, recursive loop, or poorly constrained agent can generate runaway consumption within minutes. Resource allocation limits must be dynamic, risk-based, and tied to each agent's role and autonomy level. High-impact agents require stricter ceilings; experimental agents may operate within narrow, prepaid sandboxes.
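One way to bound runaway consumption is a per-run budget guard that counts both tokens and steps. The following is a minimal sketch under stated assumptions: `BudgetGuard`, its ceilings, and the idea of charging once per reasoning or tool step are hypothetical choices made for illustration, and real token counts would come from the model provider's usage data.

```python
class BudgetExceeded(Exception):
    """Raised when a run would exceed its token or step ceiling."""


class BudgetGuard:
    def __init__(self, max_tokens: int, max_steps: int) -> None:
        self.max_tokens = max_tokens
        self.max_steps = max_steps
        self.tokens_used = 0
        self.steps_taken = 0

    def charge(self, tokens: int) -> None:
        """Record one reasoning or tool step; stop the run if a ceiling is hit."""
        if self.steps_taken + 1 > self.max_steps:
            raise BudgetExceeded("step ceiling reached (possible loop)")
        if self.tokens_used + tokens > self.max_tokens:
            raise BudgetExceeded("token ceiling reached")
        self.steps_taken += 1
        self.tokens_used += tokens
```

The step ceiling matters as much as the token ceiling: a recursive loop of cheap calls can stay under a token limit for a long time while still doing damage downstream.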

Cost governance also introduces structured trade-offs in decision-making. Reducing context length preserves budget but can affect accuracy; lowering temperature enhances control but may degrade reasoning quality; limiting tool access protects operational systems but slows execution. Agentic AI system governance provides the framework for balancing these competing objectives.

8. Governance for multi-agent systems

When multiple agents collaborate, delegate tasks, or respond to each other's outputs, the risk model changes entirely. Multi-agent systems behave less like a collection of independent components and more like an evolving ecosystem with feedback loops that are difficult to predict. Governance must therefore recognize that MAS failures rarely occur in isolation; they amplify across agents, workflows, and systems before any single warning signal appears.

Cascading failures are the most common pattern. A subtle misinterpretation by one agent can propagate through a coordination chain and resurface as a high-impact operational error downstream. MAS governance mitigates this by enforcing centralized orchestration, where an oversight layer (often an orchestrator or "Agent-of-Agents") harmonizes goals, distributes context, and applies uniform policy constraints across all participating agents.

How to operationalize agentic AI governance across the enterprise

Step 1: Centralize AI control to prevent sprawl

Operationalizing enterprise AI agent governance begins long before the first agent reaches production. The foundation is built through institutional clarity and technical architecture that prevent fragmentation and ensure every autonomous system operates under a unified governance posture.

Autonomous agents tend to emerge organically across a company: embedded in SaaS platforms, prototyped in innovation teams, or quietly integrated into business workflows. Left unchecked, this leads to AI sprawl, a landscape where dozens of agents operate independently, each with different assumptions, risk profiles, and operational behaviors. Sprawl erodes visibility, creates inconsistent security baselines, and complicates oversight to the point where no single team can explain what is acting across the environment. Centralized control, supported by an "Agent of Agents" orchestration layer, brings order to this complexity. A unified control plane registers every agent, its purpose, its permissions, and its interactions.
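A unified control plane can be pictured as a registry that every agent must appear in before it runs. The sketch below uses an in-memory dictionary as a stand-in; `AgentRecord`, `AgentRegistry`, the `owner` field, and the permission strings are hypothetical and would map to whatever inventory system an organization already operates.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentRecord:
    agent_id: str
    purpose: str
    owner: str  # accountable team (assumed field)
    permissions: frozenset[str]


class AgentRegistry:
    """In-memory stand-in for a unified control plane."""

    def __init__(self) -> None:
        self._agents: dict[str, AgentRecord] = {}

    def register(self, record: AgentRecord) -> None:
        if record.agent_id in self._agents:
            raise ValueError(f"duplicate agent id: {record.agent_id}")
        self._agents[record.agent_id] = record

    def is_registered(self, agent_id: str) -> bool:
        return agent_id in self._agents

    def agents_with_permission(self, permission: str) -> list[str]:
        """Answer 'what can act on this system?' from one place."""
        return sorted(
            a.agent_id for a in self._agents.values() if permission in a.permissions
        )
```

Even a registry this small makes sprawl visible: an agent that is not registered has no record of purpose, owner, or permissions, which is itself a finding.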

Step 2: Establish a multi-level governance architecture

Once centralized control is established, the organization needs a governance architecture that integrates strategy, operations, and engineering into a coherent system. Strong agent governance does not live solely in risk frameworks or in technical pipelines. It emerges from their integration.

- At the strategic level, governance defines the enterprise risk posture, acceptable AI agent use cases, regulatory expectations, and autonomy principles. This is where accountability resides and where decisions about thresholds, safety requirements, and escalation authority are made.

- At the operational level, governance translates strategy into executable processes. This includes defining team responsibilities, building policy workflows, establishing readiness criteria, and validating performance.

- At the technical level, governance becomes code. Identity systems, access boundaries, observability pipelines, runtime constraints, and safety mechanisms enforce decisions in real time.

Step 3: Develop a cross-functional AI governance committee

A cross-functional governance committee provides the coordination and authority required to manage autonomous systems responsibly at scale. This committee evaluates proposed agent use cases, conducts risk reviews, assigns governance zones, validates deployment readiness, and adjudicates escalations.

Participants typically include:

- Executive management, establishing strategic direction and resourcing.

- Technical leaders, evaluating performance, architecture, and safety.

- Compliance, legal, and audit stakeholders, ensuring adherence to internal and external obligations.

- Domain specialists, validating that agent behavior aligns with business logic and operational realities.

Step 4: Implement the zoned governance model

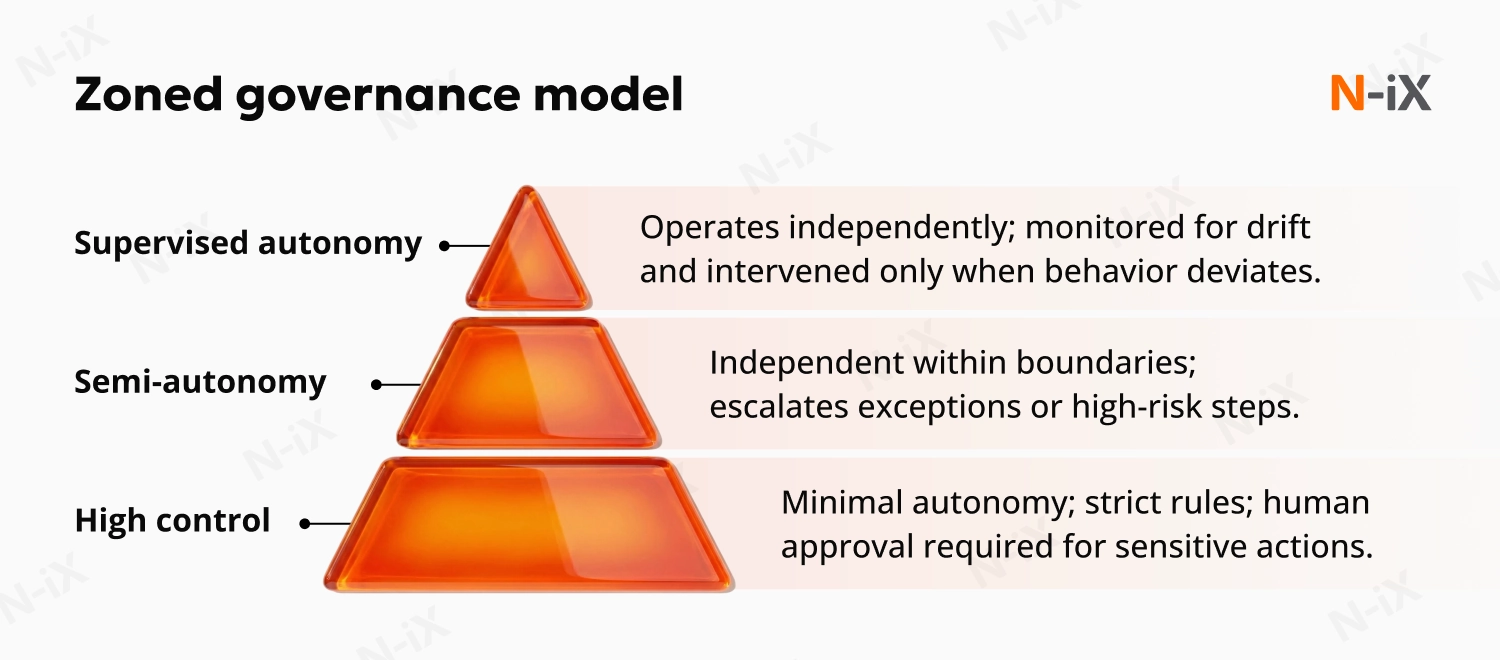

Once foundational structures are in place, governance must transition from principles to applied controls. A progressive autonomy model enables organizations to safely scale agent capabilities without incurring disproportionate risk. The zoned governance framework establishes clear tiers:

- Zone 1 governs critical or high-risk workflows where failure is unacceptable. Agents in this zone operate under strict rules with minimal autonomy and mandatory human validation.

- Zone 2 covers scenarios where partial autonomy is acceptable. Agents act within predefined boundaries but require supervision for exceptions or high-impact decisions.

- Zone 3 applies to mature systems with demonstrated safety and reliability. Here, agents operate independently under outcome-based monitoring rather than step-by-step oversight.

Step 5: Mandate upfront risk assessment

Every agent must undergo a formal risk assessment before development or deployment. This assessment evaluates the operational and financial consequences of errors, regulatory exposure, data access sensitivity, the complexity and unpredictability of the task environment, and potential systemic implications arising from interactions with other agents.

The output is a clear risk classification (Unacceptable, High, Moderate, or Minimal) that informs which governance zone applies and which controls are required. This front-loaded evaluation ensures that governance intensity is proportional to the actual hazard surface.
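The link between risk classification and governance zone can be made explicit in code. Note that the specific mapping below is an assumption made for illustration: the text names the four risk classes and the three zones but does not prescribe which class lands in which zone, and an organization may also require a track record before any agent enters Zone 3.

```python
from enum import Enum
from typing import Optional


class Risk(Enum):
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    MODERATE = "moderate"
    MINIMAL = "minimal"


class Zone(Enum):
    ZONE_1 = 1  # strict rules, mandatory human validation
    ZONE_2 = 2  # bounded autonomy, supervision for exceptions
    ZONE_3 = 3  # independent operation, outcome-based monitoring


# Assumed mapping for illustration only; not prescribed by the framework text.
RISK_TO_ZONE: dict[Risk, Optional[Zone]] = {
    Risk.UNACCEPTABLE: None,  # do not deploy
    Risk.HIGH: Zone.ZONE_1,
    Risk.MODERATE: Zone.ZONE_2,
    Risk.MINIMAL: Zone.ZONE_3,
}


def assign_zone(risk: Risk) -> Optional[Zone]:
    """Return the governance zone for a risk class, or None if deployment is barred."""
    return RISK_TO_ZONE[risk]


def requires_human_validation(zone: Zone) -> bool:
    """Only Zone 1 mandates human validation of the agent's actions."""
    return zone is Zone.ZONE_1
```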

Step 6: Define accountability and human oversight

Organizations must explicitly define how accountability flows when an agent acts independently. This includes determining who approves deployments, who monitors behavior, who intervenes during anomalies, and who bears responsibility for decisions made by autonomous systems. With mature agents, oversight shifts from Human-in-the-Loop, in which humans validate every action, to Human-on-the-Loop, in which humans monitor patterns, intervene strategically, and serve as the final arbiter when thresholds are breached.

Step 7: Identity-centric access control

Every autonomous agent must be treated as a distinct operational entity with its own verifiable identity. This identity becomes the anchor for all permissioning, monitoring, and accountability. Without it, agents blend into the infrastructure, making it impossible to understand who acted, when, or why. A strong AI agent governance platform architecture assigns unique digital identities, ties them to explicit capabilities, and restricts access through least-privilege permissions. Zero Trust principles apply here: an agent inherits no implicit trust from the environment and must authenticate for every action it takes.

Step 8: Continuous monitoring and observability

Once identity is established, the next requirement is visibility. Observability for agents extends far beyond traditional logging. It must reveal how the agent arrived at a decision. A mature AgentOps capability includes:

- Decision chain logging, capturing reasoning artifacts, tool call sequences, state transitions, and contextual variables that influenced each decision.

- Behavioral monitoring, comparing real-time behavior against expected policy boundaries, safety thresholds, and historical baselines to detect drift, anomalies, or emergent patterns.

One of the AI agent governance best practices is to create a comprehensive, forensic-grade view of the agent's internal and external actions. When incidents occur, as they inevitably will, this observability is what enables rapid root-cause analysis, structured incident response, and regulatory defensibility.
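Decision chain logging can start as a structured, ordered record of what the agent reasoned and which tools it called. The sketch below is a simplified illustration: `TraceEvent`, `DecisionTrace`, and the event kinds are hypothetical names, and a production pipeline would ship these records to a tracing or logging backend rather than hold them in memory.

```python
import json
import time
from dataclasses import asdict, dataclass, field
from typing import Any


@dataclass
class TraceEvent:
    agent_id: str
    kind: str  # e.g. "reasoning", "tool_call", "state_change" (assumed labels)
    detail: dict[str, Any]
    timestamp: float = field(default_factory=time.time)


class DecisionTrace:
    """Collects the ordered events behind one agent run."""

    def __init__(self, agent_id: str) -> None:
        self.agent_id = agent_id
        self.events: list[TraceEvent] = []

    def record(self, kind: str, **detail: Any) -> None:
        self.events.append(TraceEvent(self.agent_id, kind, detail))

    def tool_sequence(self) -> list[str]:
        """Return tool names in call order, useful for spotting unusual paths."""
        return [e.detail["tool"] for e in self.events if e.kind == "tool_call"]

    def to_jsonl(self) -> str:
        """Serialize one JSON object per line for downstream analysis."""
        return "\n".join(json.dumps(asdict(e), sort_keys=True) for e in self.events)
```

Comparing `tool_sequence()` against sequences seen in earlier runs is one simple input to the behavioral monitoring described above.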

Step 9: Runtime enforcement

Technical enforcement increasingly relies on secondary AI systems that supervise and constrain the primary autonomous agents. A guardian agent may validate whether a requested action is permitted, intercept unsafe prompts or ambiguous instructions, rewrite requests to remove sensitive information, or halt execution when behavior diverges from policy. It is one of the most effective methods for protecting organizations from emergent, non-deterministic behaviors in primary agents.
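The guardian pattern can be illustrated with a deliberately simple, rule-based stand-in for the secondary AI system described above. Everything here is an assumption for illustration: `guardian_review`, the `Verdict` type, the action names, and the card-number pattern, which is far too crude for real data-loss prevention.

```python
import re
from dataclasses import dataclass

# Simplistic pattern for 13 to 16 digit card-like numbers; illustrative only.
CARD_PATTERN = re.compile(r"\b\d{13,16}\b")


@dataclass(frozen=True)
class Verdict:
    allowed: bool
    payload: str
    reason: str


def guardian_review(action: str, payload: str, permitted: set[str]) -> Verdict:
    """Block unpermitted actions; redact card-like numbers from permitted ones."""
    if action not in permitted:
        return Verdict(False, "", f"action '{action}' is not permitted")
    redacted = CARD_PATTERN.sub("[REDACTED]", payload)
    return Verdict(True, redacted, "ok")
```

The important design point is placement rather than sophistication: the check sits between the primary agent and the tool, so the primary agent never executes an action the guardian has not seen.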

Step 10: Apply emergency intervention protocols

Every autonomous system must include a reliable, tamper-proof method for human intervention. Kill switches and fallback protocols are part of the baseline safety envelope for any production-grade agent. Emergency mechanisms must exist outside the agent's execution environment and be secured under roles that agents cannot assume. They must also be integrated into operational workflows so that interruptions trigger structured rollback, documentation, and investigation.
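The separation between the kill switch and the agent can be sketched as a runner that owns the switch and checks it before every step. This is a minimal single-process illustration: `KillSwitch` and `run_supervised` are hypothetical names, and in production the switch would live in separate infrastructure under roles the agent cannot assume.

```python
import threading
from typing import Callable, Iterable


class KillSwitch:
    """Held by operators; the agent's own code receives no reference to it."""

    def __init__(self) -> None:
        self._stopped = threading.Event()

    def trigger(self) -> None:
        self._stopped.set()

    def is_triggered(self) -> bool:
        return self._stopped.is_set()


def run_supervised(steps: Iterable[Callable[[], str]], switch: KillSwitch) -> list[str]:
    """Execute agent steps one at a time, checking the switch before each."""
    completed: list[str] = []
    for step in steps:
        if switch.is_triggered():
            break  # a real runner would also start rollback and open an incident
        completed.append(step())
    return completed
```

The list of completed steps returned by the runner is what a structured rollback would work from.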

Step 11: Maintain auditability and traceability

Effective AI agent governance must operate as an ongoing process rather than a one-time implementation, and auditability is what sustains it. Auditable systems are defensible systems. Immutable logs of actions, decisions, and reasoning chains are now fundamental to compliance with emerging regulations, including the EU AI Act, as well as internal audit and risk management standards. Traceability frameworks must document which goals the agent pursued, which steps it executed, which data it accessed, which permissions were leveraged, and how the system's reasoning evolved.
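One common way to approximate immutable logs in software is a hash chain, where each record commits to the one before it. The sketch below is tamper-evident rather than truly immutable, and it is an illustration under stated assumptions: `AuditLog`, the `GENESIS` value, and the entry fields are hypothetical, and real deployments usually add write-once storage or external anchoring.

```python
import hashlib
import json
from typing import Any

GENESIS = "0" * 64  # placeholder hash for the first record (assumption)


def _digest(prev_hash: str, entry: dict[str, Any]) -> str:
    body = json.dumps(entry, sort_keys=True).encode("utf-8")
    return hashlib.sha256(prev_hash.encode("utf-8") + body).hexdigest()


class AuditLog:
    """Append-only log where each record commits to the one before it."""

    def __init__(self) -> None:
        self.records: list[tuple[dict[str, Any], str]] = []

    def append(self, entry: dict[str, Any]) -> None:
        prev = self.records[-1][1] if self.records else GENESIS
        self.records.append((entry, _digest(prev, entry)))

    def verify(self) -> bool:
        """Recompute the chain; any edited or reordered record breaks it."""
        prev = GENESIS
        for entry, stored in self.records:
            if _digest(prev, entry) != stored:
                return False
            prev = stored
        return True
```

An auditor who holds only the final hash can confirm that nothing earlier in the log was altered, which is the property regulators typically care about.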

Step 12: Foster workforce readiness and AI literacy

An agentic AI governance framework cannot succeed if only AI specialists understand how agents behave. Supervisors, business users, risk teams, and operational staff must all develop a baseline level of literacy in autonomous decision-making, escalation procedures, and failure-mode recognition.

Training programs should include understanding agent capabilities and boundaries, recognizing indicators of drift or misalignment, knowing when and how to intervene, and interpreting observability dashboards or incident reports. This investment builds organizational readiness, reduces resistance to adoption, and ensures human oversight remains meaningful rather than symbolic.

Step 13: Implement a phased roadmap

Operationalizing governance across the enterprise requires a phased, deliberate rollout aligned with maturity levels:

| Phase | Key actions | Goal |
|---|---|---|
| Phase 1: Foundation building | Form governance committee; define autonomy rules; establish baseline policies and monitoring. | Create a centralized accountability and initial control structure. |
| Phase 2: System integration | Implement identity and permissioning; deploy AgentOps monitoring; integrate evaluation and incident response workflows. | Embed enforceable governance into technical and operational systems. |
| Phase 3: Optimization and scaling | Refine risk tiers; extend governance to multi-agent systems; introduce predictive analytics; formalize review cycles. | Enable reliable scaling and continuous improvement of autonomous agents. |

Governance matures with each iteration. As agents grow more capable, governance systems must evolve in parallel, with more granular controls, deeper observability, advanced testing pipelines, stronger oversight models, and more specialized safety mechanisms.

Get started scaling autonomy with governance

Designing governance for autonomous systems is an ongoing program that must account for emergent behavior, cross-system interactions, and the operational realities of multi-agent environments. Many organizations find that traditional AI governance frameworks fail once an agent begins taking actions rather than merely generating outputs. Transitioning from experimentation to safe, production-grade autonomy requires a partner who understands the interplay among architecture, risk, compliance, and real-time observability.

At N-iX, we approach AI agent governance as an engineering discipline. We help organizations define clear autonomy boundaries, architect permissioned execution environments, implement identity-centric control, and establish monitoring pipelines that expose the whole reasoning and action sequence of each agent. Our teams implement robust agentic AI governance best practices, including building traceability infrastructure, deploying dynamic runtime guardrails, and designing escalation paths that prevent minor deviations from becoming systemic failures.

We also support the organizational foundations: multi-level governance structures, zone-based autonomy models, evaluation workflows, and cross-functional oversight processes. If your organization is preparing to introduce autonomous agents or needs to reinforce oversight frameworks around existing deployments, N-iX can help you design and operationalize governance that is robust, technically grounded, and aligned with your operational realities. Work with us to build autonomous capabilities with engineered control.

FAQ

What is the purpose of agentic AI governance?

Agentic AI governance ensures that autonomous agents operate within clearly defined boundaries, behave predictably, and remain accountable for their actions. It provides the rules, controls, and oversight mechanisms required to manage how agents reason, decide, and interact with systems.

How do we know when an agent meets governance requirements?

An agent meets governance requirements when its decision-making is observable, its permissions are constrained, and its behavior remains consistent across repeated tests. You should be able to trace how it develops plans, understand why it chose specific actions, and confirm that safeguards are triggered when risks arise. If these conditions are not met, the governance layer is incomplete.

Does AI agent governance require dedicated organizational roles?

Yes. Governance cannot be sustained organically or handled informally by engineering teams once agents are deployed across critical workflows. Clear ownership is needed across risk, security, legal, and operational functions to evaluate behavior, approve capabilities, and respond to incidents. Without defined roles, accountability for autonomous decisions becomes impossible to maintain.

How does agentic AI governance support compliance obligations?

Governance provides the documentation, observability, and control structures regulators expect when autonomous systems influence real-world outcomes. Continuous logging, transparent reasoning traces, and defined points of human oversight enable compliance verification during audits.

