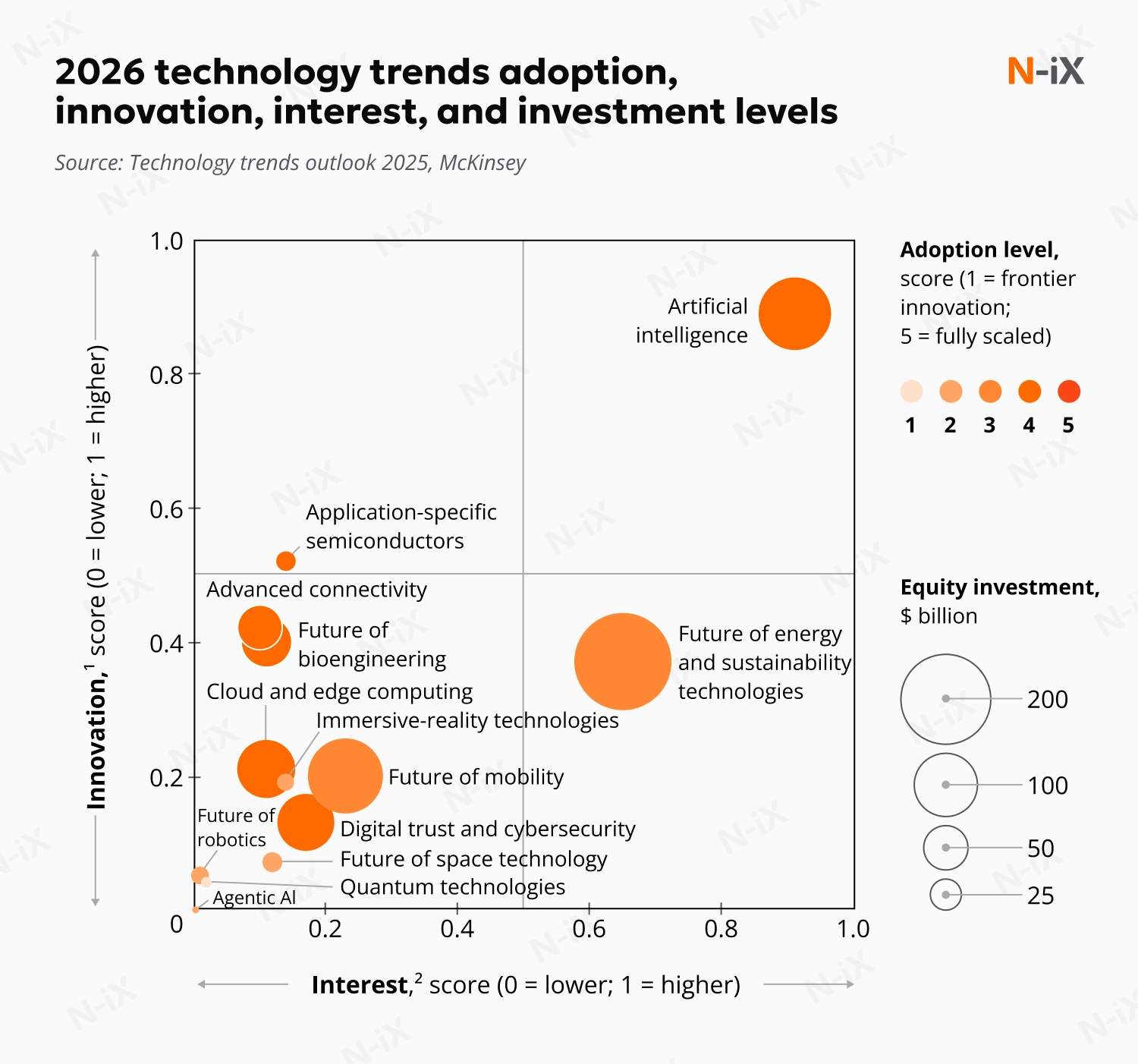

The next wave of technologies promises to change how businesses operate in ways that go beyond what's possible now. But these advances come with a substantial infrastructure cost that's already straining global energy and computing capacity. Businesses can no longer afford to experiment with every emerging trend, making strategic technology consulting more critical than ever. Deliberate, focused investments aligned with specific operational needs are becoming essential.

Let's look at the twelve technology trends in 2026 that stand out for their potential to drive revenue growth, reduce operational costs, and establish competitive moats through 2028, from AI infrastructure to software engineering practices.

Key 2026 technology trends: Investment priorities for business leaders

Thoughtful technology selection now matters more than budget size. Companies must identify which innovations among rapidly growing markets will deliver a competitive advantage in their specific context. We've grouped the trends into clear categories (AI capabilities, infrastructure needs, operational improvements, and security concerns) to help you navigate what's relevant to your operations.

AI & intelligent automation

Artificial intelligence isn't one technology; it's several related capabilities changing how businesses work. AI agents can complete tasks independently, and the agentic AI market is expanding at 44.6% annually. Governance systems help manage AI risks. Industry-specific AI models understand specialized fields. Generative AI creates content and code, and its market is forecast to reach $442B by 2031. Together, these represent the biggest opportunities and challenges for companies adopting new technology.

1. Agentic AI: The new workforce

Included on Gartner's list of top strategic technology trends for 2026, agentic AI is rapidly gaining enterprise adoption. The agentic AI market is forecast to grow from $7.06B in 2025 to $93.2B by 2032 [1]. This growth reflects enterprise demand for systems that operate as virtual coworkers rather than simple automation tools.
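To make the growth figures easier to sanity-check, here is a minimal sketch of the standard compound annual growth rate (CAGR) formula applied to the market sizes quoted above. The function name `cagr` is illustrative and not taken from any cited source, and the sketch assumes seven compounding years between 2025 and 2032.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Return the compound annual growth rate as a fraction (0.10 means 10% a year)."""
    if start_value <= 0 or end_value <= 0 or years <= 0:
        raise ValueError("start_value, end_value, and years must all be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Figures quoted in the text: $7.06B in 2025 growing to $93.2B by 2032.
# Assumption: growth compounds once per year over 2032 - 2025 = 7 years.
agentic_ai_rate = cagr(7.06, 93.2, 2032 - 2025)
print(f"Implied annual growth: {agentic_ai_rate:.1%}")
```

Under these assumptions the implied rate comes out close to the 44.6% annual growth quoted earlier for agentic AI. The same function can be used to check any forecast that gives a start value, an end value, and a time span.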

What sets agentic AI apart: Traditional automation required developers to program every step and exception. Agentic AI handles the long tail of unpredictable tasks by using foundation models that respond appropriately to situations they've never encountered. These systems use digital tools designed for humans (web browsers, forms, APIs), eliminating the need for custom integrations. They receive instructions in natural language, generate work plans that humans can understand and modify, and communicate among themselves to coordinate complex workflows.

Business applications: Organizations are deploying agentic AI across functions previously considered too complex for automation. In customer support, AI agents don't just answer questions; they process orders, manage returns, and connect to logistics systems autonomously. Software development teams use agents that write, test, and deploy code based on natural language descriptions. Research teams deploy deep-research agents that design workflows, execute searches across hundreds of sources, and synthesize comprehensive reports in hours rather than weeks. Early adopters report significant productivity gains: credit analysts at one bank achieved 60% productivity increases and around 30% faster decision-making by using AI agents to automate memo drafting [2].

Your move: Find 3-5 processes where employees spend considerable time on tasks that follow patterns but need decision-making. Look for work that crosses multiple software systems and involves several steps. Start testing in low-risk areas where mistakes won't cause significant problems and where you can easily measure results. Before you begin, decide how you'll track progress: how much faster work gets done, how many errors occur, and whether employees find the system helpful. These measurements tell you whether the investment is working.
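One way to track the three measurements named above (speed, errors, and perceived helpfulness) is to record each completed task before and during the pilot and compare the two sets. The sketch below is illustrative only: the `TaskRecord` fields, the 1-5 rating scale, and the sample numbers are all assumptions, not data from any real deployment.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Optional


@dataclass
class TaskRecord:
    """One completed task. All field names are hypothetical."""
    minutes_taken: float
    had_error: bool
    helpfulness: Optional[int] = None  # assumed 1-5 survey score, pilot tasks only


def summarize(records):
    """Average handling time and share of tasks that contained an error."""
    return {
        "avg_minutes": mean(r.minutes_taken for r in records),
        "error_rate": sum(r.had_error for r in records) / len(records),
    }


def compare(baseline, pilot):
    """Compare pilot results against the pre-pilot baseline."""
    before, after = summarize(baseline), summarize(pilot)
    ratings = [r.helpfulness for r in pilot if r.helpfulness is not None]
    return {
        "time_saved_pct": 100 * (1 - after["avg_minutes"] / before["avg_minutes"]),
        "error_rate_before": before["error_rate"],
        "error_rate_after": after["error_rate"],
        "avg_helpfulness": mean(ratings) if ratings else None,
    }


# Made-up sample data for illustration.
baseline = [TaskRecord(40, False), TaskRecord(50, True),
            TaskRecord(60, False), TaskRecord(50, False)]
pilot = [TaskRecord(30, False, 4), TaskRecord(35, False, 5),
         TaskRecord(40, True, 3), TaskRecord(35, False, 4)]

result = compare(baseline, pilot)
print(result)
```

Capturing the baseline before the pilot starts matters here: without it, there is nothing to compare the pilot numbers against.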

2. AI governance: From optional to mandatory

AI governance used to be optional. Now it's legally required. The EU AI Act fines companies up to €35M for violations [3]. Regulators and customers increasingly demand that companies explain how their AI systems make decisions, prove that those systems treat people fairly, and show who's accountable when things go wrong.

Business impact: Good governance saves money by preventing problems. Companies that skip governance face fines, damage their reputation when AI makes biased or wrong decisions, and waste time fixing systems that should never have been deployed. Companies with strong governance build customer trust, get employees to use AI tools, and win contracts as buyers require proof of responsible AI practices.

Your move: Form an AI oversight team that includes people from legal, IT, operations, and risk management. List every AI system your company uses, both machine learning and generative AI tools. Write clear policies covering who can use AI, how to handle data, how to verify AI outputs are accurate, and what to do when problems arise. This preparation lets you expand AI use confidently.

3. Vertical AI: Industry-specific intelligence

Among technology trends in 2026, industry-specific AI trained on specialized data and domain knowledge stands out for creating competitive advantages that general-purpose AI models cannot replicate. General AI models know a little about everything but aren't experts in anything. Vertical AI models are trained specifically for one industry, such as healthcare, legal, logistics, or manufacturing, so they understand the terminology, regulations, and workflows that matter in that field.

Business value: Vertical AI eliminates the need to write elaborate prompts explaining context. The system already understands your industry. You get more accurate results because the AI is trained on real scenarios from your field, not general internet content. Integration is easier because vertical AI vendors build direct connections to the software your industry uses: medical records systems, legal databases, or manufacturing platforms.

Your move: Research vertical AI vendors in your industry now: what data they use, how they connect to your software, and what results they deliver. If no good options exist, check whether your company has proprietary data that could support building a custom AI model. Developing specialized AI requires dedicated engineering skills and computing resources, so partnering with an experienced technology provider can help you move faster and capture the 2-3 year advantage that early adopters typically gain.

4. Generative AI: Scaling creative and technical work

Generative AI has become standard infrastructure for creating content, writing software, and conducting research. The market will grow at a CAGR of 37%, driven by more use cases and falling costs [4]. Some operations cost 50 times less than a year ago, making generative AI affordable for more business applications. Smaller specialized models can run directly on devices, reducing reliance on cloud services and improving response times.

Business applications: Pharmaceutical companies use generative AI to predict how molecules will behave, cutting drug development time and costs. 71% of software developers report productivity gains of 10% to 25% when pairing generative AI with strong quality checks [5]. Marketing teams create personalized content for different audience segments without hiring proportionally more writers or designers. The best returns come from embedding generative AI into existing workflows, not using it as a separate tool.

Your move: Partner with an experienced tech provider to build generative AI into R&D and creative work. Define when AI outputs need expert review before use. Track speed metrics (how fast work gets done) and quality metrics (accuracy, how often work needs revision) to understand real value. Train teams to write effective prompts and critically verify outputs; these skills determine whether generative AI saves time or creates more work.

Infrastructure and computing

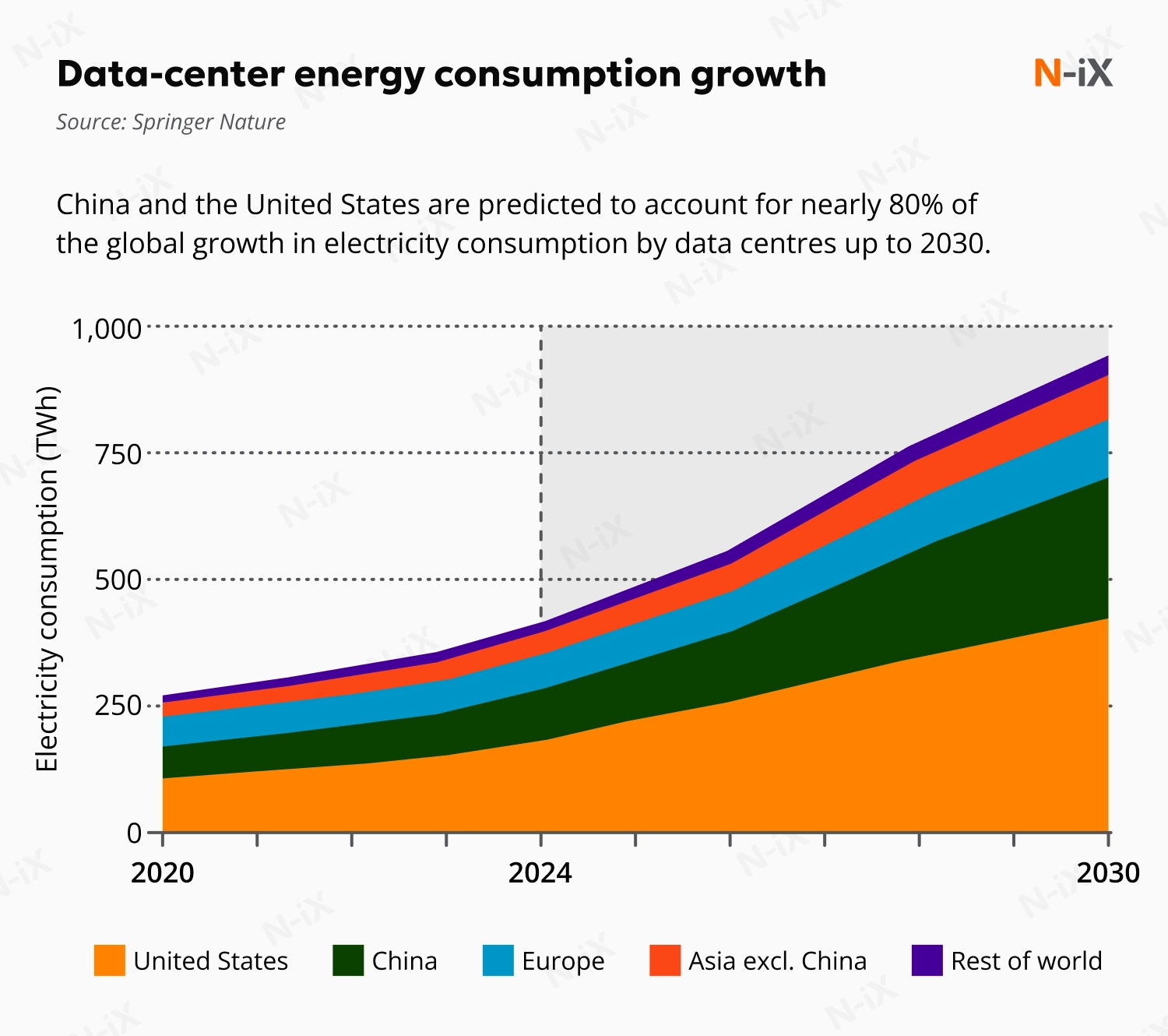

The infrastructure layer (data centers, edge computing nodes, and the networks connecting them) faces significant new strain. AI workloads require exponentially more computing power, specialized hardware, and energy than previous technology waves. This section covers how infrastructure evolves to meet these demands and where businesses must invest to remain competitive.

5. AI-native infrastructure: Rethinking the stack

Traditional data centers designed for web applications and databases cannot handle AI workloads efficiently. AI-native infrastructure represents a redesign of computing systems around the specific requirements of training and running AI models. Power-intensive AI infrastructure is making electricity supply the primary constraint on AI expansion.

Business impact: Infrastructure decisions made now determine what's possible in 2027-2028. Companies treating AI infrastructure as an IT expense rather than a strategic capability will be constrained when deploying AI at scale. Competitors with purpose-built AI infrastructure gain advantages in model training speed, inference cost, and ability to run larger models. This translates directly to better AI applications, faster iteration cycles, and lower operational costs per AI interaction. AI-native infrastructure requires significant upfront investment but delivers better control and lower long-term costs than cloud services for high-volume production workloads.

Your move: Audit your infrastructure's readiness for AI workloads now. Assess power capacity, cooling systems, and network bandwidth. If you are planning significant AI deployment, engage with facility managers and utilities early, because lead times for power upgrades can exceed software development timelines. For organizations not ready for capital investment in AI infrastructure, understand cloud options thoroughly, including costs at production scale and vendor lock-in risks.

6. Edge computing: Processing at the source

Cloud computing centralizes data and processing in massive data centers. Edge computing reverses this, moving computation closer to where data originates: factories, retail stores, hospitals, and vehicles, often directly on Internet of Things sensors and embedded devices. This shift addresses latency, bandwidth costs, and data sovereignty requirements that the cloud alone cannot solve. The edge computing market will grow from $564.56B in 2025 to over $5T by 2034, representing 28% annual growth [6].

Business impact: Edge computing processes data instantly at its source rather than sending it to distant cloud servers, enabling real-time responses in manufacturing, retail, and healthcare. This approach also keeps sensitive data local for easier compliance and cuts costs by sending only important insights instead of massive amounts of raw data.

The tradeoff is increased complexity. Instead of managing everything in one central location, companies must now handle software updates, security, and monitoring across hundreds or thousands of separate edge devices. Making this work requires new skills and often specialized management software designed specifically for distributed systems.
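The idea of sending only important insights instead of raw data can be sketched as a small function that runs on the edge device. It reduces a window of sensor readings to a compact summary and keeps the full value only for readings outside an expected range. The function name, the thresholds, and the sample temperatures are assumptions made for illustration.

```python
from statistics import mean


def summarize_window(readings, low, high):
    """Reduce a window of raw sensor readings to one small summary message.

    Only the summary would be sent upstream; the raw readings stay on the device.
    """
    if not readings:
        raise ValueError("readings must not be empty")
    return {
        "count": len(readings),
        "mean": round(mean(readings), 2),
        "min": min(readings),
        "max": max(readings),
        # Readings outside the expected range are forwarded in full.
        "out_of_range": [r for r in readings if r < low or r > high],
    }


# Hypothetical temperature readings collected locally over one time window.
window = [21.0, 21.4, 20.9, 35.2, 21.1, 21.3]
summary = summarize_window(window, low=15.0, high=30.0)
print(summary)
```

In a real deployment the window could hold thousands of readings, so a fixed-size summary is where the bandwidth saving would come from. The tradeoff described above still applies: this logic has to be deployed, updated, and monitored on every device.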

Your move: Identify which operations need instant responses, generate large amounts of data, or must keep information in specific locations. Good examples include factory quality checks that need immediate adjustments, stores that personalize displays for shoppers, or hospitals monitoring patient vital signs.

Figure out if the benefits (faster decisions, lower data transmission costs, and meeting regulatory requirements) are worth the extra work of managing many separate systems. Test edge computing at one or two sites before expanding it across your organization.

7. Quantum computing: From laboratory to limited production

Quantum computing has generated excitement and skepticism in equal measure. In 2026, the technology remains mainly experimental, but recent breakthroughs are moving it from pure research toward limited practical applications. The quantum computing market will grow from $1.79B in 2025 to $7.08B by 2030 at a 31.6% CAGR [7]. This represents progress, but the market remains small compared to other computing markets, reflecting quantum's early stage and narrow applicability.

Real-world applications: One of the promising technology trends in 2026, quantum computing won't replace conventional computers for most tasks. Its value lies in specific problem types where quantum mechanics provides advantages. Financial institutions explore quantum algorithms for portfolio optimization and risk modeling that consider more variables than classical computers can process efficiently. Pharmaceutical companies investigate using quantum systems to simulate molecular interactions for drug discovery, potentially identifying candidates that conventional systems would miss. Logistics companies test quantum approaches to route optimization problems with massive solution spaces. In 2026, organizations are moving from pure research to testing quantum systems on real business problems, but most applications remain experimental.

Your move: Most organizations should monitor quantum developments and build relationships with quantum computing providers rather than committing resources immediately. For large finance, pharmaceuticals, or logistics enterprises, consider small pilot projects to build quantum expertise before the technology matures.

Operational excellence: Automating for scale

As businesses scale AI and digital capabilities, operational complexity grows exponentially. Manual processes that worked for dozens of applications break down with hundreds. IT teams struggle to manage infrastructure sprawl. Development cycles slow as coordination overhead increases. This section covers two technology trends in 2026 that help organizations maintain velocity as they scale: hyperautomation for end-to-end workflows and AIOps for autonomous IT operations.

8. Hyperautomation: Beyond task automation

Traditional automation targets individual tasks: processing an invoice, sending an email, updating a database record. Hyperautomation connects these tasks into complete workflows that run end-to-end without human handoffs. The hyperautomation market will expand from $46.4B in 2024 to $270.63B by 2034 at 17% annual growth [8]. This reflects businesses moving from automating individual steps to automating entire processes.

Business application: Hyperautomation combines robotic process automation (RPA), AI, and analytics to handle complete workflows. An insurance claim doesn't just get automatically entered into a system; it gets validated, routed, assessed, approved or escalated, and paid without human touch except for exceptions. A supply chain process doesn't just track inventory; it predicts demand, adjusts orders, coordinates logistics, and manages exceptions autonomously. Hyperautomation requires thinking in workflows, not tasks. According to a McKinsey study, rule-based task automation drives 30-200% ROI in one year.
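The insurance claim example can be sketched as a single function that carries a claim through every stage and hands off to a person only for exceptions. This is a simplified illustration: the `Claim` fields, the status names, and the `AUTO_APPROVE_LIMIT` threshold are hypothetical, and a production workflow would call real validation, assessment, and payment systems at each step.

```python
from dataclasses import dataclass, field

AUTO_APPROVE_LIMIT = 1_000.0  # hypothetical threshold for approval without review


@dataclass
class Claim:
    claim_id: str
    policy_active: bool
    documents_complete: bool
    amount: float
    status: str = "received"
    history: list = field(default_factory=list)


def process_claim(claim: Claim) -> Claim:
    """Run a claim end to end; only exceptions are escalated to a human."""

    def move_to(status: str) -> None:
        claim.status = status
        claim.history.append(status)

    # Validate: an inactive policy or missing documents is an exception.
    if not (claim.policy_active and claim.documents_complete):
        move_to("escalated")
        return claim
    move_to("validated")

    # Route and assess (placeholders for calls to real downstream systems).
    move_to("routed")
    move_to("assessed")

    # Approve or escalate: large claims go to a human reviewer.
    if claim.amount > AUTO_APPROVE_LIMIT:
        move_to("escalated")
        return claim
    move_to("approved")
    move_to("paid")
    return claim


small = process_claim(Claim("C-1", True, True, 400.0))
large = process_claim(Claim("C-2", True, True, 25_000.0))
print(small.status, small.history)
print(large.status, large.history)
```

The point of the sketch is the shape rather than the rules: one workflow owns the claim from intake to payment, and the history list gives an audit trail of every stage it passed through.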

Your move: Identify three end-to-end processes where work flows through multiple systems and people spend time on coordination rather than judgment. Map current workflows, including all handoffs, decision points, and exception cases. Calculate the time and cost spent on each process. Pilot hyperautomation on the process with the clearest ROI and least exception complexity, then expand based on results. Organizations lacking automation expertise should partner with providers experienced in process redesign and RPA implementation rather than attempting to build capabilities from scratch.

9. AIOps: Autonomous IT operations

IT operations teams drown in alerts, logs, and incidents. As infrastructure scales, human-only monitoring becomes impossible. AIOps applies AI to detect issues, identify root causes, and remediate problems automatically. Gartner predicts that by 2029, agentic AI will autonomously resolve 80% of common customer service issues without human intervention [9]. This includes IT service desk operations, where AIOps handles incident detection, diagnosis, and resolution with minimal human intervention.

What AIOps delivers: Predictive maintenance that identifies failing components before they cause outages. Automated remediation that fixes common problems without human intervention. Root cause analysis that traces issues across complex distributed systems faster than manual investigation. Capacity planning that predicts resource needs based on usage patterns and business growth.

Business impact: Mean time to resolution (MTTR) drops significantly when systems can detect and fix problems autonomously. Organizations report moving from reactive firefighting to proactive system management, freeing IT staff for strategic projects rather than incident response. AIOps requires clean data: logs, metrics, and traces, structured for AI analysis. Organizations with fragmented monitoring or inconsistent logging struggle to deploy AIOps effectively. This means investing in observability infrastructure before expecting autonomous operations.

Your move: Evaluate AIOps platforms for IT operations, starting with an assessment of current observability maturity. If monitoring data is fragmented across tools or lacks a consistent structure, consolidate and standardize before pursuing AIOps. Consider partnering with a technology provider that has experience integrating AIOps into existing environments and can help navigate the implementation complexity. Measure improvements in MTTR, alert noise reduction, and staff time freed for strategic work.
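As a starting point for the MTTR measurement mentioned above, the sketch below averages the time between detection and resolution across a set of incidents. The incident timestamps are made up for illustration, and the sketch assumes each incident is recorded as a (detected, resolved) pair of timestamps.

```python
from datetime import datetime, timedelta


def mean_time_to_resolution(incidents):
    """Average time between detection and resolution across incidents.

    `incidents` is a list of (detected_at, resolved_at) datetime pairs.
    """
    if not incidents:
        raise ValueError("at least one incident is required")
    durations = [resolved - detected for detected, resolved in incidents]
    return sum(durations, timedelta()) / len(durations)


# Hypothetical incident log: (detected_at, resolved_at).
incidents = [
    (datetime(2026, 1, 5, 9, 0), datetime(2026, 1, 5, 9, 30)),
    (datetime(2026, 1, 6, 14, 0), datetime(2026, 1, 6, 15, 0)),
    (datetime(2026, 1, 7, 22, 15), datetime(2026, 1, 7, 22, 45)),
]

mttr = mean_time_to_resolution(incidents)
print(f"MTTR: {mttr}")
```

Running the same calculation on incidents from before and after an AIOps rollout gives a like-for-like comparison, provided detection and resolution times are logged consistently in both periods.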

Security and trust: Building resilient systems

Traditional perimeter-based security fails as organizations move workloads to cloud environments, connect thousands of IoT devices, and support distributed workforces. The assumption that anything inside your network is trustworthy no longer holds. Among the most transformative technology trends in 2026 are two approaches to building security and trust in distributed, digital-first operations: zero-trust architecture for continuous verification and blockchain for transparent, tamper-proof records.

10. Zero-trust architecture: Verify everything, always

Traditional cybersecurity models assume a network perimeter: firewalls protecting a trusted internal network from untrusted external threats. Zero-trust rejects this assumption. Every access request requires verification, regardless of source. The zero-trust security market will expand from $42.28B in 2025 to $124.5B by 2032 at 16.7% annual growth [10]. This reflects organizations recognizing that perimeter security cannot protect modern distributed operations.
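The principle that every request is verified regardless of source can be illustrated with a minimal access check. In this sketch the request records whether it came from the internal network, but the decision never uses that field. The `AccessRequest` fields, the `PERMISSIONS` set, and the user and resource names are all hypothetical; real zero-trust deployments rely on identity providers, device posture services, and policy engines rather than an in-memory set.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AccessRequest:
    user: str
    resource: str
    mfa_verified: bool
    device_compliant: bool
    from_internal_network: bool  # recorded for logging, never used to grant trust


# Hypothetical least-privilege allow-list of (user, resource) pairs.
PERMISSIONS = {("alice", "payroll-db"), ("bob", "build-server")}


def is_allowed(request: AccessRequest) -> bool:
    """Verify identity, device, and permission on every request.

    Network location is deliberately ignored: being inside the perimeter
    grants nothing on its own.
    """
    return (
        request.mfa_verified
        and request.device_compliant
        and (request.user, request.resource) in PERMISSIONS
    )


inside_without_mfa = AccessRequest("alice", "payroll-db", False, True, True)
outside_verified = AccessRequest("alice", "payroll-db", True, True, False)
print(is_allowed(inside_without_mfa))
print(is_allowed(outside_verified))
```

Under a perimeter model the first request would typically succeed because it originates inside the network. Here it is denied, while the fully verified request from outside is allowed.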

Why now: Three forces drive zero-trust adoption. Remote work eliminated the network perimeter: employees access systems from home networks, coffee shops, and airports. Cloud adoption moved applications and data outside organizational control to third-party infrastructure. IoT proliferation connected thousands of devices: security cameras, sensors, and building systems that lack traditional security controls. Traditional perimeter defense cannot protect this distributed environment.

Business impact: Zero-trust helps prevent costly breaches, which average $4.45M in remediation expenses plus lost business and reputation damage [11]. A properly deployed zero-trust strategy can prevent up to 31% of insured cyber losses annually [12]. Zero-trust also enables business agility: organizations can support remote workforces, adopt cloud services, and connect partners without expanding the attack surface. For regulated industries, continuous verification and access logging simplify SOC 2, HIPAA, and PCI-DSS compliance. Implementation costs range from hundreds of thousands to low millions, while preventing a single major breach can save multiples of that investment.

Your move: Start transitioning from perimeter-based security to zero trust by protecting your critical systems through controlled and continuously verified access. When choosing a cybersecurity partner to help implement these protections, look for firms that understand your business context.

11. Blockchain for enterprise: Transparent, tamper-proof records

Blockchain gained attention through cryptocurrency but offers value beyond digital currency. Its core capability is maintaining transparent, tamper-proof records across distributed systems, which solves real business problems around trust, transparency, and verification. By 2029, the global blockchain market is expected to reach $248.9B, growing at a CAGR of 65.5% [13]. This rapid growth is driven mainly by venture capital firms investing heavily in blockchain startups. At the same time, industries like retail, supply chain management, and banking are adopting blockchain.

What blockchain provides: Distributed ledgers record transactions across multiple parties without a central authority. Once recorded, entries cannot be altered without consensus, creating a tamper-proof history. All participants see the same records, eliminating discrepancies. Smart contracts execute automatically when conditions are met, reducing coordination overhead. Cryptographic verification ensures authenticity without requiring trust in intermediaries.
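The tamper-proof property described above rests on a simple mechanism: each entry stores a cryptographic hash of the previous one, so changing any past record breaks every link after it. The sketch below shows only that hash-chaining idea on a single machine. It does not implement distribution across parties, consensus, or smart contracts, and the batch data is made up for illustration.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def entry_hash(index, data, previous_hash):
    """SHA-256 over the entry's contents, including the previous entry's hash."""
    payload = json.dumps(
        {"index": index, "data": data, "previous_hash": previous_hash},
        sort_keys=True,
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_entry(chain, data):
    """Add a record that is linked to the entry before it."""
    previous_hash = chain[-1]["hash"] if chain else GENESIS_HASH
    index = len(chain)
    chain.append({
        "index": index,
        "data": data,
        "previous_hash": previous_hash,
        "hash": entry_hash(index, data, previous_hash),
    })


def is_valid(chain):
    """Recompute every hash and check that each entry links to the one before."""
    expected_previous = GENESIS_HASH
    for entry in chain:
        if entry["previous_hash"] != expected_previous:
            return False
        recomputed = entry_hash(entry["index"], entry["data"], entry["previous_hash"])
        if entry["hash"] != recomputed:
            return False
        expected_previous = entry["hash"]
    return True


ledger = []
append_entry(ledger, {"batch": "A-100", "event": "shipped"})
append_entry(ledger, {"batch": "A-100", "event": "received"})
valid_before = is_valid(ledger)

ledger[0]["data"]["event"] = "recalled"  # tamper with an earlier record
valid_after = is_valid(ledger)
print(valid_before, valid_after)
```

On its own, a hash chain makes tampering detectable rather than impossible, since whoever holds the only copy could recompute every hash. The distributed copies and consensus rules described above are what prevent a single party from rewriting history unnoticed.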

Business impact: Blockchain helps track products from origin to customer, making it easier to verify authenticity, ensure ethical sourcing, and quickly pinpoint affected batches if problems arise. It also gives people control over their personal information, letting them share verified credentials safely while reducing the risk of identity fraud. In finance, blockchain enables faster, cheaper, and more transparent cross-border payments, helping banks and companies cut costs and speed up international transactions.

Your move: It's more practical for most enterprises to partner with existing blockchain platforms rather than building from scratch, unless your project requires a fully custom solution. Focus on areas where blockchain adds clear business value, such as supply chain transparency, secure digital identities, or cross-border payments.

Sustainability and connectivity: Enabling the future responsibly

One of the key technology trends in 2026 impacting businesses is the growing challenge of balancing increasing energy consumption against the need for robust, always-on connectivity. Data centers, which power services like AI and cloud computing, use a lot of energy, making energy efficiency a top priority. At the same time, 5G and 6G networks enable faster, more real-time business operations and connected devices, helping businesses become more efficient while cutting down on physical infrastructure.

12. Sustainability as an infrastructure requirement

The combination of sustainability and advanced connectivity is changing how businesses operate. Data centers now consume significant energy, and companies must focus on using energy more efficiently to meet sustainability goals.

Business impact: 5G and 6G networks allow businesses to run real-time operations, deploy many devices, and reduce their environmental impact by reducing the need for traditional physical infrastructure. By adopting sustainable practices and advanced connectivity, businesses can gain a competitive edge, boost innovation, and appeal to customers and investors who value eco-friendly operations.

Your move: Integrate sustainability and connectivity into your strategy. First, consider how 5G and 6G can improve your operations and help you reduce energy use. Invest in energy-efficient technologies that run on renewable energy and look for ways to make your data centers more efficient. Start measuring your energy consumption and set sustainability goals to track your progress. By addressing these issues now, you can cut costs, increase efficiency, and improve your business's reputation for being environmentally responsible.

Turn strategy into action: Build your 2026 technology roadmap

Technology decisions made in 2026 determine competitive positioning through 2028. Agentic AI early adopters gain 2-3 year leads as their systems learn from domain-specific deployments. Companies delaying zero-trust accumulate breach risk daily as attack surfaces expand. Organizations treating sustainability as a compliance checkbox rather than an operational constraint will be unable to scale AI or win enterprise contracts requiring verified environmental credentials.

The gap between operationally mature organizations and those relying on manual processes, legacy security, and fragmented tools widens each quarter. Compounding advantages from automated workflows, autonomous IT operations, and developer productivity platforms create a separation that becomes nearly impossible to close. Understanding and acting on technology trends in 2026 is about securing a competitive position before these capabilities become universally expected.

Sources:

[1] Gartner. (2025, March 5). Gartner predicts agentic AI will autonomously resolve 80% of common customer service issues without human intervention by 2029.

[2] McKinsey & Company. (2024, December 9). Extracting value from AI in banking: Rewiring the enterprise.

[3] European Parliament and Council of the European Union. (2024, July 12). Regulation (EU) 2024/1689 laying down harmonised rules on artificial intelligence (Artificial Intelligence Act). Official Journal of the European Union.

[4] Statista Market Insights. (2025, March). Generative AI market size.

[5] Erolin, J. (2024, July 25). 72% of software engineers are now using GenAI, boosting productivity. BairesDev.

[6] Precedence Research. (2025, August 21). Edge computing market driving real-time data processing and IoT growth.

[7] Research and Markets. (2024, December). Quantum computing market - Forecasts from 2025 to 2030.

[8] Global Market Insights. (2025, May). Hyper automation market - By technology, by deployment, by solution, by end use, by organization size, growth forecast, 2025-2034.

[9] Gartner. (2025, March 5). Gartner predicts agentic AI will autonomously resolve 80% of common customer service issues without human intervention by 2029.

[10] Research and Markets. (2025, May). Zero trust network access market report 2025.

[11] IBM. (2025). Cost of a data breach report 2025.

[12] Zscaler. (2025). How much cyber loss can be prevented by using zero trust solutions?

[13] MarketsandMarkets. (2025). Blockchain market. Global forecast to 2030.
