Most engineering leaders no longer question the value of software testing. What has changed is how testing must be approached, as software development itself has evolved.

Modern systems are more distributed, releases are more frequent, and delivery pipelines are more automated than even a few years ago. In this context, testing practices that worked for monolithic applications and quarterly releases no longer provide sufficient control. Given these changes, what do software testing best practices look like in 2026?

This article is a navigation guide for CTOs, VPs of Engineering, Heads of QA, product and platform engineering leaders, and architects responsible for quality at scale. It focuses on current software testing best practices, based on the realities of cloud-native architectures, CI/CD pipelines, platform engineering, AI-assisted development, and other factors. The article also explores principles, priorities, maturity levels, and organizational considerations that influence testing in real-world engineering environments. Let's dive in.

What software testing best practices mean in 2026

In 2026, the best software testing practices represent a shift from defect detection alone to a broader focus on risk control and system reliability. The goal is not to prove that software works in ideal conditions, but to understand how it behaves under real-world scenarios and where it is most likely to fail. Testing becomes a mechanism for protecting system reliability rather than a gatekeeping function at the end of development.

Modern testing is also a continuous process, embedded throughout the lifecycle. It influences architecture decisions and relies heavily on feedback from production systems. Testing is no longer confined to a single phase or team. It is an integral part of how software is designed, built, released, and operated. Here are a few principles that the best QA practices in software testing should rely on.


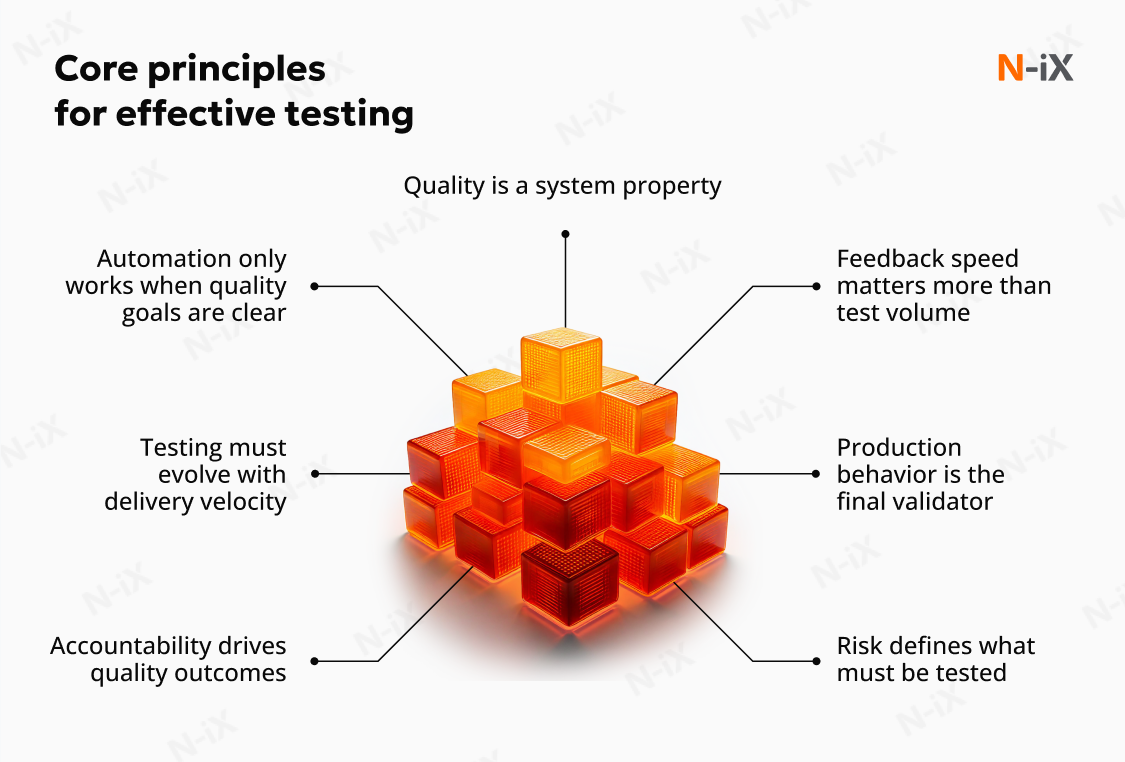

The core principles behind effective software testing

As software systems scale, testing extends beyond tactical execution and becomes a key input into governance and engineering decision-making. Effective software testing best practices for 2026 are guided by a small set of principles that shape how teams design systems, prioritize effort, and manage risk across the delivery lifecycle.

Quality is a system property

Software quality does not emerge solely from testing. It results from architecture, design decisions, deployment models, and operational practices. Testing can only reveal the quality characteristics already embedded in the system. For leaders, this principle reinforces that investment in testability, observability, and modular design directly determines testing effectiveness.

Risk defines what must be tested

Testing effort should be proportional to business and operational risk. Not all failures have equal impact, and not all parts of the system deserve the same level of scrutiny. This principle shifts testing from coverage-driven activity to risk-informed decision-making, enabling teams to focus validation where failure would be most costly.

Feedback speed matters more than test volume

The value of testing lies in how quickly it informs decisions. Fast, reliable feedback allows teams to correct course early and release with confidence. Large, slow-running test suites may increase apparent coverage but often delay learning. Effective testing prioritizes feedback loops that keep pace with delivery.

Automation only works when quality goals are clear

Automation delivers value only when it is driven by clear quality objectives, not by coverage targets or tooling adoption. Without defined goals, automated tests amplify noise and maintenance overhead instead of reducing risk. When automation is aligned with explicit reliability, performance, or compliance goals, it becomes a scalable control rather than a liability.

Production behavior is the final validator

No pre-release testing strategy can fully predict how a system behaves under real load and real user behavior. Where production validation is feasible, production environments provide the most accurate signal of system health and risk. Effective testing strategies treat production telemetry, incidents, and user impact as critical inputs into future validation decisions.

Testing must evolve with delivery velocity

As release frequency increases, testing approaches must adapt. Practices that work for infrequent releases often collapse under continuous delivery. This principle ensures that the testing strategy remains aligned with the frequency of change, preventing quality controls from becoming bottlenecks or being bypassed entirely.

Accountability drives quality outcomes

Quality outcomes depend on clear ownership of testing decisions and results across engineering teams. When accountability is diffused or pushed to a single function, defects persist and systemic issues remain unresolved. Shared responsibility, supported by QA and platform teams, ensures that quality improvements are sustained rather than reactive.

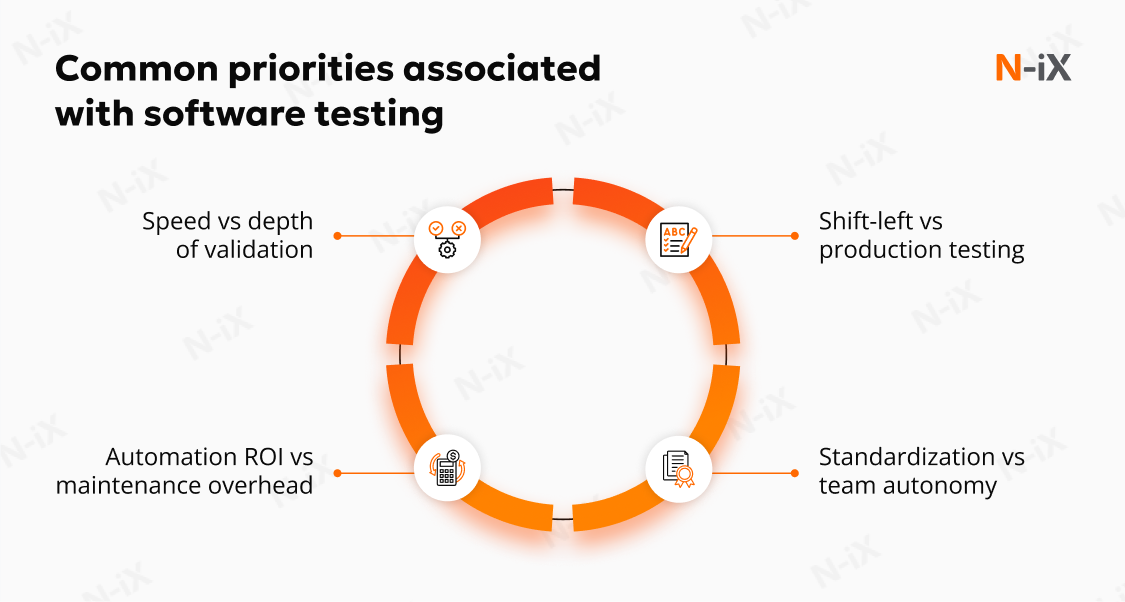

Top priorities engineering leaders must consider to design quality assurance practices in software testing

As software delivery scales, testing decisions become a series of priorities and trade-offs rather than binary choices. Each decision reflects a trade-off among speed, cost, risk, and long-term sustainability. Modern software testing standards and best practices do not eliminate these tensions. They make them explicit and manageable in ways that protect the end product and the business. Let's review a few common priorities associated with software testing.

Speed vs depth of validation

Faster delivery increases the need for rapid feedback, but deeper validation often requires more time. This concern becomes visible when teams push for shorter release cycles while maintaining confidence in complex systems. According to DORA research, high-performing teams achieve both speed and stability by investing in fast, automated validation for everyday changes while reserving deeper testing for high-risk scenarios.

N-iX approach: Separate fast, continuous validation from targeted, risk-driven testing. The goal is not to test less, but to apply depth where failure would be costly and speed where feedback matters most.

Automation ROI vs maintenance overhead

Automation promises scalability, but it also introduces long-term maintenance costs. Test suites that grow without clear intent often become brittle, slow, and expensive to maintain. McKinsey has noted that automation initiatives frequently underdeliver when ongoing ownership and upkeep are underestimated.

N-iX approach: Treat test automation as a portfolio investment. Automated tests are justified by risk reduction and reuse, not by coverage targets. Low-value or high-maintenance tests are retired, keeping automation aligned with system evolution.

Shift-left vs production testing

Early testing reduces rework and catches issues before release, but no pre-production environment can fully represent production behavior. This tension becomes evident in distributed systems, where real load, data, and usage patterns are hard to simulate.

N-iX approach: Combine shift-left practices with production validation. Pre-release testing manages known risks, while production telemetry, canary releases, and controlled rollouts validate assumptions in real conditions. The value lies in using both approaches as complementary controls.

Standardization vs team autonomy

Standardization improves consistency and governance, but excessive rigidity can slow teams and reduce ownership. This question often surfaces when platform teams introduce shared testing frameworks or quality gates across diverse products.

N-iX approach: Standardize outcomes and interfaces, not implementation details. Teams should have autonomy in meeting quality expectations while also using shared platforms with common tooling, metrics, and guardrails. This approach preserves velocity without fragmenting quality practices.

Software testing best practices checklist for smooth SDLC

This checklist reflects how high-performing engineering organizations approach testing across the entire software development lifecycle (SDLC). It focuses on practices that scale with system complexity and delivery velocity, rather than isolated testing activities.

Define testability and quality criteria during design

Testing outcomes are largely determined by architectural and design decisions. Systems designed with clear interfaces, observability, and isolation are easier to validate and safer to change. Addressing testability early reduces reliance on fragile end-to-end tests later in the lifecycle.

Why it matters: Early quality decisions lower long-term testing costs and reduce delivery risk.

Typical QA and validation activities: Design reviews, testability analysis, architectural validation.

Prioritize testing based on business and operational risk

Not all functionality requires the same level of validation. Effective teams focus testing effort on workflows that impact revenue, compliance, security, or customer trust. Risk-based prioritization keeps testing aligned with business value rather than coverage targets.

Why it matters: Testing effort is finite, and misallocation leads to blind spots where failures are most costly.

Typical testing types: Risk-based testing, integration testing, scenario testing.
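One way to make risk-based prioritization concrete is a simple likelihood × impact score. The sketch below is illustrative only: the workflow names, the 1-5 scales, and the threshold of 12 are all hypothetical, and real inputs would come from incident history and business analysis rather than hard-coded values.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Workflow:
    name: str
    failure_likelihood: int  # 1 (rare) to 5 (frequent); hypothetical scale
    business_impact: int     # 1 (minor) to 5 (severe); hypothetical scale

    @property
    def risk_score(self) -> int:
        # Classic risk matrix: likelihood multiplied by impact.
        return self.failure_likelihood * self.business_impact


def prioritize(workflows: list[Workflow]) -> list[Workflow]:
    """Return workflows ordered from highest to lowest risk score."""
    return sorted(workflows, key=lambda w: w.risk_score, reverse=True)


def validation_depth(workflow: Workflow, deep_threshold: int = 12) -> str:
    """Map a risk score to a validation tier (threshold is an assumption)."""
    return "deep" if workflow.risk_score >= deep_threshold else "fast"


# Hypothetical workflows, used only to illustrate the ranking.
WORKFLOWS = [
    Workflow("checkout_payment", failure_likelihood=3, business_impact=5),
    Workflow("profile_avatar_upload", failure_likelihood=2, business_impact=1),
    Workflow("invoice_export", failure_likelihood=4, business_impact=3),
]

if __name__ == "__main__":
    for wf in prioritize(WORKFLOWS):
        print(wf.name, wf.risk_score, validation_depth(wf))
```

With scores like these, the payment and invoicing workflows would receive deeper validation, while the avatar upload would rely on fast checks only.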

Maintain clear and actionable testing documentation

Testing documentation provides a shared understanding of what is tested, why it is tested, and how quality decisions are made. In modern environments, documentation should be lightweight, up to date, and closely aligned with system risk and architecture. It supports continuity as teams scale, change, or onboard new engineers. Well-maintained documentation also makes the testing strategy transparent to leadership and auditors.

Why it matters: Without clear documentation, testing knowledge becomes tribal and fragile. This increases delivery risk, slows decision-making, and complicates compliance and incident analysis.

Typical documents: Test strategy and plan; scope files; risk assessment and coverage maps; acceptance criteria and test charters; release quality reports.

Validate logic early with fast, reliable tests

Early-stage tests should provide rapid feedback on correctness and regressions. These tests run frequently and block faulty changes before they propagate downstream. Speed and reliability are more important than depth at this stage.

Why it matters: Fast feedback reduces rework and prevents defects from reaching later stages of the SDLC.

Typical testing types: Unit testing, component testing.

Protect system boundaries with integration and contract tests

As systems become more distributed, failures often occur at integration points. In service-based or API-driven architectures, contract testing validates assumptions between services without requiring full end-to-end environments. This approach scales better than relying solely on large integration suites.

Why it matters: Stable integrations reduce coordination overhead and deployment risk in microservices architectures.

Typical testing types: Integration testing, API testing, contract testing.
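To illustrate the idea behind contract testing, here is a minimal sketch that uses only the Python standard library. The `ORDER_CONTRACT` fields and the sample payload are hypothetical and not taken from any real API; dedicated contract-testing tools offer far more than this, but the principle is the same: the consumer states what it relies on, and the provider is checked against that statement without a full end-to-end environment.

```python
# Hypothetical contract: the fields (and types) a consumer relies on
# in an "order" response.
ORDER_CONTRACT = {
    "order_id": str,
    "total_cents": int,
    "currency": str,
}


def contract_violations(response: dict, contract: dict) -> list[str]:
    """List every way the response breaks the consumer's expectations.

    Extra fields in the response are tolerated: the consumer only cares
    about the fields it actually reads.
    """
    violations = []
    for field, expected_type in contract.items():
        if field not in response:
            violations.append(f"missing field: {field}")
        elif not isinstance(response[field], expected_type):
            actual = type(response[field]).__name__
            violations.append(
                f"wrong type for {field}: "
                f"expected {expected_type.__name__}, got {actual}"
            )
    return violations


def test_provider_honours_order_contract():
    # In a real suite this payload would come from the provider's handler
    # or a recorded response; here it is a hard-coded example.
    provider_response = {
        "order_id": "A-1001",
        "total_cents": 2599,
        "currency": "EUR",
        "note": "gift",  # extra field, ignored by the contract
    }
    assert contract_violations(provider_response, ORDER_CONTRACT) == []
```

Running a check like this in the provider's pipeline would flag a renamed or retyped field before the change reaches any consumer.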

Automate critical paths and regressions with clear ownership

According to The 2025 State of Testing™ Report, over 20% of respondents have replaced 75% of previously manual testing with automated testing in 2025 [1]. The study also mentions two larger groups (29% and 26%) that now have over 25% and 50% of their testing processes automated, respectively.

Automation delivers value when it consistently protects critical workflows and system boundaries. Tests must be owned, maintained, and trusted by engineering teams. Automation without ownership quickly becomes brittle and ignored.

Why it matters: Well-governed automation supports frequent releases without slowing pipelines.

Typical testing types: Automated regression testing, CI/CD pipeline testing.

Keep CI/CD pipelines fast, reliable, and meaningful

Testing in pipelines should balance coverage with execution time. Slow or flaky pipelines erode confidence and encourage bypassing quality controls. Tests that no longer provide actionable signals should be refactored or removed.

Why it matters: Pipeline trust directly affects release discipline and quality outcomes.

Typical testing types: Continuous testing, smoke testing, pipeline validation tests.
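Flaky tests are one of the more measurable causes of pipeline distrust. As a rough sketch, a test can be flagged as flaky when it both passed and failed on the same commit, since the code did not change between runs. The run history below is invented for illustration; in practice it would be exported from the CI system.

```python
from collections import defaultdict

# Hypothetical CI history: (test name, commit SHA, passed?)
RUNS = [
    ("test_login", "abc123", True),
    ("test_login", "abc123", True),
    ("test_checkout", "abc123", True),
    ("test_checkout", "abc123", False),  # same commit, different outcome
    ("test_search", "abc123", False),
    ("test_search", "def456", True),     # outcome changed along with the code
]


def find_flaky_tests(runs):
    """Return names of tests that both passed and failed on one commit."""
    outcomes = defaultdict(set)
    for test_name, commit, passed in runs:
        outcomes[(test_name, commit)].add(passed)
    # A set holding both True and False means inconsistent results.
    flaky = {name for (name, _), seen in outcomes.items() if len(seen) == 2}
    return sorted(flaky)


if __name__ == "__main__":
    print(find_flaky_tests(RUNS))
```

Tests identified this way are candidates for quarantine, refactoring, or removal, in line with the point above about tests that no longer provide actionable signals.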

Validate non-functional requirements continuously

Performance, reliability, and security issues often emerge under real-world conditions. Testing non-functional requirements should not be limited to pre-release phases. Continuous validation helps teams detect degradation early.

Why it matters: Non-functional failures frequently have a higher business impact than functional defects.

Typical testing types: Performance, security, and resilience testing.

Use production signals to refine the testing strategy

Production behavior reveals failure modes that pre-release testing cannot fully predict. Logs, metrics, traces, and incidents should feed directly into test planning and coverage decisions. This closes the learning loop between delivery and operations.

Why it matters: Production-informed testing improves reliability over time and reduces repeat incidents.

Typical testing types: Monitoring-based validation, canary testing, post-release testing.
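A canary check can be reduced to a comparison of error rates between the current release and the canary. The sketch below is a simplified illustration: the function name, the 1.5x tolerance, the absolute floor, and the minimum sample size are all assumptions, and a production-grade gate would typically also look at latency, saturation, and statistical significance.

```python
def canary_decision(
    baseline_errors: int,
    baseline_requests: int,
    canary_errors: int,
    canary_requests: int,
    max_ratio: float = 1.5,      # assumed tolerance relative to baseline
    error_floor: float = 0.001,  # assumed absolute rate that is always acceptable
    min_requests: int = 500,     # assumed minimum canary traffic before deciding
) -> str:
    """Return 'promote', 'rollback', or 'wait' for a canary release."""
    if baseline_requests == 0 or canary_requests < min_requests:
        return "wait"  # not enough traffic to judge either way

    baseline_rate = baseline_errors / baseline_requests
    canary_rate = canary_errors / canary_requests

    # The floor keeps a near-zero baseline from making any single
    # canary error look like a regression.
    allowed_rate = max(baseline_rate * max_ratio, error_floor)
    return "promote" if canary_rate <= allowed_rate else "rollback"


if __name__ == "__main__":
    print(canary_decision(50, 10_000, 3, 1_000))
    print(canary_decision(50, 10_000, 20, 1_000))
    print(canary_decision(50, 10_000, 1, 100))
```

The "wait" outcome matters as much as the other two: deciding on too little traffic is a common way for controlled rollouts to produce false confidence.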

Review outcomes and improve testing continuously

Testing practices must evolve alongside systems and teams. Regular reviews of escaped defects, incident patterns, and testing effectiveness help teams adjust strategy. Improvement should be data-driven rather than reactive.

Why it matters: Continuous improvement prevents testing from becoming outdated or misaligned with system risk.

Typical activities: Retrospective analysis, defect trend analysis, test effectiveness reviews.

Use testing metrics that support leadership decisions

Testing metrics should help leaders understand risk, stability, and delivery health, not just testing activity. Metrics such as test counts and raw coverage often create false confidence because they say little about whether critical failures are being prevented. In contrast, outcome-oriented metrics show how effectively testing controls risk in real delivery conditions. Testing data should guide investment decisions by revealing where risk is increasing and where existing controls are working.

Why it matters: Leaders rely on metrics to decide where to invest in testing, architecture, or tooling. Misleading metrics result in over-testing low-risk areas while critical gaps remain unaddressed.

Typical metrics: Defect escape rate; change failure rate; mean time to detect (MTTD); mean time to recovery (MTTR).
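The metrics listed above can be computed from fairly simple records. The sketch below uses commonly cited definitions, stated here as assumptions: defect escape rate as production defects divided by all defects found, change failure rate as failed deployments divided by total deployments, and MTTD and MTTR both measured from the start of the incident. Some teams measure MTTR from detection instead. The incident data is invented for illustration.

```python
from datetime import datetime
from statistics import mean


def defect_escape_rate(found_in_production: int, found_before_release: int) -> float:
    """Share of all known defects that were first found in production."""
    total = found_in_production + found_before_release
    return found_in_production / total if total else 0.0


def change_failure_rate(failed_deployments: int, total_deployments: int) -> float:
    """Share of deployments that led to a failure needing remediation."""
    return failed_deployments / total_deployments if total_deployments else 0.0


def mean_minutes(pairs) -> float:
    """Mean gap in minutes between (start, end) datetime pairs.

    Raises statistics.StatisticsError if no pairs are given.
    """
    return mean((end - start).total_seconds() / 60 for start, end in pairs)


# Hypothetical incident log.
INCIDENTS = [
    {
        "started": datetime(2026, 1, 5, 10, 0),
        "detected": datetime(2026, 1, 5, 10, 12),
        "resolved": datetime(2026, 1, 5, 11, 0),
    },
    {
        "started": datetime(2026, 1, 9, 14, 0),
        "detected": datetime(2026, 1, 9, 14, 4),
        "resolved": datetime(2026, 1, 9, 14, 30),
    },
]

mttd_minutes = mean_minutes((i["started"], i["detected"]) for i in INCIDENTS)
mttr_minutes = mean_minutes((i["started"], i["resolved"]) for i in INCIDENTS)

if __name__ == "__main__":
    print(defect_escape_rate(3, 27), change_failure_rate(2, 40))
    print(mttd_minutes, mttr_minutes)
```

Whichever definitions a team adopts, tracking them consistently over time is more informative than any single value.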

Pay attention to the warning signs that testing will not scale

Testing misalignment rarely appears suddenly. It emerges through gradual signals such as more frequent release freezes, slower CI/CD pipelines despite growing test suites, and rising production incidents. Over time, teams become hesitant to modify systems they do not trust, increasing the cost of change.

Why it matters: These signals indicate that testing practices no longer match system complexity or delivery expectations. Ignoring them leads to reactive controls, delayed releases, and growing operational risk.

Typical factors to consider: Release stabilization periods; pipeline execution time trends; recurring incident patterns; engineering effort required to make changes.

How should you incorporate AI into software testing practices?

AI assists testing through test planning, generation, prioritization, and analysis. These capabilities can improve efficiency and highlight patterns that humans might miss, especially in large test suites. According to the 2025 State of Testing™ Report, respondents use AI tools for:

- Test creation (41%)

- Test planning (20%)

- Test reporting and insights (19%)

- Test data management (18%)

- Test case optimization (17%).

The same report also states that 46% of respondents do not include AI tools among their software testing practices [1]. These figures suggest that testers and QA specialists adopt AI tools selectively, where AI offers the greatest leverage and where risk can be contained through human review and governance.

While helpful, AI can introduce risks of false confidence and opaque logic. Automatically generated tests may appear comprehensive yet miss critical scenarios. Without transparency and human review, teams may struggle to understand why certain risks are considered covered.

Strong governance and human oversight remain essential. AI should inform testing decisions, not replace accountability.

Common pitfalls that undermine testing effectiveness

Many testing problems are not caused by a lack of effort or tooling, but by structural decisions that disconnect testing from how software is actually built and operated.

Treating testing as a discrete phase isolates it from design and production feedback. When testing starts only after implementation, architectural flaws and ambiguous requirements surface too late, forcing teams to rely on heavy regression cycles or release freezes to compensate. This often results in slower delivery and repeated rework rather than higher quality.

Over-automation without a clear strategy creates hidden maintenance costs. Teams automate broadly to increase coverage but end up with slow, brittle test suites that break with every change. Instead of enabling faster releases, automation becomes a bottleneck that engineers bypass under pressure, undermining trust in quality controls.

Siloed QA and engineering teams weaken ownership and accountability. When quality is seen as the responsibility of a separate function, engineers focus on feature delivery, while QA teams struggle to keep up with the system's complexity. This division leads to late defect discovery and recurring issues that no single team feels responsible for fixing permanently.

Ignoring production signals leaves critical risks unaddressed. Real failures often emerge from data volume, usage patterns, or integration behavior that pre-release testing cannot fully simulate. When incidents and monitoring insights are not fed back into the testing strategy, the same classes of issues continue to reappear.

Avoiding these pitfalls requires technical discipline, such as designing for testability and maintaining meaningful automation. Simultaneously, it calls for organizational discipline, including shared ownership, feedback loops, and leadership commitment to treating quality as a system-level responsibility. And if your internal team lacks the resources to provide both, then outsourcing QA and software testing to a reliable tech partner like N-iX may be one of the best approaches to solution testing. Here's how we can help.


How N-iX's best practices for software testing can ensure your solution's performance

Software testing delivers real value when it is embedded into the way software is designed, built, and released. At N-iX, testing is integrated into the development lifecycle from the start, ensuring that quality, performance, and scalability are addressed alongside functional requirements. This approach reduces late-stage rework, improves delivery predictability, and helps teams maintain stable systems as complexity increases.

N-iX applies software testing best practices in close alignment with modern software development practices, including CI/CD automation, cloud-native delivery, and platform engineering. QA teams work alongside developers, DevOps, and architects, combining manual, automated, performance, and security testing into unified workflows. Backed by over 23 years of software engineering expertise across fintech, retail, telecom, manufacturing, IoT, and other industries, N-iX delivers testing strategies that are risk-aware, automation-driven, and grounded in real operational requirements.

Testing strategies are tailored to system risk and business priorities, with automation focused on critical paths and continuous feedback loops shaping further improvements. As a result, clients benefit from faster releases, reliable performance under real-world conditions, and testing processes that scale with their products.

Here are a few recent projects where N-iX testing experts ensured solution quality for leaders across various industries.

N-iX case study 1: Applying software testing best practices to support reliability at scale

Our testing experts supported Cleverbridge, a Germany-based ecommerce solution company, with structured software QA and testing throughout the migration from a legacy desktop application to a modern web platform. Testing was embedded into iterative development cycles, combining user testing, feature validation workshops, and beta testing with real clients to ensure quality at every release stage. This approach reduced release risk, improved cross-platform reliability, and enabled Cleverbridge to deliver a stable, user-friendly product that expanded its market reach beyond Windows users.

N-iX case study 2: Software testing best practices in embedded and mobile systems

N-iX took end-to-end ownership of software testing for a new smartwatch product and its companion Android and iOS applications by a Swiss manufacturer. Our team implemented behavior-driven testing, automated firmware, mobile, and UI validation, and integrated computer vision-based checks to replace manual verification. This approach significantly reduced regression testing time (from 4 days to 2 hours), improved cross-device reliability, and ensured a smooth firmware update experience for end users across platforms.

N-iX case study 3: Software testing approach to global supply chain systems

Our client, a global fashion retailer, needed to unify its logistics, supply chain, and materials management on a single modern platform. The N-iX team of software QA and testing professionals established a structured software testing approach from the ground up while developing core modules of a global supply chain management platform. We implemented extensive unit and integration testing across web and mobile components to ensure reliability, scalability, and data accuracy from the early stages of development. As a result, the client launched a stable, high-coverage platform capable of supporting thousands of users and suppliers while reducing operational risk during rapid scale-up.

Key takeaways

Software testing best practices in 2026 are no longer about doing more testing. They are about testing with purpose: focusing effort where failure carries real business, operational, or compliance risk. Quality is shaped by architecture, delivery pipelines, and ownership models long before a single test is executed.

Testing that scales is embedded into the software development lifecycle, not added at the end. It evolves with release velocity, relies on fast and reliable feedback, and uses production signals to validate assumptions that pre-release testing cannot fully predict. Automation plays a central role, but only when it is applied deliberately, owned by engineering teams, and aligned with long-term maintainability.

Sustainable quality also depends on visibility and discipline. Outcome-driven metrics reveal whether testing actually reduces risk, while early warning signs indicate when practices no longer align with system complexity. Organizations that recognize these signals early avoid release freezes, rising change costs, and recurring incidents.

This is where experienced QA and testing support makes a difference. N-iX helps teams turn principles into working software testing best practices, designing testing strategies, automation frameworks, and delivery-integrated testing processes that strengthen reliability and support innovation.

Sources:

- [1] The 2025 State of Testing™ Report, PractiTest

FAQ

What are software testing best practices?

Software testing best practices are proven approaches that help teams deliver reliable, secure, and scalable software. They cover areas such as risk-based prioritization, test automation, CI/CD integration, non-functional validation, and the use of production feedback to reduce risk and improve predictability.

What are the 4 stages of testing?

The four stages of testing are unit testing, integration testing, system testing, and acceptance testing. Together, they validate software progressively from individual components to full system behavior. This progression helps ensure that the software works properly and meets business requirements before release.

What are the 7 steps of software testing?

The seven steps of software testing typically include requirement analysis, test planning, test case design, test environment setup, test execution, defect tracking and retesting, and test closure. Together, these steps ensure testing is structured, traceable, and aligned with quality goals throughout the software development lifecycle.