The global market for sensor fusion in robotics is expected to more than double over the next four years [1]. This rapid growth reflects rising demand for robotics and innovations that drive efficiency in automation, unlock new product capabilities, and enable smarter, real-time decision-making across industries. But what exactly is sensor fusion, and who stands to benefit most from it?

Sensor fusion integrates data from multiple sensors to produce more accurate, reliable, and comprehensive information than any individual sensor can provide alone. Modern robots use a variety of sensors: cameras, LiDAR, ultrasonic sensors, inertial measurement units (IMUs), and more. By fusing their outputs, robots gather the context they need to make better decisions, which makes applications safer and more responsive. Processing multiple sensor streams simultaneously lets robots perform complex tasks such as autonomous vehicle navigation, industrial equipment operation, and movement through challenging environments.

Let’s explore this technology in detail and discover the real-world applications of sensor fusion in robotics.

What is sensor fusion in robotics?

Single-sensor systems have inherent limitations. Cameras, for example, struggle in low-light or high-glare environments, which compromises visual recognition. LiDAR offers precise distance measurement but performs poorly in heavy rain, fog, or dust. Sensor fusion addresses these weaknesses by letting one sensor compensate for another.

In short, sensor fusion is about combining data from multiple sensors to achieve more accurate, reliable, and comprehensive information than individual sensors provide.

Specific combinations of sensors create powerful systems that allow robots to operate autonomously and efficiently in complex environments. This integration delivers three key business benefits:

- Greater precision in navigation and task execution, reducing operational errors

- Enhanced reliability through redundancy, lowering the risk of system failure

- Improved efficiency by streamlining data processing and decision-making

But combining sensors is only the beginning. The true value of sensor fusion lies in how the data is processed. Advances in chip-level integration have made fusion readily accessible for narrowly defined sensor configurations, such as inertial modules that combine accelerometer and gyroscope readings on-chip. This does not, however, eliminate the need for flexible, high-level sensor fusion solutions, especially in real-world robotics applications where modularity, customization, and cross-vendor compatibility are essential.

In applications like fleet robotics, agricultural drones, or smart factories, data is generated continuously and at scale, and cloud computing offers the bandwidth and elastic computing power to handle such volumes. Where latency matters, many robotic systems instead rely on on-board system-on-chip (SoC) platforms that integrate CPUs, GPUs, and specialized AI accelerators. These architectures process data in parallel across the sensor fusion pipeline, from initial filtering and calibration to perception modeling and control signals.

To make sense of the data, AI/ML models and other statistical models filter and prioritize relevant signals, align time-stamped data streams, and resolve inconsistencies between sources. AI also enables predictive inference and contextual decision-making.

Let’s explore the three core principles that make sensor fusion a powerful enabler of intelligent, scalable robotics solutions:

Complementarity

As humans, we routinely use multiple senses at once: seeing an object while feeling its texture tells us more than either sense alone. Robots benefit from the same principle. Complementary sensor configurations capture different aspects of the same scene. For example, cameras provide visual data for object recognition, while ultrasonic sensors detect transparent obstacles, such as glass, that cameras miss. Combining these data streams produces more complete environmental models.
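As a minimal sketch of complementarity, assume each sensor reports the distance to the nearest obstacle per direction sector, with `None` meaning "nothing detected". The sector names and the function `fuse_sectors` are hypothetical. A camera may return `None` in front of a glass door while the ultrasonic sensor still reports a short range, so the fused map keeps whichever reading exists and, where both exist, the nearer one as the safer choice.

```python
from typing import Dict, Optional

# Hypothetical sector labels such as "front" or "left"; distances in meters.
Readings = Dict[str, Optional[float]]

def fuse_sectors(camera: Readings, ultrasonic: Readings) -> Readings:
    """Merge per-sector obstacle distances from a camera and an ultrasonic sensor.

    None means the sensor detected nothing in that sector (for a camera, this
    can happen with transparent obstacles such as glass).
    """
    fused: Readings = {}
    for sector in camera.keys() | ultrasonic.keys():
        readings = [d for d in (camera.get(sector), ultrasonic.get(sector)) if d is not None]
        # Each sensor fills the other's blind spots; when both see something,
        # keep the nearer obstacle as the conservative choice.
        fused[sector] = min(readings) if readings else None
    return fused

fused = fuse_sectors({"front": None, "left": 3.0}, {"front": 0.6, "left": None})
```

Taking the minimum is a deliberately conservative rule for obstacle avoidance; a production system would typically also weigh each sensor's confidence.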

Cross-verification

Sensors that measure the same parameter provide independent measurements of the same property, which allows errors to be detected and corrected through cross-checking. This redundancy makes it possible to build fault-tolerant control systems: if one sensor fails or provides faulty data, the system continues operating on the remaining inputs. Such robustness is crucial in safety-critical applications; sensor fusion in robotics has been shown to reduce detection failures by up to 95% in adverse conditions compared to single-sensor systems [2].
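The cross-checking idea can be sketched in a few lines. This example assumes each redundant sensor reports a value together with a variance describing its noise; the function name `cross_check_fuse` and the median-distance rule for flagging a faulty sensor are illustrative choices, not a standard API. Readings far from the median are rejected, and the survivors are combined by inverse-variance weighting, so more precise sensors count more.

```python
import statistics
from typing import List, Tuple

def cross_check_fuse(readings: List[Tuple[float, float]],
                     max_dev: float) -> Tuple[float, List[int]]:
    """Fuse redundant (value, variance) readings of the same quantity.

    Readings farther than max_dev from the median are treated as faulty.
    Returns the fused value and the indices of the rejected readings.
    """
    median = statistics.median([value for value, _ in readings])
    kept: List[Tuple[float, float]] = []
    rejected: List[int] = []
    for i, (value, variance) in enumerate(readings):
        if abs(value - median) <= max_dev:
            kept.append((value, variance))
        else:
            rejected.append(i)
    if not kept:
        raise ValueError("no consistent readings left to fuse")
    # Inverse-variance weighting: lower noise means higher weight.
    weights = [1.0 / variance for _, variance in kept]
    fused = sum(w * value for w, (value, _) in zip(weights, kept)) / sum(weights)
    return fused, rejected
```

For example, with two range sensors reporting about 2.0 m and a third stuck at 5.0 m, `cross_check_fuse([(2.00, 0.01), (2.02, 0.04), (5.0, 0.01)], max_dev=0.5)` rejects index 2 and returns a fused distance of about 2.004 m. Note that a median vote needs at least three redundant sensors to outvote a single faulty one.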

Real-time processing

Not all sensors are the same. Some, like inertial sensors, deliver a steady stream of data many times per second, while others, such as LiDAR or cameras, update less frequently but offer a much richer view of the environment. The challenge in sensor fusion development is combining these streams, which arrive at different rates, into a consistent, time-aligned picture without gaps or delays. If processing isn't fast or precise enough, the robot's performance suffers: reactions are delayed, data is misinterpreted, or the system simply operates less efficiently.
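A common first step in handling mismatched rates is to resample the fast stream at the timestamps of the slow one. The sketch below assumes a hypothetical IMU sampled every 5 ms (200 Hz) and camera frames at 30 Hz, and linearly interpolates the buffered IMU value at each frame's timestamp. The function `interpolate_at` is hypothetical, and linear interpolation is only one option; real pipelines may also need to correct for sensor latency and clock offsets.

```python
from bisect import bisect_left
from typing import List

def interpolate_at(times: List[float], values: List[float], t: float) -> float:
    """Linearly interpolate a buffered, time-sorted sensor stream at time t (seconds)."""
    if not times[0] <= t <= times[-1]:
        raise ValueError("t lies outside the buffered time window")
    i = bisect_left(times, t)  # first index with times[i] >= t
    if times[i] == t:
        return values[i]
    t0, t1 = times[i - 1], times[i]
    v0, v1 = values[i - 1], values[i]
    alpha = (t - t0) / (t1 - t0)  # fractional position between the two samples
    return v0 + alpha * (v1 - v0)

# Hypothetical 200 Hz IMU yaw-rate buffer (rad/s) and 30 Hz camera frame times (s).
imu_times = [k * 0.005 for k in range(21)]  # 0.000 ... 0.100 s
imu_yaw_rate = [0.2 * k for k in range(21)]
camera_times = [0.0, 1 / 30, 2 / 30]
aligned = [interpolate_at(imu_times, imu_yaw_rate, t) for t in camera_times]
```

Each entry of `aligned` is the IMU reading estimated at the exact instant a camera frame was captured, so both streams can be fused on a common time base.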

Real-time processing requirements influence everything from hardware selection to the scalability of the automation strategy. A solution that achieves real-time fusion delivers better safety, higher productivity, and the ability to adapt as conditions change.

Examples of sensor fusion combinations

Some combinations of sensors are especially useful. Here are the most popular examples:

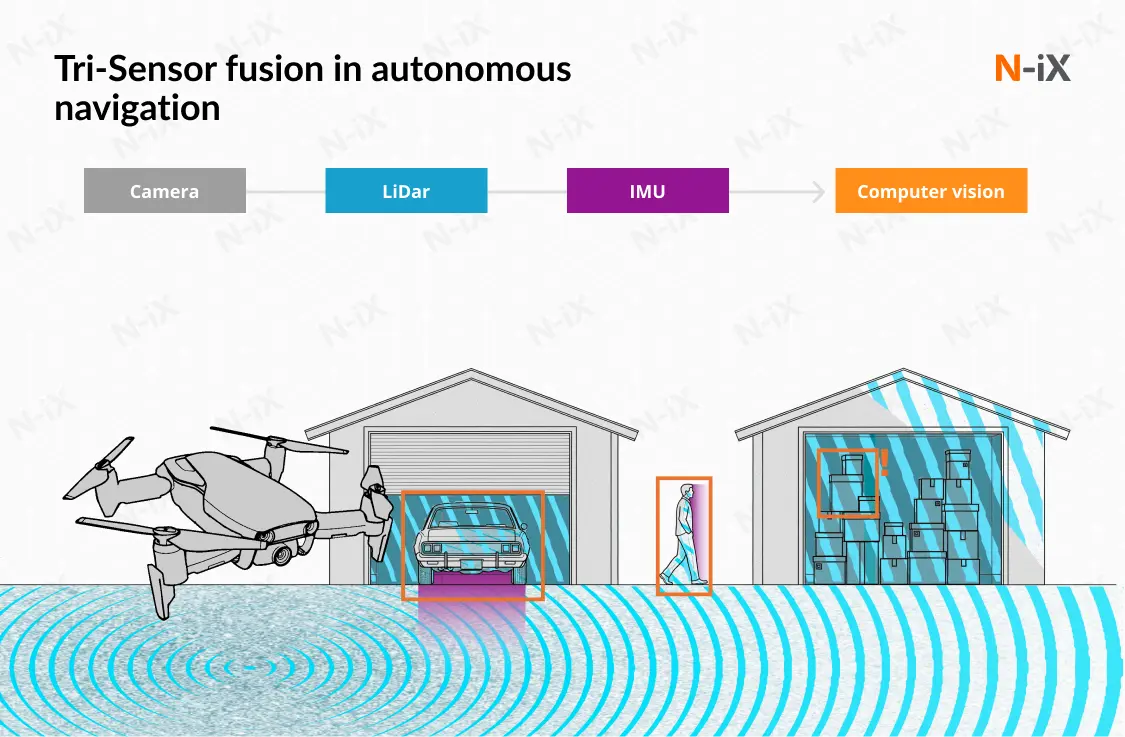

LiDAR + Camera + Inertial Measurement Unit (IMU)

This combination is considered the benchmark for navigation and object recognition in vehicles, drones, and other mobile autonomous robots.

LiDAR offers precise 3D spatial mapping, capturing the depth and structure of the surroundings. Cameras provide detailed visual information, such as color, texture, and the content of signs, which LiDAR cannot capture. The IMU contributes motion context by measuring acceleration and rotation to track the robot's movement.

Collectively, they form a unified environmental model that balances spatial accuracy (LiDAR), semantic understanding (camera), and dynamic motion data (IMU). This integration performs well in urban, off-road, and warehouse environments alike, facilitating real-time localization, obstacle avoidance, and adaptive control.
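To make the division of labor concrete, here is a minimal one-dimensional Kalman filter sketch in which IMU data drives the prediction and LiDAR provides the correction. It assumes the IMU's acceleration has already been integrated into a per-step displacement, and the noise values are illustrative rather than tuned for any real hardware. Each prediction increases the estimate's uncertainty (IMU drift accumulates); each LiDAR update pulls the estimate toward the measured position and reduces that uncertainty.

```python
from dataclasses import dataclass

@dataclass
class ScalarKalman:
    """1D position filter: IMU-based prediction, LiDAR-based correction."""
    x: float  # position estimate (m)
    p: float  # variance of the estimate (m^2)
    q: float  # process noise added per prediction step, models IMU drift (m^2)
    r: float  # variance of a LiDAR position measurement (m^2)

    def predict(self, displacement: float) -> None:
        # Dead-reckon using the IMU-derived displacement; uncertainty grows.
        self.x += displacement
        self.p += self.q

    def update(self, lidar_position: float) -> None:
        # Kalman gain: how much to trust the LiDAR fix versus the prediction.
        k = self.p / (self.p + self.r)
        self.x += k * (lidar_position - self.x)
        self.p *= 1.0 - k

kf = ScalarKalman(x=0.0, p=1.0, q=0.01, r=0.04)
kf.predict(displacement=0.5)   # IMU says we moved about 0.5 m
kf.update(lidar_position=0.6)  # LiDAR localizes us at 0.6 m
```

After these two steps the estimate is about 0.596 m, close to the LiDAR fix because the initial estimate was very uncertain, and its variance (about 0.038 m²) is lower than that of either input. Full 3D systems typically use a multivariate or extended Kalman filter built on the same predict/update cycle.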

Radar + Camera + Ultrasonic sensor

Radar provides reliable distance and speed data, even through fog, rain, or dust, where optical systems may fail. Cameras add crucial visual context, enabling object classification, lane detection, and scene interpretation. Ultrasonic sensors enhance the system at close range, making them ideal for parking assistance or navigating tight indoor spaces.

Together, these sensors create a robust perception system well suited to safety-critical applications such as advanced driver-assistance systems (ADAS) or autonomous warehouse robotics, where consistent performance in adverse environments is non-negotiable.

Force/Torque sensors + Vision systems

In robot arms on assembly lines and in quality inspection systems, cameras locate and identify objects, while force/torque sensors provide tactile feedback that ensures delicate handling and proper alignment during assembly. This is critical in tasks like inserting components, screwing parts together, or verifying fit. Fusing visual and physical interaction data allows robots to adjust their actions dynamically, improving product quality and reducing damage to fragile items. It is also vital for enabling compliant motion in collaborative robots (cobots) working alongside humans.
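One simple way to combine the two inputs is a guarded move: vision supplies the approximate target height, and the force/torque reading decides when to stop. In the sketch below, `read_force` is a hypothetical callback standing in for a real sensor driver, units are meters and newtons, and the step size, force limit, and overshoot margin are illustrative assumptions.

```python
from typing import Callable

def guarded_insert(start_z: float, vision_target_z: float, step: float,
                   read_force: Callable[[float], float], force_limit: float,
                   overshoot: float = 0.005) -> float:
    """Descend toward a vision-estimated height until contact force is felt.

    Vision provides the coarse goal; the force sensor decides where to stop.
    Motion never goes more than `overshoot` below the vision estimate.
    Returns the height (m) at which motion stopped.
    """
    floor = vision_target_z - overshoot
    n = 0
    z = start_z
    while start_z - (n + 1) * step >= floor:
        n += 1
        z = start_z - n * step  # computed from the start to avoid accumulated rounding
        if read_force(z) > force_limit:
            break  # contact detected: stop before forcing the part
    return z

# Simulated part: the real surface sits at 0.0525 m, slightly above vision's 0.05 m estimate.
def simulated_force(z: float) -> float:
    return 10.0 if z <= 0.0525 else 0.0

stop_z = guarded_insert(start_z=0.10, vision_target_z=0.05, step=0.001,
                        read_force=simulated_force, force_limit=2.0)
```

Here the robot stops at about 0.052 m, the first step at which contact is felt, even though vision alone would have driven it lower. A real cobot controller would typically use continuous impedance or admittance control rather than discrete steps.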

Thermal imaging + Cameras

Thermal sensors detect heat anomalies in machinery or components, which can indicate wear or potential failure. When combined with visual imaging, operators can see exactly which part is affected and why. This combination is frequently used for automated quality checks, such as spotting bad welds or overheating circuits, making it invaluable for predictive maintenance and reducing costly downtime.
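A minimal sketch of the thermal-visual link, assuming the thermal and color images are already registered (they cover the same field of view) and the thermal sensor simply has lower resolution. The function `hotspot_box_in_camera` and the temperature threshold are hypothetical. It finds thermal pixels above the threshold and maps their bounding box into camera pixel coordinates, so the affected region can be highlighted on the visual image.

```python
from typing import List, Optional, Tuple

Box = Tuple[int, int, int, int]  # (x_min, y_min, x_max, y_max), inclusive camera pixels

def hotspot_box_in_camera(thermal: List[List[float]], threshold_c: float,
                          cam_width: int, cam_height: int) -> Optional[Box]:
    """Bounding box, in camera pixels, of all thermal pixels above threshold_c."""
    rows, cols = len(thermal), len(thermal[0])
    hot = [(x, y) for y in range(rows) for x in range(cols) if thermal[y][x] > threshold_c]
    if not hot:
        return None
    xs = [x for x, _ in hot]
    ys = [y for _, y in hot]
    # Each thermal pixel covers an sx-by-sy block of camera pixels.
    sx, sy = cam_width / cols, cam_height / rows
    return (int(min(xs) * sx), int(min(ys) * sy),
            int((max(xs) + 1) * sx) - 1, int((max(ys) + 1) * sy) - 1)

thermal = [[25.0] * 4 for _ in range(4)]  # hypothetical 4x4 thermal frame, deg C
thermal[1][2] = 90.0                      # one overheating spot
box = hotspot_box_in_camera(thermal, threshold_c=60.0, cam_width=640, cam_height=480)
```

With a 640x480 camera, the single hot thermal pixel maps to the camera region (320, 120) to (479, 239), which an inspection system could then crop for closer visual analysis.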

RFID + Vision sensors

RFID gives fast, non-line-of-sight identification of tagged parts or products. When fused with vision sensors, the system gains spatial awareness, enabling it to locate and verify items visually while confirming their identity via RFID. This is widely used in smart factories and logistics hubs to ensure that items are tracked accurately and placed in the correct locations, often with minimal human intervention.
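The fusion logic for a single storage slot can be as simple as a decision table. This sketch assumes a vision check that reports whether an item is physically present in the slot, an RFID reader that returns the set of tag IDs it can read near that slot, and a hypothetical `check_slot` function with illustrative status labels.

```python
from typing import Set

def check_slot(expected_tag: str, item_seen: bool, tags_read: Set[str]) -> str:
    """Combine a vision presence check with RFID identity for one slot."""
    if not item_seen:
        # RFID needs no line of sight, so the tag may be read nearby
        # even though vision shows the slot itself is empty.
        return "nearby_not_in_slot" if expected_tag in tags_read else "empty"
    if expected_tag in tags_read:
        return "verified"      # right item, right place
    if tags_read:
        return "wrong_item"    # something is there, but with an unexpected tag
    return "unidentified"      # item present but no tag read: flag for review
```

Neither sensor alone can distinguish all four outcomes: vision cannot confirm identity, and RFID cannot confirm exact placement.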

Stereo camera system

Using two identical cameras positioned at a known distance apart, stereo vision systems replicate human binocular vision to extract depth information from 2D images. Each camera captures the same scene from a slightly different angle, and through triangulation, the system calculates the precise distance to objects in the environment.
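For a rectified stereo pair, triangulation reduces to a single formula: depth Z = f·B/d, where f is the focal length in pixels, B is the baseline (the distance between the cameras) in meters, and d is the disparity, the horizontal pixel shift of the same point between the two images. The camera parameters below are illustrative, not taken from a specific product.

```python
def stereo_depth_m(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth (m) of a point seen by a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive; zero corresponds to a point at infinity")
    return focal_px * baseline_m / disparity_px

# Hypothetical camera: 700 px focal length, 12 cm baseline.
near = stereo_depth_m(700.0, 0.12, 42.0)        # about 2.0 m
off_by_one = stereo_depth_m(700.0, 0.12, 41.0)  # about 2.05 m
```

Because depth is inversely proportional to disparity, a one-pixel matching error matters more the farther away the point is: at 2 m it shifts the estimate by roughly 5 cm in this example. This is one reason stereo works best at the shorter ranges typical of indoor pick-and-place and navigation.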

Stereo camera fusion is especially effective in applications like robotic pick-and-place, agricultural automation, and mobile robot navigation, where understanding object size, shape, and spatial layout is critical. It offers a cost-effective, compact alternative to LiDAR for indoor or well-lit environments and allows for precise localization, obstacle detection, and manipulation.

Cross-industry applications of sensor fusion in robotics

Sensor fusion technologies drive significant advances in robotic capabilities across multiple industries. Here are the leading applications, from well-established to emerging:

Autonomous vehicles: Navigation systems

Sensor fusion is the backbone of navigation in autonomous vehicles, from self-driving cars to warehouse robots and automated guided vehicles (AGVs). These systems combine data from cameras, LiDAR, radar, and other sensors to detect obstacles, pedestrians, and vehicles. AGVs use sensor fusion to navigate more efficiently and avoid collisions, especially in environments shared with pedestrians.

The key takeaway is this: sensor fusion is crucial for reliable, scalable, and certifiable autonomous navigation. It enhances situational awareness, reduces failures, and improves ROI by better handling edge cases.

Healthcare robotics: Surgeries, rehabilitation, patient monitoring

Sensor fusion in healthcare helps medical professionals deliver better patient outcomes, improve staff efficiency, and maintain safer clinical workflows.

Although robotics in medicine might still sound like science fiction, many promising use cases are already emerging on the market:

- Patient monitoring: Data from RGB-D cameras, microphones, thermal sensors, and LiDAR combine to distinguish between patients and staff, assess movement patterns, and detect signs of distress. These robots can deliver medications, assist in mobility, or alert staff when intervention is needed.

- Assistive robotics in rehabilitation: In robotic exoskeletons or prosthetics, sensor fusion combines muscle signals, IMUs, pressure sensors, and joint encoders to interpret user intent and respond in real time. For example, an exoskeleton can detect subtle muscle activations and body shifts, adjusting its support accordingly.

- Surgical robotics: For minimally invasive surgeries, robots with force/torque sensors, endoscopic cameras, IMUs, and optical tracking systems can guide instruments, adapt to tissue resistance, and reduce damage risk. Real-time fusion provides smoother control and better coordination, making procedures safer and more accurate.

- Robotic biopsy guidance: Fusing ultrasound with MRI or CT imaging can help medical professionals precisely locate biopsy targets within the body. This allows for more accurate sampling, reduces the need for repeat procedures, and improves patient outcomes, especially in hard-to-access tissues.

Implementing sensor fusion robotics in healthcare offers immense promise but also carries high stakes. These stakes are not just technical; their impact spreads across clinical, regulatory, operational, and human factors. Healthcare settings require near-perfect reliability. When a robot makes real-time decisions using fused sensor data, it must adhere to strict safety standards, especially when working with patients.

Manufacturing: Assembly, quality control, and predictive maintenance

In manufacturing, sensor fusion plays a pivotal role across several high-impact areas, including assembly, quality control, and predictive maintenance. Here's how it's applied in each area:

- Assembly automation: Cameras, force sensors, and IMUs enable robots to align parts accurately, even with tight tolerances or variable placement. This adaptive approach cuts misalignment and cycle time, making it ideal for high-mix, low-volume production.

- Quality control: Cameras detect surface flaws, lasers measure dimensions, and infrared sensors identify temperature issues. Used together in real time, they allow more precise inline inspection with fewer false positives, improving quality and traceability while reducing the need for post-production checks.

- Predictive maintenance: Robots with sensors like vibration detectors and thermal monitors can spot early signs of equipment failure or operational hazards. For example, minor temperature shifts and abnormal vibrations may indicate a failing bearing. This predictive ability prevents downtime, safeguards workers, and prolongs equipment life.
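The bearing example can be sketched as a rule that fuses two anomaly scores. The code below assumes each channel has a baseline of readings recorded while the machine was healthy, scores new readings as z-scores (standard deviations above the baseline mean), and raises an alert only when vibration and temperature are abnormal at the same time. The 3-sigma limit, the function names, and the sample values are illustrative assumptions; requiring agreement between channels is one way to cut false alarms from a single noisy sensor.

```python
import statistics
from typing import Sequence

def z_score(value: float, baseline: Sequence[float]) -> float:
    """How many standard deviations `value` lies above the baseline mean."""
    return (value - statistics.fmean(baseline)) / statistics.stdev(baseline)

def bearing_alert(vibration_rms: float, temperature_c: float,
                  vibration_baseline: Sequence[float],
                  temperature_baseline: Sequence[float],
                  z_limit: float = 3.0) -> bool:
    """Flag a likely bearing fault only when both channels are abnormal."""
    return (z_score(vibration_rms, vibration_baseline) > z_limit
            and z_score(temperature_c, temperature_baseline) > z_limit)

# Hypothetical healthy baselines: vibration RMS (mm/s) and housing temperature (deg C).
vib_base = [1.0, 1.1, 0.9, 1.0, 1.05, 0.95]
temp_base = [40.0, 40.5, 39.5, 40.0, 40.2, 39.8]
print(bearing_alert(1.5, 42.0, vib_base, temp_base))  # True: both channels abnormal
print(bearing_alert(1.5, 40.3, vib_base, temp_base))  # False: vibration only
```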

In short, sensor fusion transforms manufacturing robotics from rigid automation into flexible, intelligent systems. It reduces errors, boosts quality, and makes factories safer and more efficient.

Agricultural robotics: Smart navigation

Smart navigation with sensor fusion in robotics is essential for automating planting, spraying, harvesting, and field monitoring tasks. Unlike controlled indoor environments, farms present highly variable terrain, inconsistent lighting, and unstructured layouts, making traditional navigation systems unreliable.

A fusion of GNSS, LiDAR, cameras, and IMU allows agricultural robots to navigate fields independently and accurately. For example, a spraying robot can follow crop rows, avoid unexpected obstacles like animals or tools, and adjust its route over uneven ground.
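A simplified sketch of one common pattern: use the GNSS position when a fix is available, and dead-reckon from wheel odometry and IMU heading when it drops out, for example under a tree line. It assumes GNSS fixes have already been converted into a local east/north frame in meters; `Pose2D` and `update_pose` are hypothetical names. This switching scheme is deliberately simpler than filter-based fusion, which would blend the sources continuously rather than choosing one.

```python
import math
from dataclasses import dataclass
from typing import Optional, Tuple

@dataclass
class Pose2D:
    x: float        # meters east of a local origin
    y: float        # meters north of a local origin
    heading: float  # radians, 0 = east, counterclockwise positive

def update_pose(pose: Pose2D, gnss_fix: Optional[Tuple[float, float]],
                distance_m: float, heading_rad: float) -> Pose2D:
    """Return the new pose from GNSS if available, otherwise by dead reckoning."""
    if gnss_fix is not None:
        # Absolute fix: reset position, keep the IMU-derived heading.
        return Pose2D(gnss_fix[0], gnss_fix[1], heading_rad)
    # No fix: advance along the current heading by the odometry distance.
    return Pose2D(pose.x + distance_m * math.cos(heading_rad),
                  pose.y + distance_m * math.sin(heading_rad),
                  heading_rad)

pose = Pose2D(0.0, 0.0, 0.0)
pose = update_pose(pose, gnss_fix=None, distance_m=1.0, heading_rad=0.0)  # GNSS lost
pose = update_pose(pose, gnss_fix=None, distance_m=2.0, heading_rad=math.pi / 2)
pose = update_pose(pose, gnss_fix=(1.1, 1.9), distance_m=0.0, heading_rad=math.pi / 2)  # fix returns
```

While GNSS is unavailable, odometry error accumulates; when the fix returns, the position snaps back to the absolute measurement, which is why LiDAR and camera cues are also valuable for staying on crop rows in between.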

Sensor fusion also allows these robots to operate in low-visibility conditions, such as early morning fog or dusty fields. Radar sensors may be added to supplement LiDAR and vision systems when visibility drops.

Ultimately, multilayered inputs make control systems more productive, optimize resource use (such as water or fertilizer), and reduce labor dependency, all of which are critical to scaling modern agriculture while meeting sustainability goals.

Conclusion

Sensor fusion technology enables modern robots to perceive and interact with environments more effectively than single-sensor systems. Multiple sensor integration creates systems that exceed individual component capabilities, providing enhanced accuracy, reliability, and environmental awareness.

References

- [1] Sensor fusion market size, Markets&Markets

- [2] Srivastava, Demystifying Sensor Fusion and Multimodal Perception in Robotics, 2025