Kubernetes has become a go-to platform for orchestrating containers at scale, offering faster deployment, improved performance, and seamless scalability that help reduce time to market. But this flexibility comes at a price. Without proper Kubernetes cost optimization, expenses can escalate rapidly, especially as your business grows and requirements expand. So, how can you keep costs under control without trading off performance or availability? Discover the key Kubernetes expenses and the best practices that drive substantial savings in our expert guide.

What makes up Kubernetes expenses?

The majority of Kubernetes (K8s) expenses are linked to the resources used to run your containerized applications. Here are the main aspects that make up the total bill:

- The type of infrastructure: Kubernetes is an open-source platform that can run on traditional on-premises servers, in the cloud, or in hybrid environments. If you choose cloud-based Kubernetes, costs vary depending on the provider (AWS, Azure, or GCP). On-premises setups, in turn, can introduce significant additional expenses for hardware procurement and maintenance.

- The number and size of nodes: Nodes are the individual machines, virtual or physical, that make up a Kubernetes cluster and run your workloads. The more nodes your workloads need, and the larger those nodes are, the higher your costs climb. Additionally, cloud providers charge by usage, which means it’s up to you to determine how much CPU, memory, and storage you actually require. While such pricing gives you more control, it also makes overspending easy, especially in dynamic environments like Kubernetes.

- Storage type and capacity: Kubernetes supports cloud storage, network-attached storage (NAS), and local storage. Each option varies in performance, data durability, and cost, and with cloud block storage you typically pay for the capacity you provision, whether or not you use it. Choosing the right storage type and size for your workloads is essential to managing costs efficiently.

- Additional services and add-ons: Expenses can increase with additional tools, such as logging and monitoring solutions, load balancers, and security services. While many third-party tools are essential for production environments, their costs can add up quickly.

- Network traffic: While networking rarely accounts for the bulk of expenses, bandwidth consumption and data transfers across cloud regions (and, with many providers, across availability zones or out to the internet) can still add up to significant costs.
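Because compute is usually billed per node-hour, a rough monthly estimate for the node line item is node count × hourly price × hours. The sketch below assumes fixed on-demand prices; the pool names and rates are hypothetical placeholders, not real provider rates, and it ignores control-plane fees, storage, network, and add-ons, which are billed separately.

```python
# Rough monthly estimate of worker-node spend.
# Pool names and hourly prices are hypothetical placeholders, not real provider rates.

HOURS_PER_MONTH = 730  # common billing approximation: 24 h * 365 d / 12

def monthly_node_cost(node_pools: dict[str, tuple[int, float]]) -> float:
    """Sum count * hourly_price * hours over {pool_name: (node_count, hourly_price)}."""
    return sum(count * price * HOURS_PER_MONTH for count, price in node_pools.values())

pools = {
    "general": (6, 0.20),  # hypothetical: 6 nodes at $0.20/hour
    "memory": (2, 0.50),   # hypothetical: 2 nodes at $0.50/hour
}
print(f"${monthly_node_cost(pools):,.2f}")  # $1,606.00
```

Even this simple model shows why node count and node size dominate the bill: removing a single idle "memory" node in this example would save $365 a month.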

Kubernetes cost optimization is all about regulating your usage of these resources. It helps avoid overprovisioning, find areas for improvement, and establish robust cost control. Our DevOps experts emphasize, however, that cutting down the number of clusters without having visibility into your system can be detrimental to performance. You need to account not only for current workloads but also for expected additions, updates, and scaling needs.

Striking the right balance between cost reduction and performance requires a mix of architectural and operational approaches. Let’s explore several proven strategies and practices that our experts follow.


10 best practices for Kubernetes cost optimization

1. Conduct PoCs and establish continuous monitoring

If you’re considering migrating to Kubernetes, start with a proof of concept (PoC). That was our team’s approach when N-iX built a unified equipment insurance platform for a large UK energy supplier. We selected Kubernetes to accelerate the solution’s time to market, and it was essential to assess whether it fit the client’s infrastructure and goals. Our experts built several technical PoCs to evaluate Kubernetes usage and cost implications. This early assessment helped the client understand potential budgetary requirements before committing to full-scale adoption. It also enabled us to fine-tune the architecture for performance and cost efficiency before deploying the client’s workloads.

Once Kubernetes is in place, another critical step is gaining visibility into how this platform influences expenses. It’s not just about measuring how much your workloads cost to run on a given day, but about identifying usage patterns. Which services consume the most resources? How does resource usage change over time? Use monitoring and logging tools to record such trends and gain a clearer picture of how your Kubernetes deployment operates.
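As an illustration of finding which services consume the most resources, the sketch below averages CPU and memory samples per service and ranks them. It assumes you have already collected samples, for example from the Kubernetes Metrics API (the source `kubectl top` reads) or from Prometheus; the service names and values are hypothetical. The parser covers only common quantity suffixes (`n`, `u`, `m` for CPU; binary and decimal suffixes for memory), not the full Kubernetes quantity grammar.

```python
from collections import defaultdict

# Memory suffixes: binary (Ki, Mi, ...) and decimal (k, M, ...), as in Kubernetes quantities.
_MEM_SUFFIXES = {
    "Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40,
    "k": 10**3, "M": 10**6, "G": 10**9, "T": 10**12,
}

def cpu_to_millicores(q: str) -> float:
    """Convert CPU quantities like '250m', '1.5', or '50000000n' to millicores."""
    if q.endswith("n"):
        return float(q[:-1]) / 1_000_000  # nanocores
    if q.endswith("u"):
        return float(q[:-1]) / 1_000      # microcores
    if q.endswith("m"):
        return float(q[:-1])              # millicores
    return float(q) * 1000                # whole cores

def memory_to_mib(q: str) -> float:
    """Convert memory quantities like '512Mi', '1Gi', or '2G' to MiB."""
    for suffix in sorted(_MEM_SUFFIXES, key=len, reverse=True):  # check 'Mi' before 'M'
        if q.endswith(suffix):
            return float(q[: -len(suffix)]) * _MEM_SUFFIXES[suffix] / 2**20
    return float(q) / 2**20  # plain bytes

def rank_services(samples):
    """samples: iterable of (service, cpu_quantity, memory_quantity).
    Returns [(service, avg_millicores, avg_mib)] sorted by CPU, highest first."""
    totals = defaultdict(lambda: [0.0, 0.0, 0])
    for service, cpu, mem in samples:
        t = totals[service]
        t[0] += cpu_to_millicores(cpu)
        t[1] += memory_to_mib(mem)
        t[2] += 1
    ranked = [(svc, c / n, m / n) for svc, (c, m, n) in totals.items()]
    return sorted(ranked, key=lambda r: r[1], reverse=True)

# Hypothetical samples for three services.
samples = [
    ("checkout", "450m", "600Mi"), ("checkout", "550m", "700Mi"),
    ("search", "1200m", "1Gi"), ("search", "800m", "1Gi"),
    ("reports", "50000000n", "256Mi"),
]
for service, cpu, mem in rank_services(samples):
    print(f"{service:<10} {cpu:>7.0f}m {mem:>7.0f}Mi")
```

Running the same ranking over several weeks of samples, rather than a single snapshot, is what turns it into a view of usage patterns.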

With that baseline, you can set up proactive and effective monitoring that detects cost anomalies before they spiral. It usually includes real-time alerting, allowing your team to respond to unexpected spikes and adjust workloads dynamically.
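A simple form of such anomaly detection compares each day’s cost with a trailing baseline. The sketch below flags a day when its cost exceeds the mean of the previous `window` days by more than `threshold` standard deviations. The window, threshold, and cost series are hypothetical, and dedicated tools typically add seasonality handling and alert routing on top of a rule like this.

```python
from statistics import mean, stdev

def find_cost_anomalies(daily_costs, window=7, threshold=3.0):
    """Return (day_index, cost) for days whose cost exceeds the trailing
    `window`-day mean by more than `threshold` sample standard deviations."""
    anomalies = []
    for i in range(window, len(daily_costs)):
        baseline = daily_costs[i - window:i]
        mu, sigma = mean(baseline), stdev(baseline)
        limit = mu + threshold * sigma
        # With a perfectly flat baseline (sigma == 0), any increase is flagged.
        if daily_costs[i] > limit:
            anomalies.append((i, daily_costs[i]))
    return anomalies

# Hypothetical daily cluster costs in dollars; day 8 has a spike.
costs = [100, 102, 98, 101, 99, 103, 97, 100, 180, 101]
print(find_cost_anomalies(costs))  # [(8, 180)]
```

Note that the spike becomes part of the baseline for the following days, which raises the threshold temporarily; a median-based baseline is a common alternative when that matters.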

2. Right-size and autoscale resources

Incorrect sizing is one of the most common issues within Kubernetes clusters. It often stems from provisioning nodes for peak demand, even when such peaks are rare. Avoiding overallocation is therefore one of the most effective ways to cut costs. So, how do you trim excess resources without underprovisioning? Here are two primary ways to approach right-sizing:

- Set requests and limits carefully. In Kubernetes, “requests” specify the amount of CPU and memory reserved for a container, which the scheduler uses to decide which node a pod fits on, while “limits” set the maximum amount the container is allowed to use. Setting these values appropriately helps you minimize both application failures (for example, a container that exceeds its memory limit is terminated) and reserved but idle node capacity.

- Use third-party solutions for usage insights. Utilities like Goldilocks analyze trends in your CPU/memory consumption over time. They can identify overprovisioned pods (groups of containers with shared storage and network resources) or underutilized nodes and recommend optimal values for requests and limits.
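To make the effect of right-sizing concrete, the sketch below derives a request from the 95th percentile of observed usage plus 15% headroom, then estimates how many nodes the same number of replicas needs before and after. This percentile rule is a simplified stand-in chosen for illustration, not Goldilocks’ algorithm (Goldilocks relies on the Vertical Pod Autoscaler’s recommender). The usage samples, node size, and replica count are hypothetical, and the node estimate ignores DaemonSets and system overhead.

```python
import math

def percentile(values, pct):
    """Nearest-rank percentile of a non-empty list (pct between 0 and 100)."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]

def recommend_request(usage_samples, pct=95, headroom=1.15):
    """Suggested request: pct-th percentile of observed usage times headroom, rounded up."""
    return math.ceil(percentile(usage_samples, pct) * headroom)

def nodes_needed(replicas, alloc_cpu_m, alloc_mem_mi, req_cpu_m, req_mem_mi):
    """Nodes needed when pods are packed by their requests alone, as the scheduler
    does; ignores DaemonSets and system overhead."""
    per_node = min(alloc_cpu_m // req_cpu_m, alloc_mem_mi // req_mem_mi)
    if per_node == 0:
        raise ValueError("a single pod's requests exceed the node's allocatable resources")
    return math.ceil(replicas / per_node)

# Hypothetical per-pod usage samples: CPU in millicores, memory in MiB.
cpu_usage = [310, 350, 290, 400, 380, 360, 330, 420, 300, 340]
mem_usage = [700, 720, 690, 760, 740, 710, 730, 780, 705, 715]
new_cpu, new_mem = recommend_request(cpu_usage), recommend_request(mem_usage)

# Hypothetical node with 3920m CPU and 14000Mi memory allocatable; 21 replicas.
print(nodes_needed(21, 3920, 14000, 1000, 2048))        # current requests: 7
print(new_cpu, new_mem)                                  # 483 897
print(nodes_needed(21, 3920, 14000, new_cpu, new_mem))  # right-sized: 3
```

The headroom factor is the knob that trades cost against the risk of throttling or out-of-memory kills; a higher percentile or larger headroom is safer for spiky workloads.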

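While right-sizing helps find the initial balance, autoscaling allows you to maintain it in dynamic environments. An autoscaler is a Kubernetes component, cloud feature, or standalone service that adjusts the computing resources allocated to your applications. Common options include the Horizontal Pod Autoscaler, which changes the number of pod replicas; the Vertical Pod Autoscaler, which adjusts pods’ CPU and memory requests; and the Cluster Autoscaler, which adds or removes nodes. Autoscaling enables automated Kubernetes cost optimization by responding to real-time changes in demand, rather than locking in fixed values.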
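The Horizontal Pod Autoscaler’s core scaling rule, as described in the Kubernetes documentation, is desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), with no change when the ratio is within a tolerance (0.1 by default) of 1.0. The sketch below reimplements only that rule plus min/max clamping; the real controller also applies stabilization windows and special handling for missing metrics and not-yet-ready pods. The replica bounds and utilization figures are hypothetical.

```python
import math

def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10, tolerance=0.1):
    """Simplified HPA rule: ceil(current_replicas * current_metric / target_metric),
    skipped when the ratio is within `tolerance` of 1.0, then clamped to [min, max]."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to target: leave replicas unchanged
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))

# Hypothetical average CPU utilization (%) against a 60% target.
print(desired_replicas(4, 90, 60))   # scale out: 6
print(desired_replicas(6, 30, 60))   # scale in: 3
print(desired_replicas(4, 63, 60))   # within tolerance: 4
print(desired_replicas(8, 180, 60))  # capped at max_replicas: 10
```

The target value and the min/max bounds are where cost and reliability meet: a higher utilization target packs work onto fewer pods, while `max_replicas` caps how much a traffic spike can cost.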

Our experts also note that tuning an autoscaler to your specific needs, performance requirements, and architecture is a nuanced task. Off-the-shelf configurations rarely optimize resources with precision. Working with a Kubernetes consultant can help you tailor autoscaling strategies and solutions to your workload behavior, maximizing the value of this investment.

3. Outsource Kubernetes optimization

An experienced consultant can assist with Kubernetes cost optimization at all stages—whether you’re just adopting this platform, scaling operations, or facing orchestration challenges. A reliable partner helps you minimize trial and error. They draw on experience combined with industry best practices to help you quickly define priorities and make cost-effective decisions.

Besides K8s expertise, look for the following broader qualifications when choosing a Kubernetes partner:

- Cloud expertise and certifications: Partnerships with leading hyperscalers demonstrate extensive knowledge of cloud services.

- CI/CD expertise: It’s easier to avoid unnecessary expenses and ensure smooth deployment when container orchestration works within continuous integration and delivery pipelines.

- Cloud monitoring and FinOps expertise: A partner with FinOps experience is better prepared to act on usage monitoring results, helping you analyze and reduce Kubernetes expenses in real time.

- DevOps expertise: A K8s consultant must have a deep understanding of how infrastructure, operations, and automation affect performance and cost.

4. Take advantage of cloud discount plans

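Managed services like Amazon Elastic Kubernetes Service (EKS), Google Kubernetes Engine (GKE), and Azure Kubernetes Service (AKS) run your workloads on the provider’s compute, which you can purchase through discount models that significantly contribute to Kubernetes cost reduction. These include Reserved Instances, Savings Plans, and Spot Instances (AWS terminology; Azure and Google Cloud offer comparable reservations, savings plans or committed use discounts, and Spot VMs).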

- Savings plans provide a discount in exchange for committing to a consistent amount of compute usage (on AWS, measured in dollars per hour) for a one- or three-year term. A three-year commitment typically yields deeper savings than a one-year term, compared with on-demand pricing.

- Reserved instances let you commit to specific compute capacity for a one- or three-year term, optionally paying part or all of the cost upfront, in exchange for a discount.

- Spot instances let you use a provider’s spare compute capacity at a steep discount instead of paying on-demand rates. For instance, according to AWS, Spot Instances can cost up to 90% less than On-Demand prices, a discount that applies to the EC2 worker nodes in an EKS cluster. At the same time, this strategy involves risk: the provider can reclaim spot capacity at short notice (on AWS, with a two-minute warning) whenever it needs that capacity back.

Choosing the right combination of pricing options is one of the most important and fruitful adjustments to your Kubernetes strategy. Instead of going all-in on one model, you can use reserved instances for critical workloads and spot instances for low-priority batch tasks. If you have doubts about how to use discount models effectively, N-iX cloud experts can evaluate your usage patterns and help you select an optimized mix.
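The arithmetic behind such a mix is straightforward: each slice of capacity costs the on-demand rate minus its discount. The sketch below compares an all-on-demand node pool with a hypothetical blend of committed, spot, and on-demand nodes. The rate and discount percentages are placeholders rather than real prices; actual discounts depend on the provider, region, instance type, and commitment term, and spot prices fluctuate.

```python
def blended_hourly_cost(on_demand_rate, mix):
    """Hourly cost of a node mix.
    mix: list of (node_count, discount) pairs, where discount is a fraction
    off the on-demand rate (0.0 = on-demand, 0.4 = 40% off)."""
    return sum(count * on_demand_rate * (1 - discount) for count, discount in mix)

RATE = 0.20  # hypothetical on-demand price per node-hour

all_on_demand = [(10, 0.0)]
blended = [
    (4, 0.40),  # hypothetical committed-use discount for steady, critical workloads
    (4, 0.70),  # hypothetical spot discount for interruptible batch jobs
    (2, 0.0),   # on-demand headroom for bursts
]

before = blended_hourly_cost(RATE, all_on_demand)
after = blended_hourly_cost(RATE, blended)
print(f"${before:.2f}/h -> ${after:.2f}/h ({1 - after / before:.0%} saved)")
```

Keeping a slice of on-demand capacity in the mix is a deliberate choice: it absorbs bursts and spot interruptions without forcing you to over-commit.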

5. Create efficient CI/CD pipelines

Robust CI/CD pipelines can significantly contribute to K8s cost optimization by automating deployments, reducing manual errors, accelerating release cycles, and ensuring that only extensively tested changes reach production. In this case, automation helps avoid unnecessary resource consumption caused by misconfigurations and inefficient builds. With CI/CD, you can maintain a more predictable Kubernetes environment, which, in turn, leads to better operational efficiency and cost control.


6. Establish robust storage management

Hidden storage costs tend to drain budgets silently and persistently. Orphaned volumes are a common culprit: storage resources that continue to incur costs but are no longer associated with any instance or application. Ensure you understand how your volumes are provisioned and deprovisioned, and conduct regular audits to uncover such hidden storage.
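One way to start such an audit is to compare PersistentVolumes, PersistentVolumeClaims, and pods. The sketch below assumes you have already loaded the `items` arrays from `kubectl get pv -o json`, `kubectl get pvc -A -o json`, and `kubectl get pods -A -o json`; the sample objects are trimmed, hypothetical examples. It lists PVs in the `Released` phase and PVCs that no pod mounts. Treat the results as candidates for review rather than for automatic deletion, since a claim can be legitimately unmounted, for example between CronJob runs.

```python
def find_unused_storage(pvs, pvcs, pods):
    """Return (released_pvs, unmounted_pvcs) as candidates for a storage audit."""
    # PVs whose claim was deleted but which still exist (typically reclaimPolicy: Retain).
    released = [pv["metadata"]["name"] for pv in pvs
                if pv.get("status", {}).get("phase") == "Released"]

    # (namespace, claim name) pairs referenced by any pod's volumes.
    mounted = set()
    for pod in pods:
        ns = pod["metadata"]["namespace"]
        for volume in pod.get("spec", {}).get("volumes", []):
            claim = volume.get("persistentVolumeClaim")
            if claim:
                mounted.add((ns, claim["claimName"]))

    unmounted = [(c["metadata"]["namespace"], c["metadata"]["name"]) for c in pvcs
                 if (c["metadata"]["namespace"], c["metadata"]["name"]) not in mounted]
    return released, unmounted

# Trimmed, hypothetical API objects.
pvs = [
    {"metadata": {"name": "pv-db"}, "status": {"phase": "Bound"}},
    {"metadata": {"name": "pv-old"}, "status": {"phase": "Released"}},
]
pvcs = [
    {"metadata": {"namespace": "shop", "name": "db-data"}},
    {"metadata": {"namespace": "dev", "name": "scratch-data"}},
]
pods = [
    {"metadata": {"namespace": "shop", "name": "db-0"},
     "spec": {"volumes": [{"name": "data", "persistentVolumeClaim": {"claimName": "db-data"}}]}},
]
print(find_unused_storage(pvs, pvcs, pods))
# (['pv-old'], [('dev', 'scratch-data')])
```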

Besides eliminating orphaned volumes, Kubernetes cost optimization depends on efficient storage management. Here are several tips:

- Use dynamic provisioning of storage to avoid manual errors and automate a part of the optimization work;

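- Enable volume expansion (allowVolumeExpansion) so you can start with smaller volumes and grow them as actual usage requires, instead of overprovisioning upfront;

- Set appropriate reclaim policies for persistent volumes (for example, Delete rather than Retain where data doesn’t need to outlive its claim) to avoid storing data that’s no longer needed after its PersistentVolumeClaim is deleted;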

- Choose appropriate storage classes based on workload needs, using high-performance storage for latency-sensitive applications only.
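Several of these tips map directly onto StorageClass fields. The sketch below builds two StorageClass manifests as Python dictionaries and prints them as a JSON `List`, which `kubectl apply -f` accepts. It assumes the AWS EBS CSI driver (`ebs.csi.aws.com`) with `gp3` volumes; the class names are hypothetical, and other providers use different provisioners and parameters. Dynamic provisioning then happens whenever a PVC references one of these classes.

```python
import json

def storage_class(name, reclaim_policy):
    """StorageClass manifest for the AWS EBS CSI driver (assumed provisioner)."""
    return {
        "apiVersion": "storage.k8s.io/v1",
        "kind": "StorageClass",
        "metadata": {"name": name},
        "provisioner": "ebs.csi.aws.com",
        "parameters": {"type": "gp3"},
        # Delete: remove the backing volume when its PVC is deleted.
        # Retain: keep it (and keep paying for it) until someone cleans it up.
        "reclaimPolicy": reclaim_policy,
        # Lets you expand PVCs later instead of overprovisioning up front.
        "allowVolumeExpansion": True,
        # Delay provisioning until a pod using the PVC is scheduled.
        "volumeBindingMode": "WaitForFirstConsumer",
    }

manifests = [
    storage_class("standard-gp3", "Delete"),  # default for disposable data
    storage_class("retained-gp3", "Retain"),  # only for data that must outlive its claim
]
print(json.dumps({"apiVersion": "v1", "kind": "List", "items": manifests}, indent=2))
```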

7. Set resource quotas within namespaces

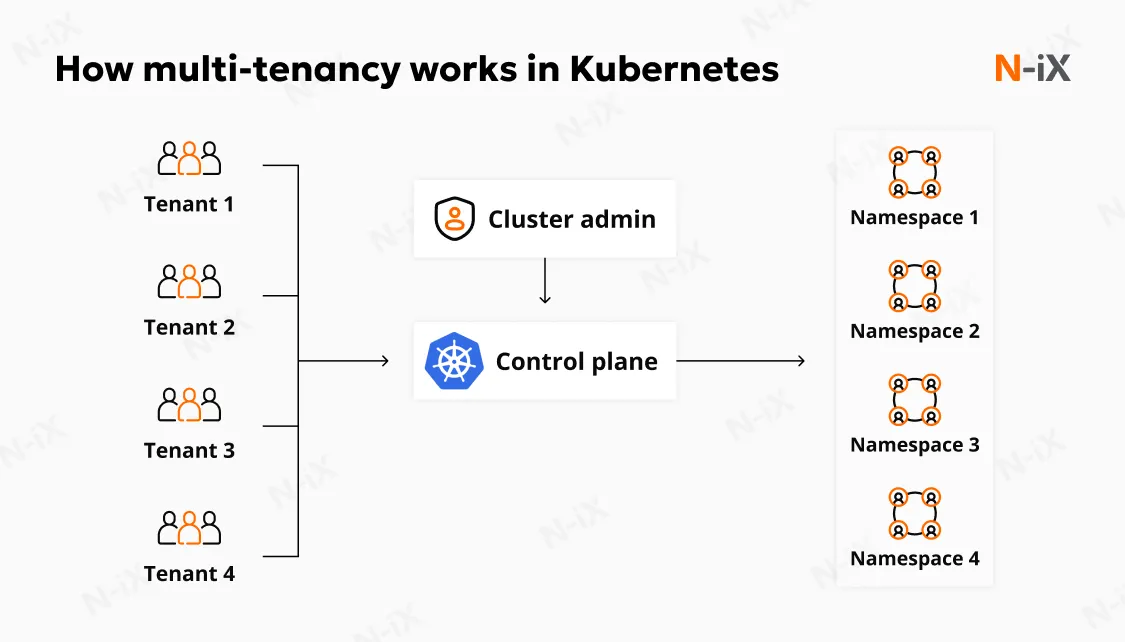

Namespaces in Kubernetes are used to create sub-units within clusters and divide resources between teams, environments, or applications. Setting resource quotas within these namespaces helps control how much CPU, memory, and storage each group can use. Quotas promote accountability, reduce waste, and enable cost-efficient resource distribution across a cluster.
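As an example, the sketch below builds a ResourceQuota for a hypothetical `team-a` namespace and prints it as JSON for `kubectl apply -f`. The limits are placeholder values. Note that once a namespace has a quota on CPU or memory, new pods there must declare the corresponding requests or limits (or receive defaults from a LimitRange); otherwise the quota system may reject them.

```python
import json

def resource_quota(namespace, hard):
    """ResourceQuota manifest capping aggregate resource use in one namespace."""
    return {
        "apiVersion": "v1",
        "kind": "ResourceQuota",
        "metadata": {"name": f"{namespace}-quota", "namespace": namespace},
        "spec": {"hard": hard},
    }

quota = resource_quota("team-a", {
    "requests.cpu": "8",          # sum of CPU requests across all pods
    "requests.memory": "16Gi",    # sum of memory requests
    "limits.cpu": "16",
    "limits.memory": "32Gi",
    "requests.storage": "200Gi",  # total storage requested by PVCs
    "persistentvolumeclaims": "10",
})
print(json.dumps(quota, indent=2))
```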

8. Consolidate clusters and use multi-tenancy

Clusters that aren’t used to their full capacity increase overhead without offering any advantages. If you are running many of them, it creates additional complexity on top of extra fees, further straining both your budget and your teams’ time. Instead of spreading yourself thin, consolidate workloads into fewer, larger clusters. This will promote K8s cost optimization and improve resource utilization.
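To see why consolidation improves utilization, consider bin packing: pods from several half-empty clusters often fit onto fewer nodes once they share one pool. The sketch below uses first-fit decreasing, a standard packing heuristic, on CPU requests only. The pod requests and node size are hypothetical, and a real scheduler also weighs memory, affinity rules, and high-availability constraints, so treat the result as a rough estimate. In practice, each separate cluster also carries its own control-plane and baseline overhead on top of its nodes.

```python
def ffd_node_count(pod_requests_m, node_capacity_m):
    """Nodes needed to hold all pods, using first-fit decreasing on CPU requests (millicores)."""
    free = []  # remaining CPU on each node opened so far
    for req in sorted(pod_requests_m, reverse=True):
        if req > node_capacity_m:
            raise ValueError(f"pod request {req}m exceeds node capacity {node_capacity_m}m")
        for i, remaining in enumerate(free):
            if req <= remaining:
                free[i] -= req  # place pod on the first node with room
                break
        else:
            free.append(node_capacity_m - req)  # no room anywhere: open a new node
    return len(free)

# Hypothetical CPU requests (millicores) in three small, separate clusters.
clusters = [[1500, 1000], [2000, 500], [1200, 800]]
NODE_CPU_M = 4000  # hypothetical allocatable CPU per node

separate = sum(ffd_node_count(pods, NODE_CPU_M) for pods in clusters)
combined = ffd_node_count([p for pods in clusters for p in pods], NODE_CPU_M)
print(separate, combined)  # 3 2
```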

But how do you ensure security in such a shared environment? When N-iX engineers implement the multi-tenancy model, they focus on enabling multiple teams or applications to share the same cluster safely. Several principles that help us achieve this include:

- Isolation through namespaces;

- Role-based access control (RBAC);

- Resource quotas and limits;

- Communication control between namespaces and pods.
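For the last point, Kubernetes NetworkPolicies are a built-in mechanism. The sketch below builds a policy that admits ingress traffic to every pod in a namespace only from pods in that same namespace, printed as JSON for `kubectl apply -f`. The namespace and policy names are hypothetical, and policies are enforced only if the cluster’s network plugin supports NetworkPolicy.

```python
import json

def same_namespace_only_policy(namespace):
    """NetworkPolicy that admits ingress only from pods in the same namespace."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "allow-same-namespace", "namespace": namespace},
        "spec": {
            "podSelector": {},  # empty selector: applies to every pod in the namespace
            "policyTypes": ["Ingress"],
            # A peer with only a podSelector matches pods in the policy's own namespace.
            "ingress": [{"from": [{"podSelector": {}}]}],
        },
    }

print(json.dumps(same_namespace_only_policy("team-a"), indent=2))
```

Applying one such policy per tenant namespace blocks cross-tenant traffic by default; any shared services then need explicit, additional allow rules.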

9. Automate lifecycle management of environments

Development, testing, and legacy environments often linger after they’re no longer useful and continue consuming resources. To support effective Kubernetes cost management, consider automating environment lifecycles. This helps clean up unused infrastructure once it has served its purpose, simplifying cluster oversight and preventing the accumulation of hidden costs.
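One lightweight way to automate this is a scheduled job that removes short-lived environments once they exceed a time-to-live. The sketch below assumes each ephemeral environment lives in its own namespace carrying a hypothetical `env-ttl-hours` label (a team convention, not a Kubernetes built-in) and that namespace objects come from `kubectl get namespaces -o json`. It only selects expired namespaces and prints the corresponding `kubectl delete namespace` commands; the actual deletion is left to the caller.

```python
from datetime import datetime, timedelta, timezone

TTL_LABEL = "env-ttl-hours"  # hypothetical label convention, not a Kubernetes built-in

def expired_namespaces(namespaces, now):
    """Names of namespaces older than the TTL (in hours) given by their TTL label."""
    expired = []
    for ns in namespaces:
        meta = ns["metadata"]
        ttl = meta.get("labels", {}).get(TTL_LABEL)
        if ttl is None:
            continue  # no TTL label: never cleaned up automatically
        created = datetime.strptime(meta["creationTimestamp"], "%Y-%m-%dT%H:%M:%SZ")
        created = created.replace(tzinfo=timezone.utc)  # API timestamps are UTC
        if now - created > timedelta(hours=int(ttl)):
            expired.append(meta["name"])
    return expired

# Trimmed, hypothetical namespace objects.
namespaces = [
    {"metadata": {"name": "pr-1234", "creationTimestamp": "2024-05-01T10:00:00Z",
                  "labels": {TTL_LABEL: "24"}}},
    {"metadata": {"name": "pr-1240", "creationTimestamp": "2024-05-03T08:00:00Z",
                  "labels": {TTL_LABEL: "24"}}},
    {"metadata": {"name": "staging", "creationTimestamp": "2024-01-15T09:00:00Z"}},
]
now = datetime(2024, 5, 3, 12, 0, tzinfo=timezone.utc)
for name in expired_namespaces(namespaces, now):
    print(f"kubectl delete namespace {name}")
```

Making the TTL opt-in through a label keeps long-lived environments such as staging safe by default.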

10. Develop budgetary guardrails and involve stakeholders

Governance plays a crucial role in Kubernetes cost optimization. Without clear rules and accountability, teams can accidentally overspend. Therefore, it’s essential to enforce policies regarding appropriate levels of spending for different workloads, especially in cloud platforms like Azure or AWS.
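A basic guardrail compares each team’s month-to-date spend with its budget and escalates as spending approaches the limit. The sketch below uses hypothetical team names, budgets, and spend figures; in practice, spend would come from a cost-allocation tool (such as OpenCost) that attributes cluster costs to namespaces or labels, and the 80% warning threshold is an arbitrary example.

```python
def budget_status(spend_by_team, budget_by_team, warn_at=0.8):
    """Classify each team's spend as 'ok', 'warning' (>= warn_at of budget), or 'over budget'."""
    status = {}
    for team, budget in budget_by_team.items():
        used = spend_by_team.get(team, 0.0) / budget
        if used >= 1.0:
            status[team] = "over budget"
        elif used >= warn_at:
            status[team] = "warning"
        else:
            status[team] = "ok"
    return status

# Hypothetical monthly budgets and month-to-date spend, in dollars.
budgets = {"payments": 5000, "search": 3000, "analytics": 2000}
spend = {"payments": 3100, "search": 2700, "analytics": 2150}
print(budget_status(spend, budgets))
# {'payments': 'ok', 'search': 'warning', 'analytics': 'over budget'}
```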

Also, establish a process for reviewing cost-related changes. When scaling decisions or application updates are made, stakeholders should be able to assess their financial impact and decide whether the changes align with business goals.

There are no “good” or “bad” costs. Some applications may be more expensive to run, but critical to your operations. What matters is whether spending is justified and aligned with your business priorities. By promoting communication and transparent decision-making, you can ensure that engineering and business teams work toward the same goals and make informed trade-offs when necessary.

Wrapping up

Kubernetes cost optimization is crucial to making the most out of your infrastructure. When done right, it helps you avoid overspending and underutilization of resources, balancing performance and efficiency. At the same time, substantial long-term improvements require a comprehensive approach that goes beyond simply integrating a cost monitoring platform.

The challenge is that many optimization tools require careful configuration to deliver value. Even then, maintaining efficiency demands operational changes in the way your teams approach Kubernetes spending. Without reliable DevOps expertise, you risk sinking more money into cost-reduction tools and efforts without achieving meaningful results.

With an experienced Kubernetes partner, cost optimization becomes proactive, not reactive. Outsourcing enables you to access the required expertise, gain visibility into your Kubernetes infrastructure, and align the environment with both technical needs and business goals.

Why perform K8s cost optimization with N-iX?

- With 23 years of experience, N-iX is a reliable partner for over 160 clients across 10 industries, including the finance, logistics, and retail sectors.

- In the past five years, we have successfully delivered over 150 DevOps projects, helping clients optimize K8s costs and design cost-effective infrastructures.

- N-iX is an AWS Premier Tier Services Partner, Microsoft Solutions Partner, and Google Cloud Platform Partner, enabling us to handle EKS, AKS, and GKE projects with top cloud-native expertise.

- Our team of 2,400 tech experts includes 70 seasoned DevOps specialists, proficient in container orchestration, CI/CD engineering, cloud cost monitoring, and infrastructure automation.
