Edge AI runs Artificial Intelligence where you need it: right on your devices. Sensors, cameras, medical equipment, and factory machines analyze data instantly, without relying on the cloud.

The impact is significant. Manufacturing CTOs report that edge-based predictive maintenance reduces unplanned downtime by up to 40% through real-time anomaly detection. Healthcare systems run diagnostic AI directly on medical devices, easing HIPAA compliance while accelerating clinical workflows. Automotive companies process terabytes of sensor data locally, making autonomous features viable without overwhelming network infrastructure. As this technology evolves, these benefits will only grow.

Edge AI isn't just an improvement; it's a capability shift that legacy architectures can't match. Here are the hottest edge AI trends you can leverage for competitive advantage.

6 trends shaping edge AI implementation

Where is edge AI heading next? Here are six trends that are reshaping how organizations approach intelligence at the edge. Some are ready for production today. Others are emerging rapidly. All will influence your technology decisions over the next 18 months.

1. Hardware evolution

Specialized AI chips are transforming what's possible at the edge. Major semiconductor companies now design processors specifically for edge AI workloads. These chips deliver dramatically better performance per watt than general-purpose processors. Leading chips achieve up to 26 tera-operations per second (TOPS) at only 2.5 watts, or roughly 10 TOPS per watt. That makes them at least 6 times more efficient than CPUs and mainstream GPUs for neural network tasks.
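To make the efficiency figure concrete, here is a minimal sketch of the performance-per-watt arithmetic using the numbers quoted above. The function name `tops_per_watt` is our own, chosen for illustration.

```python
def tops_per_watt(tops: float, watts: float) -> float:
    """Return efficiency in tera-operations per second per watt."""
    if watts <= 0:
        raise ValueError("watts must be positive")
    return tops / watts


# Figures from the text: 26 TOPS at 2.5 watts.
efficiency = tops_per_watt(26.0, 2.5)
print(f"{efficiency:.1f} TOPS/W")  # prints "10.4 TOPS/W"
```

The same function lets you compare candidate chips on a like-for-like basis, provided the vendor figures are measured at the same numeric precision.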

Neural Processing Units (NPUs) are becoming standard in edge devices. They handle AI tasks while consuming minimal power. Specialized AI chips and NPUs are used in:

Manufacturing: Quality inspection cameras on assembly lines run computer vision models locally. A defect detection system at an automotive plant processes thousands of parts per hour without sending image data to servers.

Healthcare: Portable ultrasound devices perform real-time image analysis during field diagnoses. Continuous glucose monitors analyze blood sugar patterns directly on the device, alerting diabetic patients immediately.

Smartphones: Your phone's camera uses NPUs for real-time face detection, night mode processing, and computational photography, all without internet connectivity.

Industrial IoT: Vibration sensors on oil rig equipment analyze acoustic patterns to predict bearing failures. These sensors operate for months on battery power in remote locations.

Neuromorphic computing represents the next frontier among edge AI trends. These chips mimic how the human brain processes information. They promise dramatic efficiency gains for pattern recognition and real-time decision-making. For energy-autonomous sensors and event-driven systems, neuromorphic architectures can reduce power consumption to levels previously impossible with traditional processors.

2. Model optimization techniques

Model optimization is one of the most mature edge AI trends, with proven machine learning techniques already deployed at scale. Large AI models are shrinking to fit edge devices. Quantization reduces model size by storing weights as lower-precision numbers (for example, 8-bit or 4-bit integers instead of 32-bit floats), usually with little loss of accuracy. Your teams can deploy models that are 4-8 times smaller than the originals.
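As an illustration of the idea, here is a minimal sketch of affine 8-bit quantization in plain Python. It assumes a single shared scale and zero point for the whole list of weights; production toolchains typically quantize per tensor or per channel and calibrate on real data. The function names are hypothetical.

```python
def quantize_int8(values):
    """Map floats to int8 codes using one shared scale and zero point."""
    lo, hi = min(values), max(values)
    lo, hi = min(lo, 0.0), max(hi, 0.0)      # keep 0.0 inside the range
    scale = (hi - lo) / 255 or 1.0           # fall back to 1.0 for all-zero input
    zero_point = round(-128 - lo / scale)    # shifts codes into [-128, 127]
    codes = [max(-128, min(127, round(v / scale) + zero_point)) for v in values]
    return codes, scale, zero_point


def dequantize(codes, scale, zero_point):
    """Recover approximate floats from int8 codes."""
    return [(c - zero_point) * scale for c in codes]


weights = [-1.0, -0.5, 0.0, 0.25, 1.0]       # hypothetical float32 weights
codes, scale, zero_point = quantize_int8(weights)
restored = dequantize(codes, scale, zero_point)

# Each float32 weight takes 4 bytes; each int8 code takes 1 byte.
print("size reduction:", (len(weights) * 4) // len(codes), "x")
print("max error:", max(abs(a - b) for a, b in zip(weights, restored)))
```

Going from 32-bit floats to 8-bit integers gives the 4x end of the range; 4-bit schemes reach 8x at the cost of a coarser grid.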

Post-training quantization has advanced significantly. Techniques like SmoothQuant and OmniQuant now enable large language models to run on edge devices with minimal accuracy loss. This addresses one of the biggest deployment barriers: bringing billion-parameter models to resource-constrained hardware.

Pruning removes unnecessary neural network connections. Knowledge distillation transfers learning from large models to compact ones. Recent approaches like SparseGPT enable aggressive one-shot pruning of massive models. These techniques make enterprise-grade AI practical on resource-constrained hardware.
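Both ideas can be sketched in a few lines. The example below assumes simple magnitude pruning (zeroing the smallest weights), which is a basic baseline and not the SparseGPT algorithm, and a standard temperature-scaled distillation loss. All names and values are illustrative.

```python
import math


def magnitude_prune(weights, sparsity):
    """Zero out the given fraction of weights with the smallest magnitude."""
    if not 0.0 <= sparsity < 1.0:
        raise ValueError("sparsity must be in [0, 1)")
    n_prune = int(len(weights) * sparsity)
    # Indices ordered from smallest to largest absolute value.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    to_zero = set(order[:n_prune])
    return [0.0 if i in to_zero else w for i, w in enumerate(weights)]


def softmax(logits, temperature=1.0):
    """Numerically stable softmax with a temperature."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL divergence between softened teacher and student outputs, times T^2."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2


pruned = magnitude_prune([0.9, -0.05, 0.4, 0.01, -0.7, 0.02], sparsity=0.5)
print(pruned)  # [0.9, 0.0, 0.4, 0.0, -0.7, 0.0]
print(distillation_loss([2.0, 0.5, 0.1], [3.0, 0.2, 0.1]))
```

In practice the pruned model is usually fine-tuned afterwards, and the distillation loss is combined with the ordinary training loss on labeled data.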

Small language models (SLMs) designed for edge deployment are gaining traction. They deliver 80-90% of large model capabilities while running entirely on-device. Such model optimization approaches reflect current trends in edge AI, which prioritize practical deployment that balances performance with hardware constraints. Applications for model optimization techniques include:

Quantization (currently deployed)

Smartphones: Voice assistants like Siri process natural language requests locally using quantized models. The original model might be 400MB; the quantized version runs in 100MB while maintaining accuracy.

Autonomous vehicles: Object detection models identify pedestrians and traffic signs in real-time. Quantization enables processing 30+ camera feeds simultaneously on vehicle hardware without cloud connectivity.

Medical devices: Portable diagnostic equipment analyzes X-rays or ECG readings on-site. A quantized model runs on a tablet-sized device instead of requiring hospital servers, enabling rural clinic deployments.

Factory floor: Defect detection cameras process thousands of products per minute. Quantization allows 10+ inspection stations to run independently without overloading edge gateways.

Post-training quantization for LLMs (emerging)

Customer service kiosks: Airport or retail kiosks answer complex questions using locally-running language models. No personal data leaves the device, and responses work during internet outages.

Legal/medical documentation: Hospitals use edge-deployed LLMs to summarize patient notes or flag potential drug interactions, keeping sensitive health data on-premises while meeting regulatory requirements.

Manufacturing quality control: Technicians describe defects in natural language to edge devices that classify issues and recommend solutions, all processed locally to protect proprietary production data.

Pruning and knowledge distillation (currently deployed)

Wearable devices: Fitness trackers identify workout types (running, cycling, swimming) using pruned models small enough to run for days on tiny batteries.

Drones: Agricultural drones identify crop diseases mid-flight using distilled computer vision models. The "teacher" model trained on thousands of GPUs gets compressed into a model running on drone processors.

Industrial robots: Collaborative robots (cobots) use pruned models for real-time obstacle avoidance and task adaptation. The smaller model enables 10ms response times critical for human safety.

Small Language Models (early adoption)

Offline translation devices: Handheld translators for travelers process 50+ languages entirely on-device without internet, using SLMs optimized for conversation.

Smart manufacturing assistants: Factory workers query equipment manuals, troubleshooting guides, and safety procedures through voice interfaces that work in areas with no connectivity or where data security prohibits cloud access.

Healthcare scribing: Doctors dictate patient notes that get structured and formatted locally on tablets during examinations, eliminating transcription delays and protecting patient privacy.

Field service tools: Technicians repairing equipment in remote oil fields or cell towers use devices with embedded SLMs that provide repair guidance, parts identification, and troubleshooting, all without connectivity.

Quantization and pruning work today. Companies are already seeing returns in their factories and hospitals. Post-training quantization for large language models is moving from labs to real deployments this year. Small language models are still maturing. Expect them to be ready for widespread use in about a year.

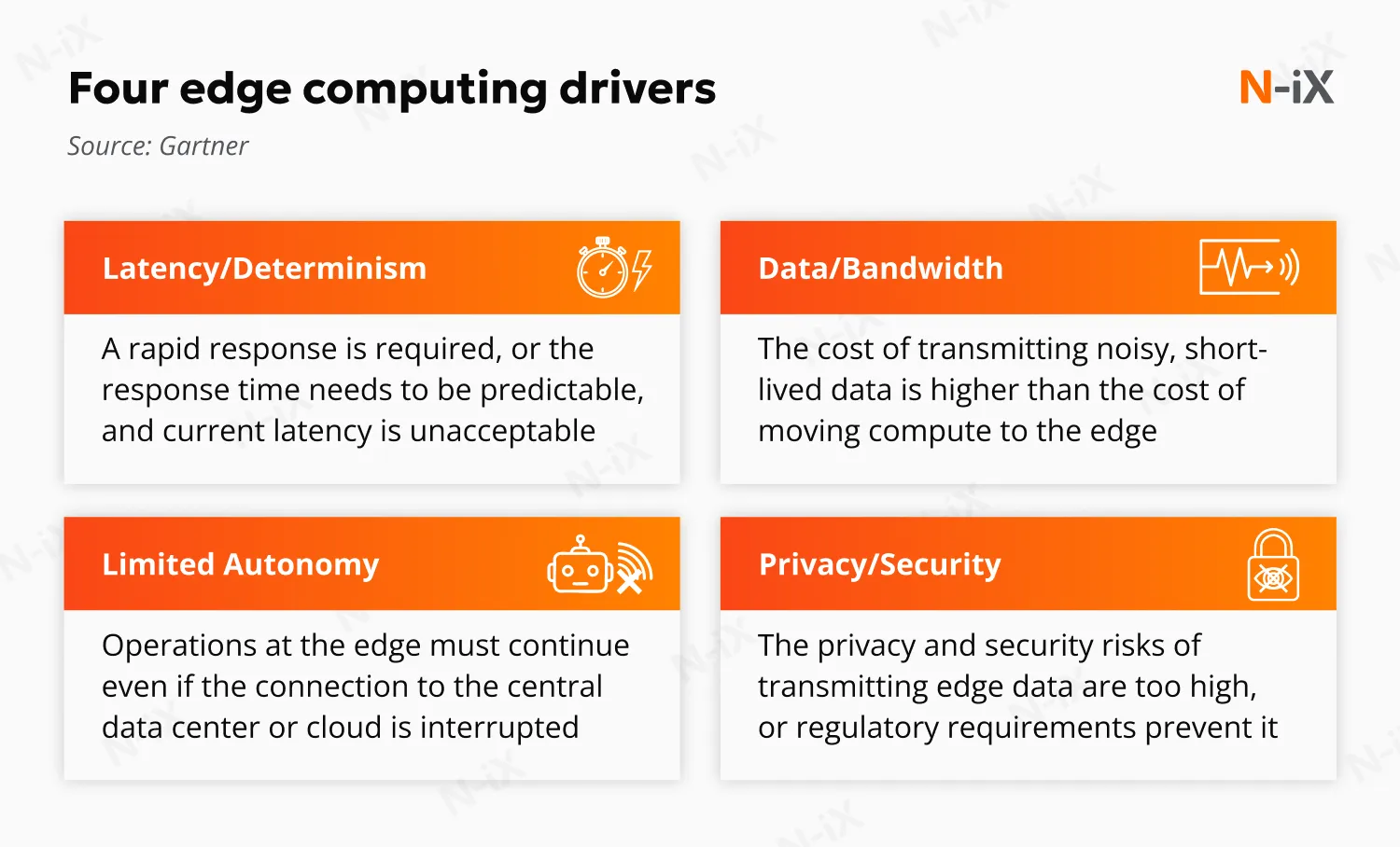

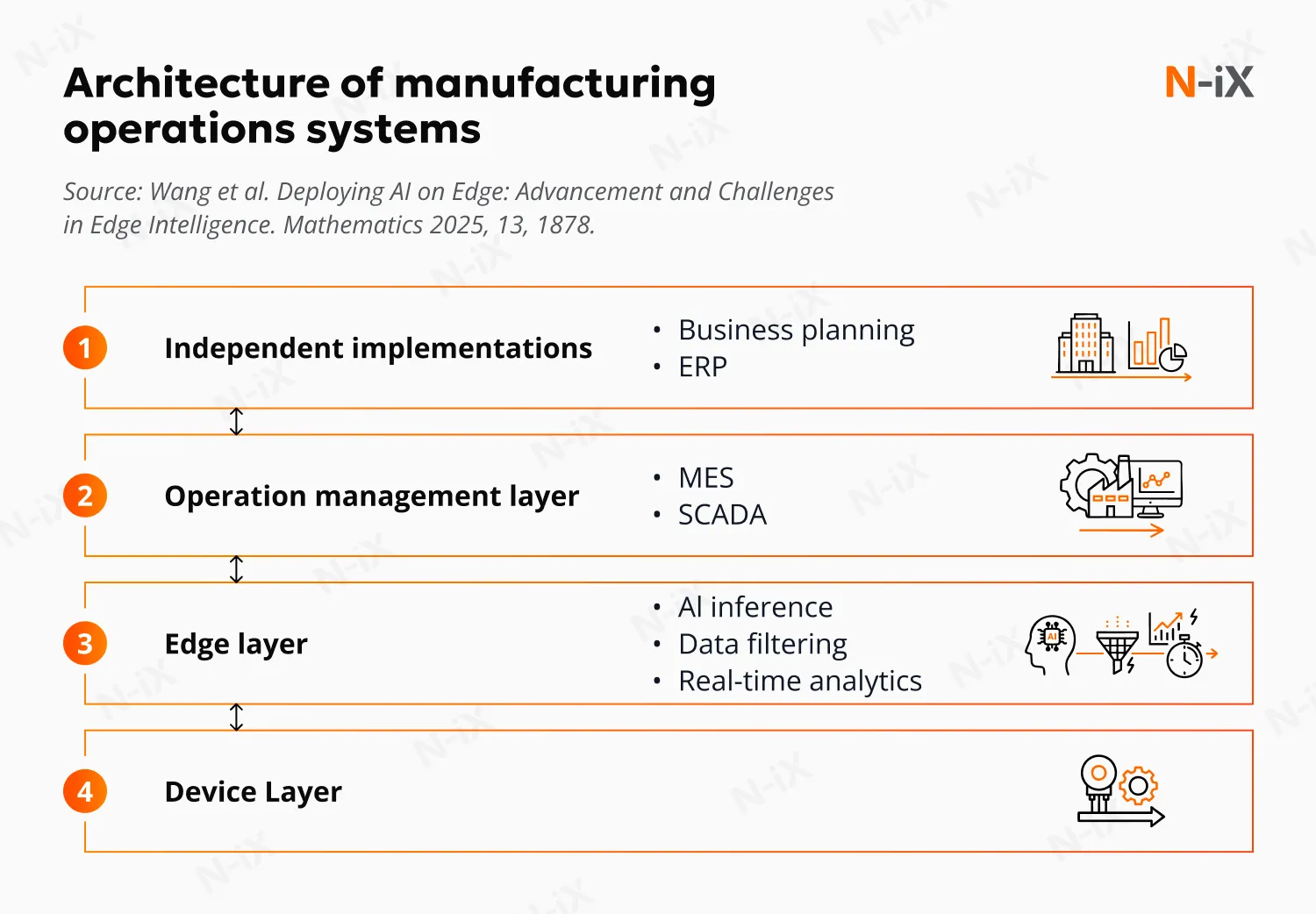

3. Hybrid edge-cloud architectures

Among emerging edge AI trends, hybrid architectures are proving that the future isn't purely edge or purely cloud; it's intelligent distribution. Organizations are splitting AI workloads strategically. Simple, frequent decisions happen at the edge. Complex, infrequent analysis runs in the cloud. Research suggests no single approach optimizes every metric, such as latency, cost, and privacy. The key is matching techniques to your specific constraints.
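One common way to split workloads is to let the on-device model decide the confident cases and escalate the rest. The sketch below assumes the edge model reports a confidence score between 0 and 1; the `Prediction` type, the `route` function, and the 0.8 threshold are hypothetical choices for illustration.

```python
from dataclasses import dataclass


@dataclass
class Prediction:
    label: str
    confidence: float  # in [0, 1], as reported by the on-device model


def route(prediction: Prediction, threshold: float = 0.8) -> str:
    """Decide where the final decision is made: 'edge' or 'cloud'."""
    if not 0.0 <= threshold <= 1.0:
        raise ValueError("threshold must be in [0, 1]")
    return "edge" if prediction.confidence >= threshold else "cloud"


# Hypothetical readings from an inspection camera.
for p in [Prediction("ok", 0.97), Prediction("scratch", 0.55)]:
    print(p.label, "->", route(p))
```

Raising the threshold sends more traffic to the cloud and lowers the risk of a wrong local decision; lowering it does the opposite. The right value depends on your latency and bandwidth budget.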

Federated learning lets you train models across distributed edge devices without centralizing sensitive data. Your devices learn collectively while data never leaves local premises. Multiple manufacturing plants can collaboratively improve AI models without sharing proprietary production data. This solves both compliance requirements and competitive concerns.
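The core aggregation step can be sketched as a sample-weighted average of each site's model weights, in the style of federated averaging (FedAvg). This is a simplified illustration: it assumes every client sends a flat list of weights of equal length, and it omits local training, communication, and security. The plant names and numbers are made up.

```python
def federated_average(client_updates):
    """Combine client weights into one model, weighted by local sample count.

    client_updates: list of (weights, n_samples) tuples, one per client.
    """
    total = sum(n for _, n in client_updates)
    if total <= 0:
        raise ValueError("at least one client must contribute samples")
    dim = len(client_updates[0][0])
    averaged = [0.0] * dim
    for weights, n in client_updates:
        if len(weights) != dim:
            raise ValueError("all clients must send weights of the same length")
        for i, w in enumerate(weights):
            averaged[i] += w * n / total
    return averaged


# Two hypothetical plants: only weights and sample counts leave each site.
plant_a = ([1.0, 2.0], 100)
plant_b = ([3.0, 4.0], 300)
print(federated_average([plant_a, plant_b]))  # [2.5, 3.5]
```

The raw production data never appears in this exchange; the server sees only model parameters and how many samples each site trained on.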

Split inference divides model execution between the edge and the cloud. Early layers process locally for speed and privacy. Final layers leverage cloud resources when needed. This flexibility optimizes for both performance and cost. Hybrid deployment patterns represent critical edge AI technology trends that address real-world infrastructure and privacy challenges. Companies use hybrid edge-cloud architectures in several ways:

Strategic workload distribution (currently deployed)

Retail chains: Store cameras detect shoplifting attempts locally in real-time (edge). Anonymized shopping pattern analysis across all stores runs in the cloud monthly to optimize layouts.

Hospitals: Patient monitors detect cardiac irregularities instantly at the bedside (edge). Long-term trend analysis comparing thousands of patients to predict disease progression happens in the cloud.

Manufacturing networks: Individual machines detect equipment failures immediately (edge). Corporate headquarters analyzes fleet-wide degradation patterns across all factories to optimize maintenance schedules (cloud).

Autonomous vehicle fleets: Each car makes split-second driving decisions locally (edge). The fleet collectively learns from millions of driving scenarios through cloud aggregation, then pushes improved models back to vehicles.

Federated learning (active deployment)

Multi-hospital networks: Five hospitals collaboratively train a cancer detection model. Each hospital's patient data never leaves its premises. The model learns from all patients while satisfying HIPAA requirements. No hospital sees another's data, yet all benefit from collective intelligence.

Franchise manufacturing: A pharmaceutical company operates plants in the US, EU, and Asia. Each plant trains quality control models on local production data. The models improve collectively without sharing proprietary formulations or process details across borders, addressing both IP protection and data sovereignty regulations.

Financial institutions: Banks collaboratively detect fraud patterns. Customer transaction data stays within each bank's infrastructure. The shared fraud detection model improves for everyone without exposing sensitive account information or competitive intelligence.

Retail analytics: Store chains train inventory prediction models across locations. Sales patterns and customer behavior data remain local. The collective model captures regional trends without corporate headquarters accessing individual store transactions.

Split inference (emerging deployment)

Smart city cameras: Initial object detection (person, vehicle, bicycle) runs on camera chips for immediate decisions like traffic light timing. Detailed behavioral analysis for urban planning runs in the cloud when bandwidth permits.

Industrial inspection: A factory camera detects basic defects locally. Complex metallurgical analysis requiring massive reference databases happens in the cloud. Critical decisions happen in milliseconds; detailed forensics happen later.

Healthcare imaging: A portable ultrasound device performs initial screening for abnormalities on-device, flagging urgent cases immediately. Detailed diagnostic analysis comparing against millions of historical cases runs in the cloud, delivering comprehensive reports within hours.

Voice assistants: "Turn on the lights" processes entirely on your phone (edge). "What's the weather forecast for my trip to Tokyo next month?" sends final processing layers to the cloud for current data and complex reasoning.
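The split inference pattern behind these examples can be sketched with a toy two-stage network. The early layers run on the device, only the intermediate features are serialized, and the final layers run remotely. The weights below are hypothetical placeholders, and the JSON string stands in for whatever transport a real deployment would use.

```python
import json


def dense(x, weights, bias):
    """Fully connected layer: one output per row of weights."""
    return [sum(xi * wi for xi, wi in zip(x, row)) + b
            for row, b in zip(weights, bias)]


def relu(x):
    return [max(0.0, v) for v in x]


# Hypothetical toy parameters; a real model would load trained weights.
EDGE_W = [[0.5, -0.2, 0.1], [0.3, 0.8, -0.5]]
EDGE_B = [0.0, 0.1]
CLOUD_W = [[1.0, -1.0]]
CLOUD_B = [0.2]


def edge_forward(x):
    """Early layers: run on the device, next to the raw input."""
    return relu(dense(x, EDGE_W, EDGE_B))


def cloud_forward(features):
    """Final layers: run remotely on the intermediate features."""
    return dense(features, CLOUD_W, CLOUD_B)


raw_input = [1.0, 2.0, 3.0]                    # stays on the device
payload = json.dumps(edge_forward(raw_input))  # only features cross the network
result = cloud_forward(json.loads(payload))
print(result)
```

The split point is a design choice: cutting later keeps more computation local and sends smaller, more abstract features, while cutting earlier offloads more work to the cloud.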

4. Industry-specific applications

Manufacturing leads edge AI adoption, with measurable returns. Predictive maintenance systems monitor equipment continuously and flag anomalies within milliseconds, before failures occur. Real deployments report 25% reductions in unplanned downtime. Production lines stay running, and downtime costs fall.
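A simple way to picture on-device anomaly detection is a rolling z-score over recent sensor readings. The sketch below is one basic approach, not a description of any particular vendor's system; the class name, window size, warm-up length, and threshold are all assumptions.

```python
import statistics
from collections import deque


class RollingAnomalyDetector:
    """Flag readings that deviate strongly from a recent window of values."""

    def __init__(self, window=50, z_threshold=3.0, warmup=10):
        self.readings = deque(maxlen=window)
        self.z_threshold = z_threshold
        self.warmup = warmup

    def update(self, value):
        """Return True if value is anomalous relative to the recent window."""
        is_anomaly = False
        if len(self.readings) >= self.warmup:
            mean = statistics.fmean(self.readings)
            std = statistics.pstdev(self.readings)
            if std > 0 and abs(value - mean) / std > self.z_threshold:
                is_anomaly = True
        if not is_anomaly:
            # Keep anomalies out of the baseline so they do not mask later ones.
            self.readings.append(value)
        return is_anomaly


detector = RollingAnomalyDetector()
normal = [1.0, 1.1] * 10                 # hypothetical vibration amplitudes
flags = [detector.update(v) for v in normal]
print(any(flags))                        # False
print(detector.update(5.0))              # True
```

Because the detector keeps only a fixed-size window and uses basic statistics, it fits comfortably on a microcontroller-class device.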

Automated visual inspection systems leverage edge-deployed models to identify defects on production lines. Quality improves by up to 30% in implemented cases. The key advantage: immediate feedback without cloud dependency ensures consistent product quality.

Healthcare is deploying edge AI in diagnostic devices and patient monitoring. Wearables analyze vital signs in real-time. Medical imaging equipment provides instant preliminary analysis. Clinicians get faster insights without data ever leaving the facility. This addresses both HIPAA compliance and real-time care requirements.

Automotive companies process sensor data from cameras, lidar, and radar at the edge. Autonomous driving features require split-second decisions. Cloud latency isn't an option. Edge AI makes real-time vehicle intelligence viable while locally handling terabytes of sensor data.

Retail is transforming with edge-powered smart stores. Computer vision tracks inventory continuously. Checkout becomes frictionless. Loss prevention improves through real-time anomaly detection.

5. Privacy and security focus

Data privacy regulations are tightening globally. Edge AI keeps sensitive data on your premises by default. You satisfy GDPR, HIPAA, and regional requirements more easily. Advanced security mechanisms are among the top-priority edge AI trends as organizations balance innovation with protection.

Processing locally reduces your attack surface. Data doesn't traverse networks where it can be intercepted. Fewer data transfers mean fewer vulnerability points.

However, edge devices face unique security challenges. Unlike centralized cloud servers, edge nodes are physically accessible and often lack robust security protocols. Recent attacks have exploited sensor fusion in autonomous vehicles and enabled model inversion in financial systems. Edge intelligence models are particularly vulnerable due to their simpler structures.

Federated learning combined with differential privacy offers promising defense mechanisms. Secure aggregation protocols help protect distributed training. Edge devices themselves need security hardening. Encrypted model storage and secure boot processes are becoming standard. Your AI models represent intellectual property worth protecting. Adversarially robust compression and tamper-resistant chip designs are emerging as critical requirements.
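One building block of differentially private federated learning is clipping each device's model update and adding calibrated noise before it is shared. The sketch below shows only that step, assuming Gaussian noise scaled to the clipping norm. It does not compute a privacy budget (epsilon), which a real deployment would need to track. Function and parameter names are illustrative.

```python
import math
import random


def clip_l2(update, max_norm):
    """Scale the update down so its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(v * v for v in update))
    if norm <= max_norm or norm == 0:
        return list(update)
    factor = max_norm / norm
    return [v * factor for v in update]


def privatize(update, max_norm, noise_multiplier, rng):
    """Clip an update, then add Gaussian noise proportional to max_norm."""
    clipped = clip_l2(update, max_norm)
    sigma = noise_multiplier * max_norm
    return [v + rng.gauss(0.0, sigma) for v in clipped]


rng = random.Random(42)                      # seeded only for repeatability
local_update = [3.0, 4.0]                    # hypothetical weight delta
print(clip_l2(local_update, max_norm=1.0))   # direction kept, norm capped at 1
print(privatize(local_update, 1.0, 0.5, rng))
```

Clipping bounds how much any single device can influence the shared model, and the noise hides individual contributions once many updates are averaged.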

6. 5G and edge AI synergy

5G networks enable new edge AI architectures. Ultra-low latency supports distributed intelligence across multiple edge nodes. Bandwidth capacity handles dense deployments of AI-enabled devices.

Multi-access edge computing (MEC) brings cloud capabilities closer to devices. Processing happens at cell towers rather than distant data centers. You get cloud-like resources with edge-like latency.

This combination enables use cases that weren't previously feasible. Smart city applications coordinate across thousands of sensors in real-time. Industrial IoT deployments scale without infrastructure constraints. These connectivity advances are among the most impactful edge AI trends, reshaping what's possible at the network edge. Looking ahead, 6G networks are expected to integrate AI capabilities directly into the network architecture, enabling even more sophisticated distributed processing with terahertz frequencies and ultra-reliable connectivity.

Moving forward

Edge AI has moved beyond proof-of-concept and is delivering measurable results right now across manufacturing, healthcare, automotive, and retail. The edge AI trends outlined here represent more than incremental improvements: they're reshaping how organizations distribute intelligence. The question isn't whether edge AI will transform your operations. It's how quickly you can identify which workloads belong at the edge and start capturing the competitive advantage.
