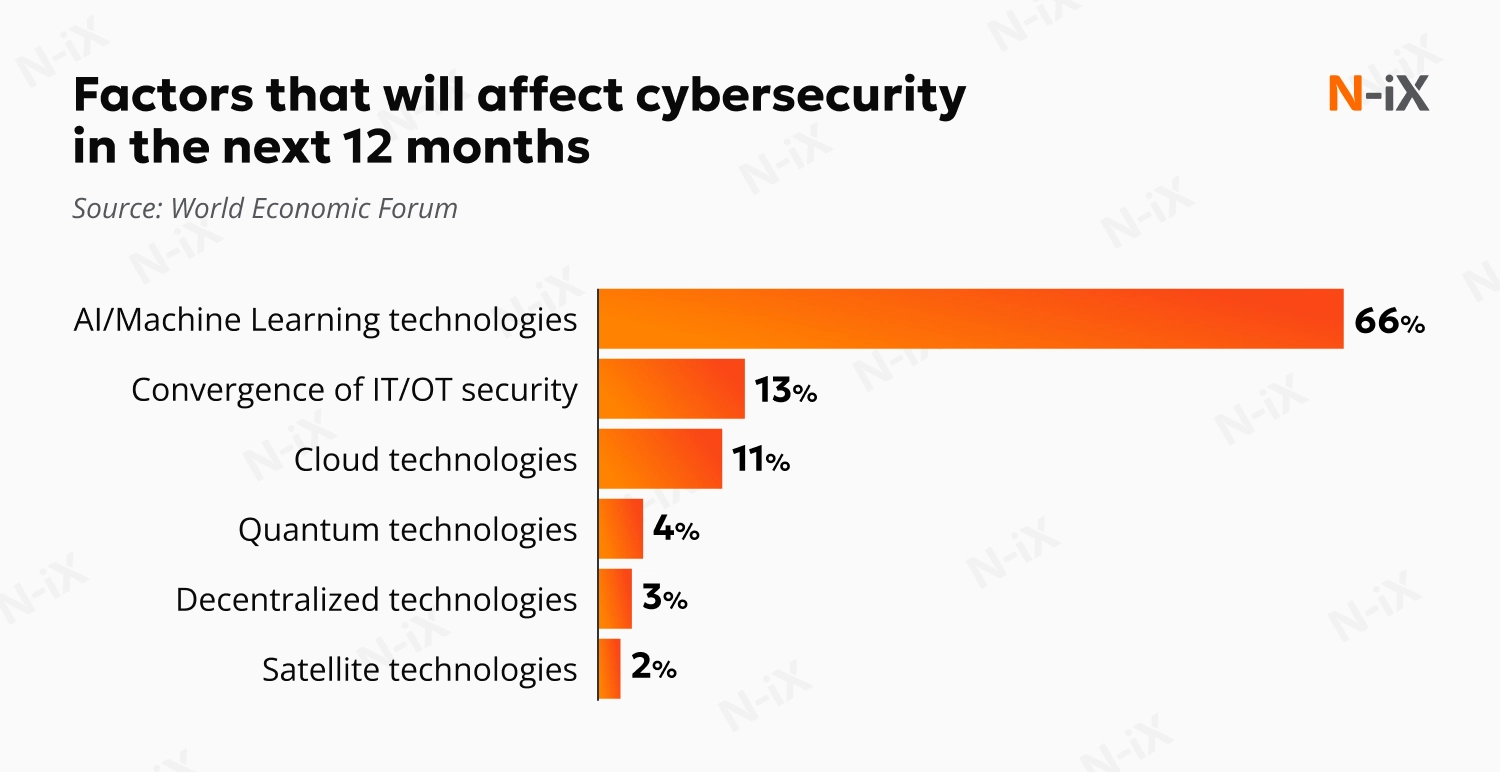

Artificial Intelligence is transforming how businesses operate, but it also creates new openings for cyber threats. The World Economic Forum (WEF) reports that 66% of companies expect AI and Machine Learning to significantly impact cybersecurity over the next year. Yet only 37% have processes to evaluate the security of AI tools before deploying them, and just 14% feel equipped with the necessary cybersecurity expertise.

AI security posture management is emerging as a solution to these gaps. In this article, we’ll examine how it helps organizations counter the threats of unmanaged AI, protect critical business assets, and reduce compliance risks.

What is AI security posture management?

AI security posture management (AI-SPM) is an approach designed to safeguard Artificial Intelligence models, tools, and data. It combines practices and technologies to address AI-specific risks, helping organizations ensure the integrity, compliance, and resilience of their AI ecosystems. Whether you train your own AI models or rely on third-party solutions, it provides the framework to keep your applications, pipelines, and data secure.

At its foundation, this approach follows the same principles as traditional security posture management, including continuous monitoring, risk assessment, and proactive defense.

So, do you need additional AI-focused frameworks if you’re already using posture management systems? Let’s review several existing approaches and compare them to security posture management for AI.

AI-SPM vs CSPM, DSPM, and ASPM

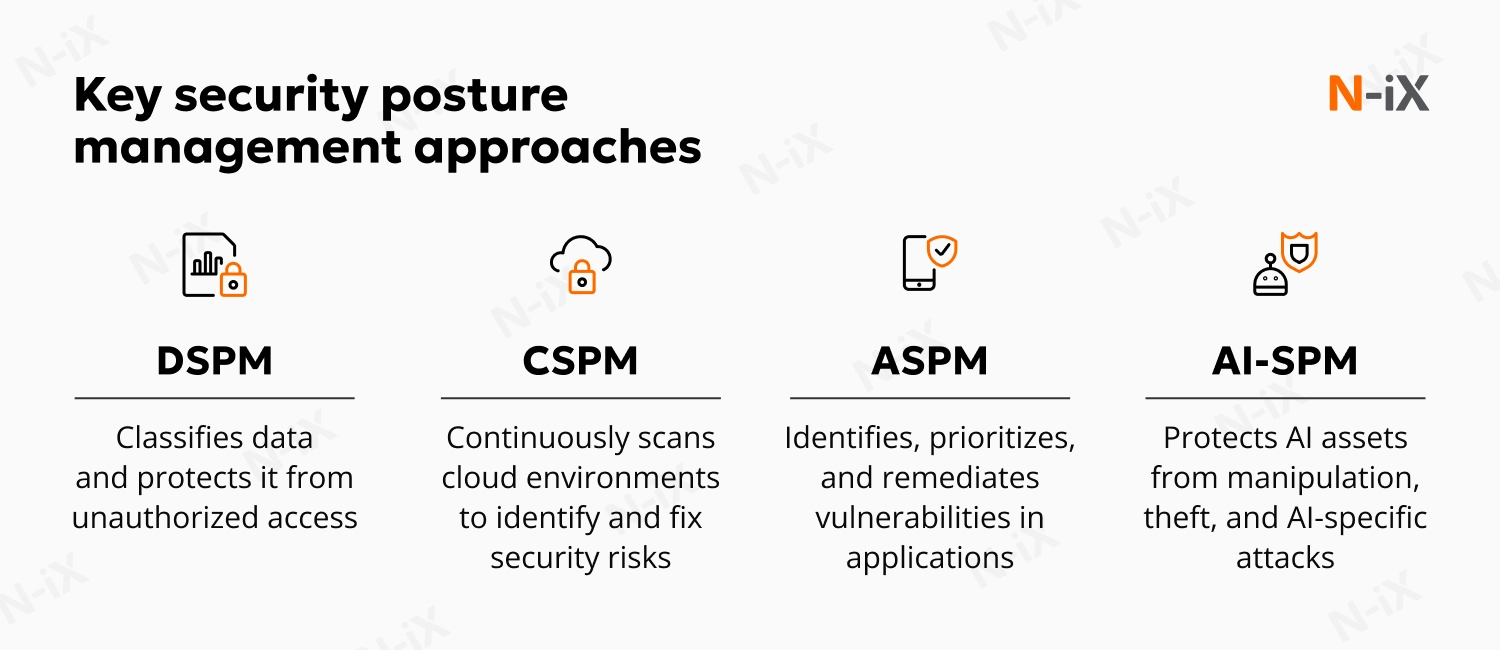

Data security posture management (DSPM) helps discover, classify, and monitor data assets across the organization. AI-SPM builds on this foundation by protecting not just the data but the AI models processing it.

Cloud security posture management (CSPM) focuses on cloud infrastructure, identifying misconfigurations and compliance issues in services like AWS, Azure, and GCP. While essential for cloud security, it doesn’t inspect AI pipelines or monitor model interactions.

Application security posture management (ASPM) ensures the security of applications throughout their development lifecycle. It provides a comprehensive approach to application risk management, but it’s not equipped to detect AI-specific threats like model poisoning or prompt injection.

Our experts emphasize that AI posture management is still an emerging but urgent field. As adoption accelerates, attack methods evolve to target AI systems, introducing risks that traditional approaches are not designed to mitigate.

Why is AI-SPM crucial for businesses?

Artificial Intelligence posture management is a methodology purpose-built for identifying and mitigating vulnerabilities in AI solutions. Without it, organizations leave their critical AI assets exposed to new attacks that can manipulate model behavior, steal intellectual property, or compromise decision-making processes. Here are four key reasons businesses need a strong AI security posture:

1. Ensuring privacy and data security is a must

Privacy and data security are among the top AI concerns for businesses adopting Generative AI, copilots, and chatbots. The WEF survey shows that 37% of executives worry about identity theft, 22% about AI-caused personal data leaks, and 20% about exposure of sensitive corporate information.

These risks are amplified because AI systems depend on large volumes of training data, making them attractive targets for attackers. This data often includes confidential, sensitive, or proprietary information, as well as domain-specific knowledge. If an AI model, database, or API is compromised, it can expose sensitive data, violate compliance, and put your entire business at risk.

2. Maintaining model integrity against manipulation is difficult

The accuracy and reliability of an AI model depend on the quality of its training data. Malicious actors can manipulate this data to compromise a model’s integrity, leading to incorrect, biased, or harmful outcomes.

Several common attack vectors include:

- Data poisoning: Attackers insert malicious data into training datasets, teaching models to adopt harmful patterns or generate unsafe outputs (such as code).

- Misinformation and “hallucinations”: Manipulated or corrupted models can generate false, misleading, or fabricated results that can impact decision-making and undermine the trustworthiness of the AI solution.

- Adversarial attacks: Subtle, often invisible changes to inputs can trick AI into making wrong predictions or decisions. For example, slightly altering an image can cause an AI system to misclassify it entirely.

- Prompt injection and jailbreaking: Specifically crafted inputs can bypass safeguards in large language models (LLMs), forcing them to ignore rules or reveal sensitive information.

3. Protecting proprietary AI models from theft is crucial

Attackers can exploit an AI model’s outputs to replicate its behavior or recover the sensitive data it was trained on. Our cybersecurity experts highlight two key methods that can be used to compromise your intellectual property:

- Model extraction: By sending repeated queries and analyzing outputs, attackers can reconstruct a copy of the original model. This form of intellectual property theft gives adversaries access to the same functionality without investing in development.

- Model inversion: In this type of attack, a threat actor works backward from a model’s predictions to reveal details about its training data. This can expose confidential information or enable the attacker to reverse-engineer the model itself.

4. The spread of unmanaged AI introduces governance concerns

Easy access to AI tools means employees may use them without official approval, a practice known as shadow AI. This creates blind spots for IT and security teams, as third-party tools may process sensitive data without safeguards or official oversight. It also complicates AI data governance, since organizations lose track of where, how, and what data is used.

Shadow AI also exists internally when teams deploy models without proper documentation, governance, or security controls. Security leaders often lack visibility into all active AI assets, which expands the organization’s attack surface. Unmanaged models can increase the risk of compliance violations, data leakage, unauthorized access, and other security incidents.

Security posture management for AI helps organizations regain visibility and enforce policies across their AI ecosystem. It provides a structured way to safeguard Artificial Intelligence tools while meeting governance standards.

8 best practices for effective AI security posture management

AI posture management is not just about identifying threats but about applying practical measures to counter them. So, how can you ensure your systems remain resilient and compliant? Explore these eight key tips and practices.

1. AI inventory and discovery

Our experts stress that effective AI security starts with knowing what you have. AI-SPM solutions can create a comprehensive inventory of your AI assets. This includes not just AI models but also their associated data sources, APIs, cloud resources, libraries, and pipelines. This visibility helps detect shadow AI and establishes a baseline for monitoring changes and preventing unauthorized AI implementations.
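To make the idea concrete, here is a minimal sketch of how an inventory can be compared against what is actually running in order to surface shadow AI. The `AIAsset` fields, the asset names, and the `discovered` set are illustrative assumptions, not the data model of any particular AI-SPM product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AIAsset:
    """One inventory entry (all field names are hypothetical)."""
    name: str
    kind: str       # e.g. "model", "dataset", "api", "pipeline"
    owner: str      # team accountable for the asset
    approved: bool  # whether security has reviewed and approved it


def find_shadow_ai(discovered: set[str], inventory: list[AIAsset]) -> set[str]:
    """Return discovered asset names that are either missing from the
    inventory or listed there without approval."""
    approved = {asset.name for asset in inventory if asset.approved}
    return discovered - approved


inventory = [
    AIAsset("support-chatbot", "model", "cx-team", approved=True),
    AIAsset("churn-predictor", "model", "data-science", approved=True),
    AIAsset("resume-screener", "model", "hr-team", approved=False),
]

# Assumed output of a network or cloud scan; the names are made up.
discovered = {"support-chatbot", "resume-screener", "sales-gpt-plugin"}

shadow = find_shadow_ai(discovered, inventory)
print(sorted(shadow))
```

In this sketch, anything seen in the environment that is not an approved inventory entry is treated as shadow AI, which also gives a simple baseline for spotting new, unreviewed deployments.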

2. Model and pipeline monitoring

Posture management solutions for AI ensure continuous oversight of models and Machine Learning pipelines in production. Use tools that track access attempts, detect misuse, and flag anomalies such as prompt injections or data poisoning. By establishing a baseline of normal behavior, these systems help protect AI against breaches and manipulation.

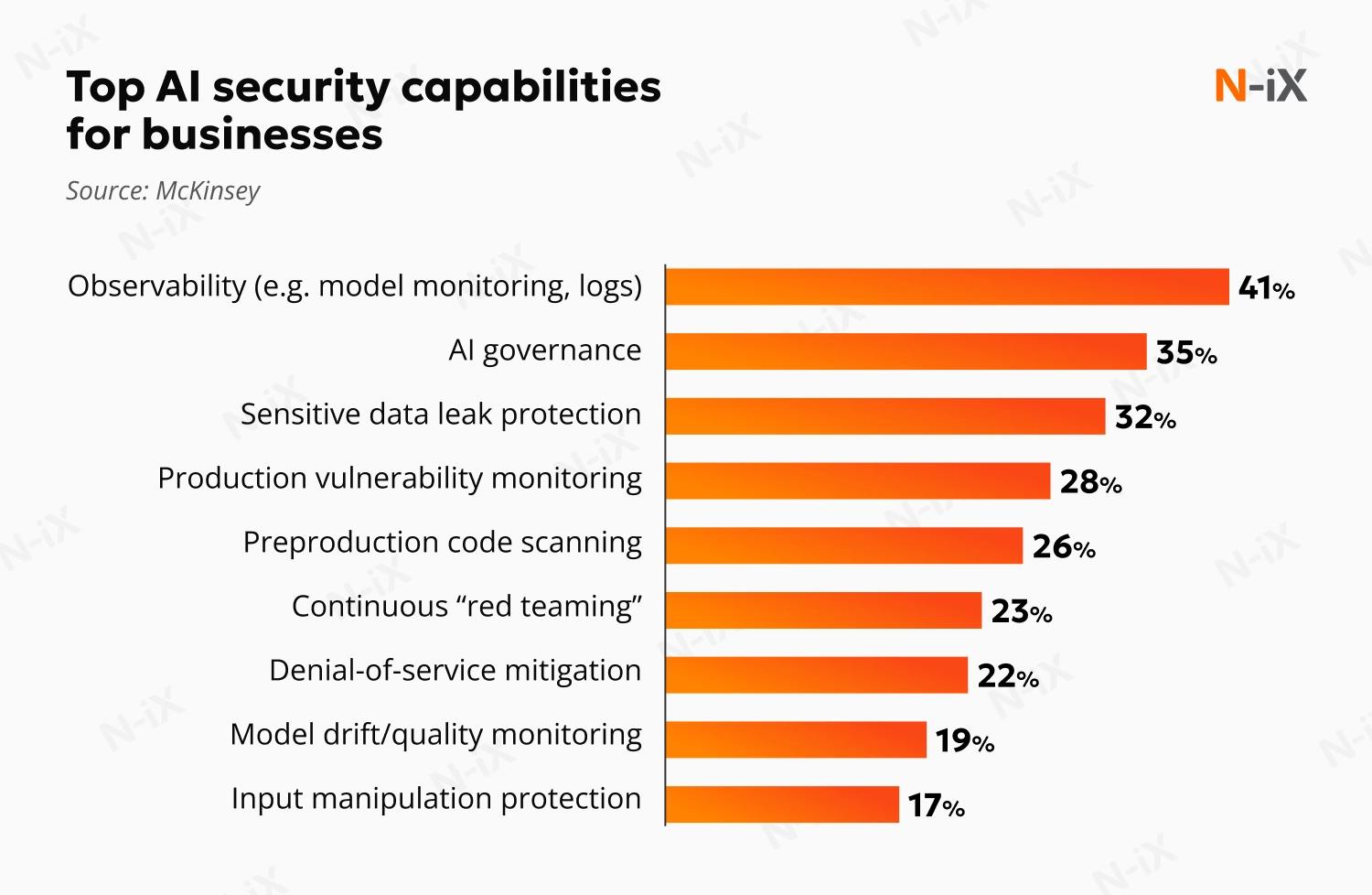

McKinsey reports that 41% of businesses see monitoring and logging as top AI security posture management priorities. Observability across MLOps pipelines makes it possible to identify suspicious activity in real time and safeguard intellectual property. This proactive monitoring strengthens resilience and helps organizations maintain trust in their AI applications.
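As a rough illustration of baseline-driven monitoring, the sketch below flags a metric that deviates sharply from its historical values. The z-score rule, the threshold of 3.0, and the requests-per-minute numbers are assumptions chosen for the example; production systems typically use richer signals and models.

```python
from statistics import mean, stdev


def is_anomalous(baseline: list[float], observed: float, threshold: float = 3.0) -> bool:
    """Flag `observed` when it lies more than `threshold` sample standard
    deviations away from the mean of the baseline window."""
    if len(baseline) < 2:
        raise ValueError("need at least two baseline samples")
    mu = mean(baseline)
    sigma = stdev(baseline)
    if sigma == 0:
        # A perfectly flat baseline: any change at all is a deviation.
        return observed != mu
    return abs(observed - mu) / sigma > threshold


# Hypothetical requests per minute sent to a model endpoint by one API key.
baseline_rpm = [42, 38, 45, 40, 41, 39, 44, 43]

typical = is_anomalous(baseline_rpm, 41)
burst = is_anomalous(baseline_rpm, 400)
print(typical, burst)
```

A sudden burst of queries from a single key is one of the signals that may indicate misuse, such as an attempt to extract a model through repeated requests.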

3. Partnering with a cybersecurity consultant

With expert guidance, organizations gain the tools and the confidence to manage AI risks effectively. Consider partnering with a reliable cybersecurity and AI consulting provider like N-iX. An experienced consultant can help you identify security gaps, implement the right posture management controls, and tailor strategies to your business requirements.

4. Comprehensive risk management

Simply finding vulnerabilities is not enough for effective AI risk management. Implement a strong framework that evaluates risks in context, considering factors such as severity, data sensitivity, and business impact. This contextual evaluation is crucial for managing the unique attack vectors that target AI, such as data poisoning, adversarial attacks, and model theft. AI-SPM solutions also use threat intelligence to detect malicious use of AI models and generate alerts for high-priority risks, often with recommendations for rapid response.
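One simple way to evaluate risk in context is to combine the factors into a single score and sort findings by it. The sketch below assumes 1 to 5 scales and a multiplicative formula; both are illustrative choices, not an industry standard, and the findings are invented.

```python
from dataclasses import dataclass


@dataclass
class Finding:
    title: str
    severity: int          # 1 (low) to 5 (critical)
    data_sensitivity: int  # 1 (public data) to 5 (regulated data or PII)
    business_impact: int   # 1 (minor) to 5 (core business process)


def risk_score(finding: Finding) -> int:
    """Multiply the three factors (range 1 to 125), so a low value on
    any one factor pulls the overall score down."""
    return finding.severity * finding.data_sensitivity * finding.business_impact


def prioritize(findings: list[Finding]) -> list[Finding]:
    """Return findings ordered from highest to lowest contextual risk."""
    return sorted(findings, key=risk_score, reverse=True)


findings = [
    Finding("Verbose errors on internal demo model", 4, 1, 1),
    Finding("Unauthenticated endpoint on customer-facing model", 4, 5, 5),
    Finding("Unpinned dependency in training pipeline", 3, 3, 4),
]

ranked = prioritize(findings)
for f in ranked:
    print(risk_score(f), f.title)
```

Note that two findings with the same technical severity end up far apart once data sensitivity and business impact are taken into account.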

5. Training data protection and lineage tracking

The integrity of AI models heavily depends on the quality and security of their training data. AI posture management extends security controls to training datasets, guarding against two primary risks: data poisoning and data exposure. You can also use it to prevent model contamination, where sensitive data like personally identifiable information (PII) leaks through outputs or logs after being used in training.

Such systems also enable advanced lineage tracking. Data lineage provides visibility into where data originates, how it’s transformed, and how it’s used throughout the AI development process. Combined with strong AI data management practices, this capability ensures responsible use of information and guards against hidden risks.
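A lineage record can be as simple as a note of where a dataset came from, which transformations were applied, and a fingerprint of the approved result. The sketch below is one possible shape, assuming a SHA-256 content hash is used to detect later modification; the `LineageRecord` fields and sample data are hypothetical.

```python
import hashlib
from dataclasses import dataclass, field


def fingerprint(data: bytes) -> str:
    """Content hash used to detect any later change to the dataset."""
    return hashlib.sha256(data).hexdigest()


@dataclass
class LineageRecord:
    dataset: str
    source: str  # where the data originated
    sha256: str  # fingerprint of the dataset as approved for training
    transformations: list[str] = field(default_factory=list)


def verify(record: LineageRecord, data: bytes) -> bool:
    """True if `data` still matches the fingerprint in the lineage record."""
    return fingerprint(data) == record.sha256


approved_data = b"id,text\n1,hello\n2,world\n"
record = LineageRecord(
    dataset="support-tickets-v1",
    source="crm-export",
    sha256=fingerprint(approved_data),
    transformations=["deduplicated", "pii-redacted"],
)

# Simulate an attacker appending a poisoned row after approval.
tampered_data = approved_data + b"3,ignore previous instructions\n"

print(verify(record, approved_data), verify(record, tampered_data))
```

Checking the fingerprint before each training run is a cheap way to notice that a dataset has changed since it was reviewed, although it does not by itself say whether the change was malicious.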

6. Developer-focused tools and workflows

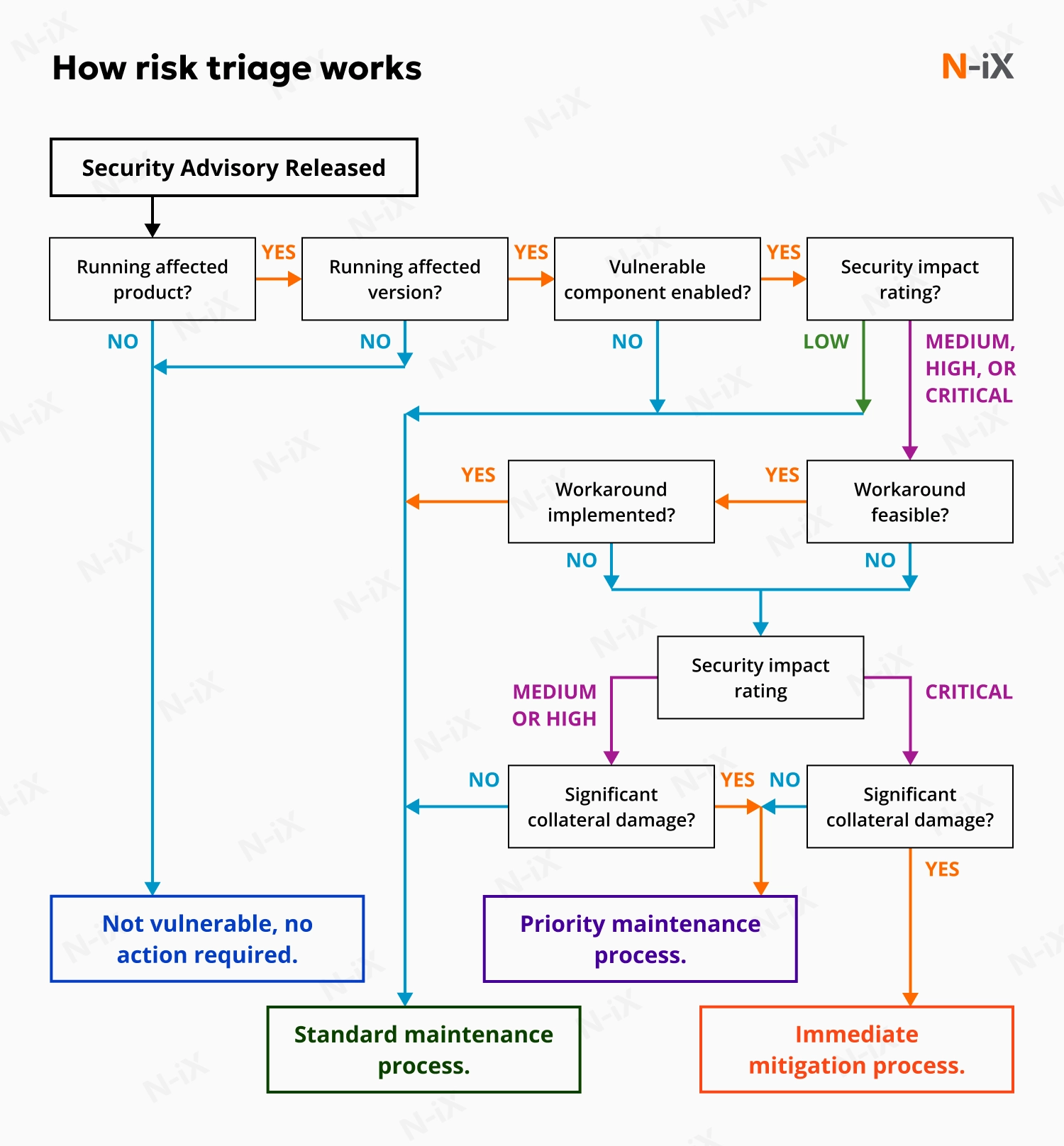

To be effective, AI security must be integrated into the development lifecycle. Choose solutions that provide developer-friendly tools and workflows. For instance, one key capability is risk triaging, which contextualizes and prioritizes risks within the development pipeline. It subsequently routes alerts to the specific engineers and data scientists responsible for the code or model. This approach gives developers a personalized view of the security issues relevant to their projects, enabling them to address vulnerabilities more efficiently.
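The routing step of risk triaging can be sketched as a lookup from asset to owner, followed by a per-owner sort. The ownership map, email addresses, alert fields, and fallback queue below are all assumptions made for illustration.

```python
from collections import defaultdict

# Hypothetical map from AI asset to the engineer responsible for it.
OWNERS = {
    "fraud-model": "alice@example.com",
    "recsys-pipeline": "bob@example.com",
}
FALLBACK = "security-team@example.com"  # used when an asset has no known owner


def route_alerts(alerts: list[dict]) -> dict[str, list[dict]]:
    """Group alerts by responsible owner, highest priority first."""
    queues: dict[str, list[dict]] = defaultdict(list)
    for alert in alerts:
        owner = OWNERS.get(alert["asset"], FALLBACK)
        queues[owner].append(alert)
    for queue in queues.values():
        queue.sort(key=lambda a: a["priority"], reverse=True)
    return dict(queues)


alerts = [
    {"asset": "fraud-model", "issue": "outdated base image", "priority": 2},
    {"asset": "fraud-model", "issue": "exposed inference endpoint", "priority": 5},
    {"asset": "recsys-pipeline", "issue": "unverified training data", "priority": 4},
    {"asset": "unknown-notebook", "issue": "hard-coded API key", "priority": 5},
]

queues = route_alerts(alerts)
for owner, items in queues.items():
    print(owner, [a["issue"] for a in items])
```

Alerts for assets without a registered owner fall back to the security team, which keeps unowned (and possibly shadow) assets from being silently ignored.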

7. Compliance and governance

As AI adoption grows, so does the need to ensure your AI complies with regulations such as GDPR and aligns with frameworks such as the NIST AI Risk Management Framework. AI security posture management helps automatically map security controls to these requirements, making workflows more transparent and auditable. This provides clear visibility into compliance status and reduces the risk of legal or financial penalties.

To strengthen governance, maintain audit trails for AI assets, including their lineage, approvals, and risk classifications. This documentation is critical for proving due diligence to regulators and stakeholders. It helps minimize legal liabilities, build trust in your organization’s AI practices, and manage risks effectively.
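One way to make an audit trail tamper-evident is to chain entries together with hashes, so that editing an earlier record invalidates everything after it. The article does not prescribe this technique; the sketch below is an assumption about how such a trail could be built, with invented event fields.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder hash that precedes the first entry


def _digest(event: dict, prev_hash: str) -> str:
    payload = json.dumps({"event": event, "prev": prev_hash}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_entry(log: list[dict], event: dict) -> None:
    """Append an event, linking it to the hash of the previous entry."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    log.append({"event": event, "prev": prev_hash, "hash": _digest(event, prev_hash)})


def verify_chain(log: list[dict]) -> bool:
    """Recompute every hash; any edited or reordered entry breaks the chain."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev"] != prev_hash or entry["hash"] != _digest(entry["event"], prev_hash):
            return False
        prev_hash = entry["hash"]
    return True


audit_log: list[dict] = []
append_entry(audit_log, {"asset": "fraud-model", "action": "approved", "risk": "high"})
append_entry(audit_log, {"asset": "fraud-model", "action": "deployed", "risk": "high"})

intact = verify_chain(audit_log)
audit_log[0]["event"]["risk"] = "low"  # simulate a retroactive edit
after_edit = verify_chain(audit_log)
print(intact, after_edit)
```

A chain like this only shows that records were altered; it still needs to be stored somewhere with restricted write access to be useful as evidence.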

8. Configuration management and access control

AI posture management helps close security gaps by enforcing proper access controls and preventing misconfigurations in AI systems. It allows you to establish least-privilege policies that define which stakeholders can view or modify sensitive models and datasets. It also sets baselines for encryption, endpoints, and other settings that are automatically applied as you deploy AI models.

Posture management systems also help detect configuration drift, which occurs when an environment shifts from its approved state. Correcting drift early prevents vulnerabilities and ensures AI deployments are aligned with your organization’s security policies.
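Drift detection boils down to comparing the deployed configuration with the approved baseline. The sketch below assumes configurations are flat dictionaries; the setting names are hypothetical and real deployments usually involve nested, provider-specific settings.

```python
def detect_drift(baseline: dict, deployed: dict) -> dict:
    """Return {setting: (expected, actual)} for every setting whose deployed
    value differs from the approved baseline. A setting missing on one side
    is reported with None in that position."""
    drift = {}
    for key in baseline.keys() | deployed.keys():
        expected = baseline.get(key)
        actual = deployed.get(key)
        if expected != actual:
            drift[key] = (expected, actual)
    return drift


# Hypothetical approved baseline for a model-serving endpoint.
baseline = {
    "encryption_at_rest": True,
    "public_endpoint": False,
    "tls_min_version": "1.2",
}

# Hypothetical state observed in production.
deployed = {
    "encryption_at_rest": True,
    "public_endpoint": True,    # changed after approval
    "tls_min_version": "1.2",
    "debug_logging": True,      # setting that was never approved
}

drift = detect_drift(baseline, deployed)
for setting, (expected, actual) in sorted(drift.items()):
    print(f"{setting}: expected {expected}, found {actual}")
```

Running a comparison like this on a schedule, or on every deployment, is what allows drift to be corrected before it turns into an exploitable gap.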

How can N-iX help you strengthen your AI security posture?

Implementing comprehensive AI security posture management requires deep technical expertise and practical experience. With over 23 years in technology consulting, N-iX helps organizations protect Artificial Intelligence systems with industry-recognized methodologies and tailored strategies.

- Our cybersecurity experts work alongside over 200 data, AI, and ML engineers to protect your AI ecosystem against data poisoning, model theft, and other emerging AI-specific threats.

- N-iX has completed over 100 security projects across diverse industries, giving us practical expertise in managing AI risks.

- Our recognition as a Solutions Partner in the Microsoft AI Cloud Partner Program highlights our ability to deliver resilient AI solutions at enterprise scale.

- We comply with international standards, including ISO 27001, SOC 2, PCI DSS, and GDPR, ensuring your AI initiatives meet strict regulatory requirements and governance best practices.

- We guide organizations through every stage of their AI journey, from auditing and inventorying AI assets to implementing full-scale security posture management with continuous monitoring and ongoing support.

Ready to strengthen your defenses and build resilient AI systems? Contact N-iX today to explore how our experts can help secure your AI initiatives and deliver long-term business value.
