AI systems enhance modern security strategies by automating processes, boosting efficiency, and minimizing human error. Recent studies highlight their growing value: IBM reports that 67% of companies integrated AI into their security strategies in 2024 [1]. However, growing adoption also raises important questions about how safe and trustworthy these systems can be. According to a 2024 ISC2 report, only 28% of security specialists see AI more as an aid than as a threat [2].

AI undoubtedly delivers significant advantages, but like any powerful technology, it also introduces challenges that must be addressed. A strategic approach to AI risk management has therefore become essential. In this article, we explore how businesses can maximize the value of AI in security operations, along with practical use cases and proven risk management practices.

Top 5 use cases of AI in risk management

Firstly, let's look at AI as a powerful tool for risk management. AI streamlines business operations, providing organizations with speed, accuracy, and deeply informed decision-making. Let's review some of the most prominent AI-driven risk management use cases:

1. Risk detection and mitigation

Through real-time threat monitoring, AI systems continuously scan digital environments for unusual patterns and suspicious activity. For example, in corporate networks, AI models track system logs, flag abnormal access, and spot potential breaches when they happen. AI threat detection allows security teams to act before vulnerabilities escalate.

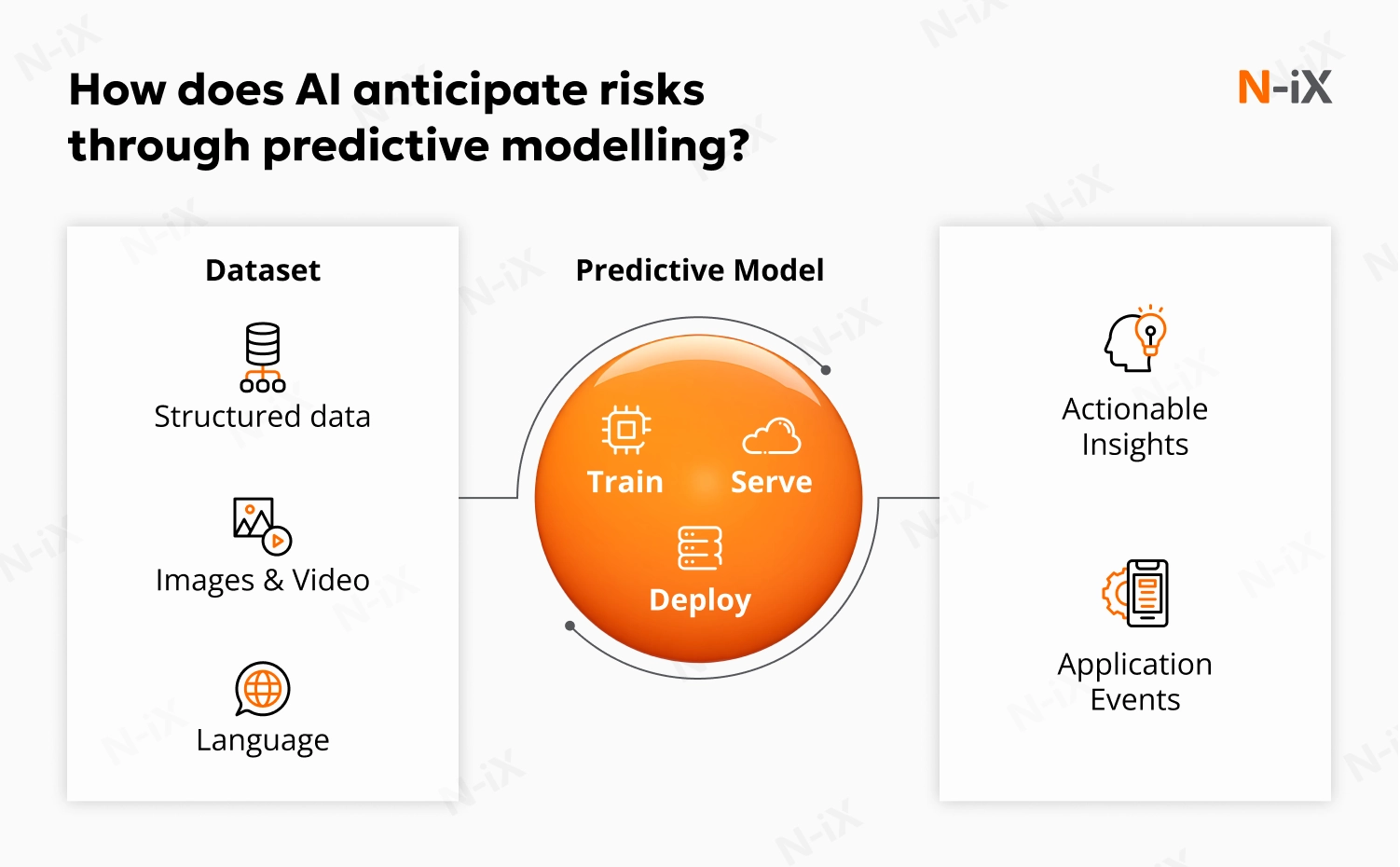

Another key capability is predictive modeling. By examining historical and real-time data, AI can anticipate issues like equipment failure or supply chain disruption. This lets organizations allocate resources proactively, prepare for disruptions, and minimize losses.

Finally, AI helps cybersecurity professionals manage alert fatigue through automatic risk categorization. Instead of manual sorting, intelligent systems classify incidents based on predefined criteria like severity, likelihood, and potential impact. This ensures immediate attention for high-risk events, improving reaction speed and reducing operational downtime.
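As a rough illustration of categorization by predefined criteria, the sketch below scores each alert as the product of severity, likelihood, and impact, then sorts the queue. The 1 to 5 scales, the cut-offs, and all names here are assumptions made for the example, not a standard or a vendor's method.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Alert:
    name: str
    severity: int    # 1 (low) to 5 (critical); hypothetical scale
    likelihood: int  # 1 (rare) to 5 (almost certain); hypothetical scale
    impact: int      # 1 (negligible) to 5 (business-critical); hypothetical scale


def risk_score(alert: Alert) -> int:
    """Multiply the three criteria; the product ranges from 1 to 125."""
    return alert.severity * alert.likelihood * alert.impact


def categorize(alert: Alert) -> str:
    """Map a score to a triage bucket using illustrative cut-offs."""
    score = risk_score(alert)
    if score >= 60:
        return "high"
    if score >= 20:
        return "medium"
    return "low"


def triage(alerts: list[Alert]) -> list[tuple[str, str]]:
    """Return (name, category) pairs with the highest score first."""
    ranked = sorted(alerts, key=risk_score, reverse=True)
    return [(a.name, categorize(a)) for a in ranked]


alerts = [
    Alert("failed login burst", severity=2, likelihood=4, impact=2),
    Alert("ransomware signature", severity=5, likelihood=3, impact=5),
    Alert("expired TLS certificate", severity=3, likelihood=5, impact=2),
]
queue = triage(alerts)
print(queue)
```

In practice the weights and thresholds would be tuned to the organization's own risk appetite; a simple product is only one of several possible scoring schemes.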

2. Fraud detection and prevention

Using AI for risk management also enables organizations to tackle sophisticated fraud schemes through advanced pattern recognition. Machine learning models ingest vast volumes of transaction data and identify subtle anomalies that human analysts might miss. For example, AI systems in banking monitor withdrawals, payments, and account changes in real time. They distinguish legitimate user behavior from potentially fraudulent activity.

Moreover, AI's adaptability is crucial. Models learn from new data and continually update their fraud detection parameters. This dynamic approach helps anticipate emerging fraud techniques. When criminals change strategies, AI systems evolve, adapt, and protect organizations' assets and reputation.
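To make the idea of a detector that keeps learning from new data concrete, here is a minimal sketch that flags transaction amounts far from a running mean and then folds each new value into its statistics (Welford's online algorithm). It is a simple statistical stand-in rather than a production fraud model; the class name, the z-score threshold of 3, and the warm-up length are assumptions.

```python
import math


class RunningAnomalyDetector:
    """Flags values far from the running mean, then learns from each value."""

    def __init__(self, threshold: float = 3.0, warmup: int = 5):
        self.threshold = threshold  # z-score cut-off (assumed)
        self.warmup = warmup        # observations required before flagging
        self.count = 0
        self.mean = 0.0
        self.m2 = 0.0               # running sum of squared deviations

    def is_anomalous(self, value: float) -> bool:
        if self.count < self.warmup:
            return False
        std = math.sqrt(self.m2 / (self.count - 1))
        if std == 0:
            return value != self.mean
        return abs(value - self.mean) / std > self.threshold

    def update(self, value: float) -> None:
        # Welford's update keeps mean and variance without storing history.
        self.count += 1
        delta = value - self.mean
        self.mean += delta / self.count
        self.m2 += delta * (value - self.mean)

    def observe(self, value: float) -> bool:
        """Score the value against past data, then learn from it."""
        flagged = self.is_anomalous(value)
        self.update(value)
        return flagged


detector = RunningAnomalyDetector()
amounts = [42.0, 38.5, 45.0, 40.0, 41.5, 39.0, 950.0, 43.0]
flags = [detector.observe(a) for a in amounts]
print(flags)
```

One design choice worth noting: this sketch learns from every value, including flagged ones, which lets the baseline follow genuine shifts in behavior but also lets outliers widen it. A real system might hold flagged values back until an analyst confirms them.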

3. Regulatory compliance

Automated compliance verification is one of the key use cases of AI in risk management. AI can process contracts, audit logs, and disclosure reports, instantly detecting non-conformities or missing documentation. If a contractual clause is absent or an employee fails to complete mandatory training, the system can flag it for review.
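The flagging step can be sketched with plain rules. The example below uses keyword matching as a deliberately simple stand-in for the language models a real system would use; the clause list, course names, and function names are all hypothetical.

```python
# Hypothetical list of clauses every contract is expected to contain.
REQUIRED_CLAUSES = ("confidentiality", "data protection", "termination")


def missing_clauses(contract_text: str, required=REQUIRED_CLAUSES) -> list[str]:
    """Return required clause names that never appear in the contract text."""
    text = contract_text.lower()
    return [clause for clause in required if clause not in text]


def overdue_training(
    completed: dict[str, set[str]], mandatory: set[str]
) -> dict[str, list[str]]:
    """Map each employee to the mandatory courses they have not completed."""
    gaps = {}
    for employee, done in completed.items():
        outstanding = mandatory - done
        if outstanding:
            gaps[employee] = sorted(outstanding)
    return gaps


contract = "This agreement covers Confidentiality and Termination of services."
clause_gaps = missing_clauses(contract)

mandatory_courses = {"security awareness", "gdpr basics"}
training_log = {
    "alice": {"security awareness", "gdpr basics"},
    "bob": {"security awareness"},
}
training_gaps = overdue_training(training_log, mandatory_courses)

print(clause_gaps)    # items to flag for legal review
print(training_gaps)  # items to flag for HR follow-up
```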

4. Surveys and interviews

AI enhances risk assessments by transforming traditional surveys and interviews. Instead of simple checkbox responses, AI-enabled surveys automate workflows, analyze open-ended answers, and identify organization-specific risk factors. Insights are instantly visualized, allowing risk trends to be seen at a glance.

AI also streamlines interviews by capturing unstructured data consistently. NLP quickly highlights patterns and trends from transcripts, surfacing critical insights for quick, informed decisions.

5. Continuous risk assessment

AI-powered risk management tools make risk assessment feasible on an ongoing basis, not just periodically. They enable continuous evaluation of process integrity, control effectiveness, and compliance with internal policies. This leads to faster identification of gaps and remediation actions.



4 potential areas of AI risks

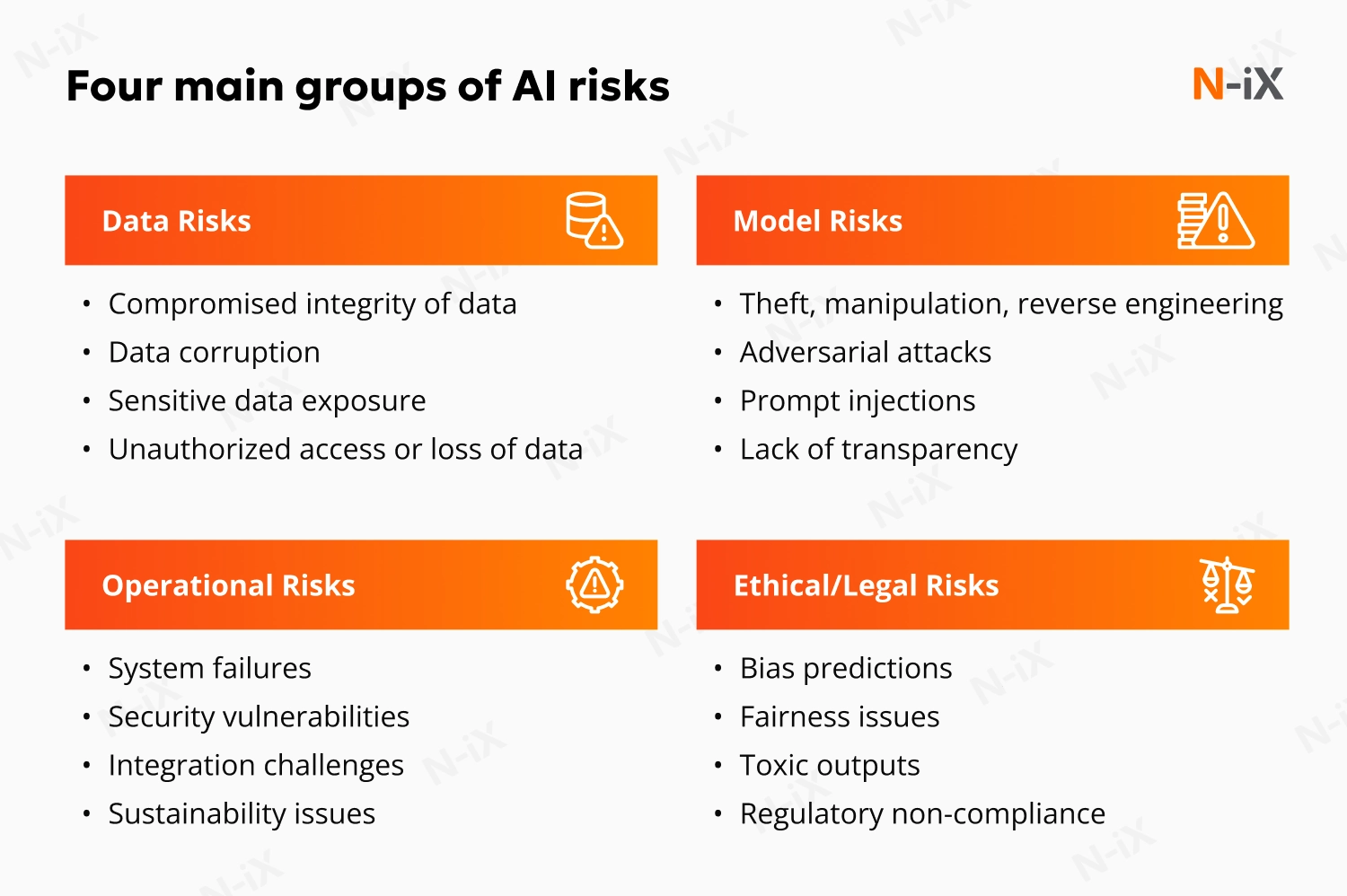

Despite all these benefits, there are some risks associated with AI. Without proper implementation, they can create vulnerabilities. These risks are typically divided into four categories:

- Data risks: Poor data quality, biased datasets, or exposure of personal information can pose risks. Data errors can cause AI models to misclassify threats, while data poisoning attacks can undermine model reliability.

- Model risks: Over time, models can drift when external circumstances or data patterns change, and accuracy falls. Some models have black-box algorithms that can be difficult to audit, explain, or validate. There is also the risk of adversarial attacks, where bad actors purposefully manipulate AI models to make incorrect predictions.

- Operational risks: AI systems integrated into business-critical environments affect daily operations. Downtime, unexpected failures, or integration errors can interrupt key processes. Dependence on third parties for AI solutions introduces new vulnerabilities. Any misalignment between AI outputs and organizational objectives may cause harm.

- Ethical and legal risks: AI can process sensitive information, infringing on privacy or introducing bias without proper management. Noncompliance can lead to penalties, legal challenges, and reputational damage. Legal risks can also arise from unintended consequences of using flawed or opaque AI models.
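Of the categories above, model drift is one that can be monitored numerically. The sketch below computes a population stability index (PSI) between a baseline sample of a feature and a recent sample. The bin count, the floor used for empty bins, and the 0.25 alert level are assumptions; 0.25 is a commonly cited rule of thumb rather than a fixed standard.

```python
import math


def psi(expected: list[float], actual: list[float], bins: int = 10) -> float:
    """Population stability index of `actual` against the `expected` baseline."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins
    if width == 0:
        width = 1.0  # degenerate baseline: every value lands in the first bin

    def proportions(values: list[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # clamp out-of-range values
            counts[idx] += 1
        # A small floor avoids log(0) when a bin is empty.
        return [max(c / len(values), 1e-6) for c in counts]

    e = proportions(expected)
    a = proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


baseline = [float(v) for v in range(100)]  # feature values at training time
recent = [v + 50.0 for v in baseline]      # same feature after a shift

drift = psi(baseline, recent)
if drift > 0.25:  # assumed alert level
    print(f"PSI={drift:.2f}: distribution has shifted, review the model")
```

A check like this covers only input drift on one feature; tracking accuracy against labeled outcomes remains necessary to catch cases where inputs look stable but the relationship to the target has changed.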

Top 7 best practices for AI risk management

To maximize benefits and contain risks, organizations must integrate strong AI risk management strategies in every phase of AI adoption. Below are seven best practices from N-iX experts for managing these risks.

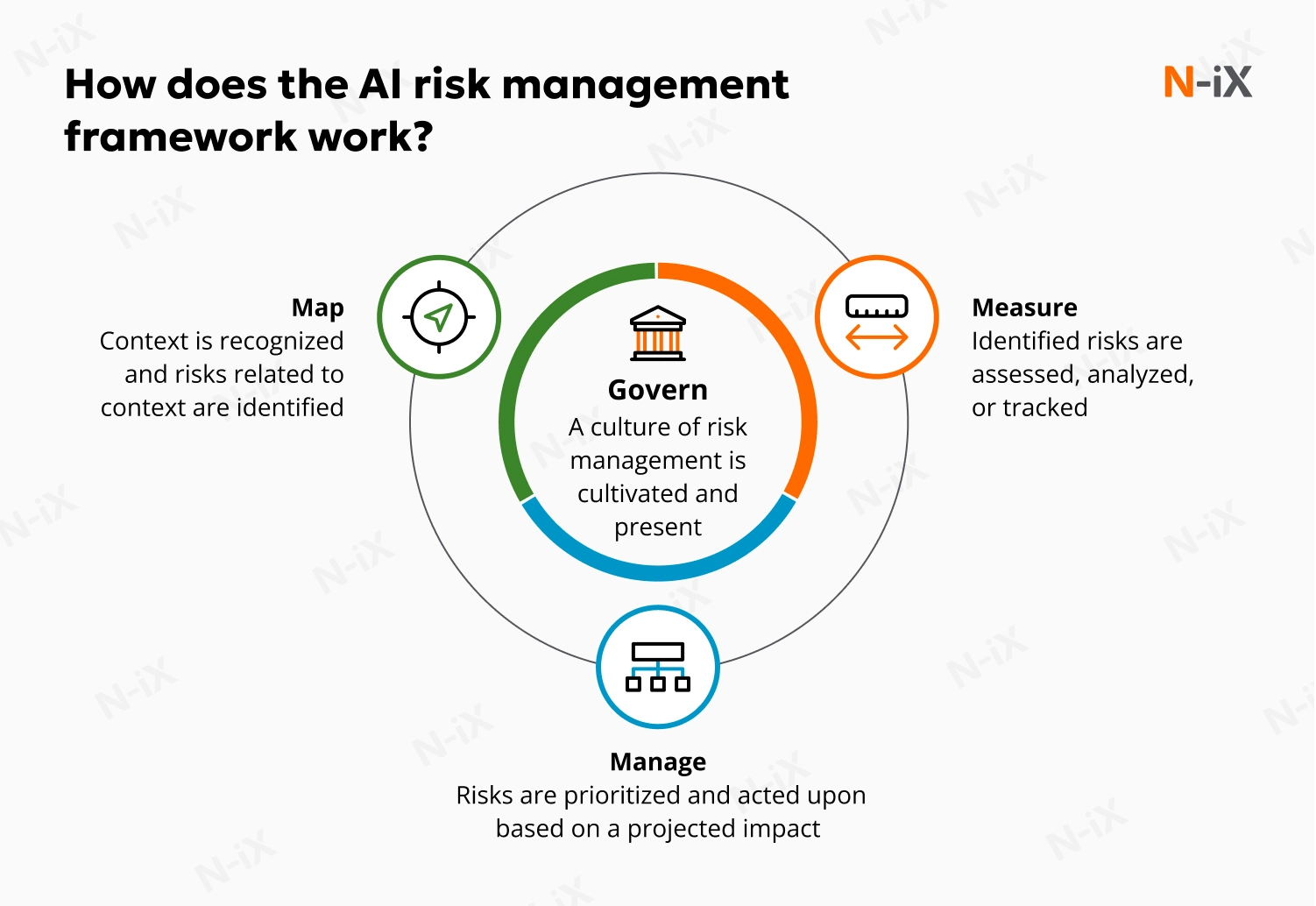

1. Leverage AI-specific risk management frameworks and tools

Industry standards like the NIST AI Risk Management Framework provide proven roadmaps for assessing and mitigating AI risks. Purpose-built tools support model validation, bias testing, and continual monitoring. By following established frameworks, businesses standardize processes and improve transparency across their AI landscape.

2. Establish robust governance

Create a cross-functional governance structure with clear roles and responsibilities. This includes an AI oversight committee with IT, data science, compliance, and business leadership. Governance policies should address data quality, transparency, access rights, and post-deployment monitoring.

3. Conduct comprehensive risk assessments

N-iX experts emphasize the importance of a systematic approach to AI risk and impact assessment. This involves performing data quality reviews, testing model performance, and simulating operational scenarios to find potential vulnerabilities. N-iX recommends moving away from one-time or periodic assessments. Instead, establish an ongoing process, documenting each step and applying improvements based on the insights you gather. Involving stakeholders from legal, technical, and business backgrounds helps ensure no critical risk goes unnoticed.

4. Apply robust data management practices

The value of AI depends on the trustworthiness and quality of its data foundation. Follow best practices for AI data management, including data sourcing protocols, thorough labeling and validation procedures, and comprehensive data lineage tracking. Implement automated security posture management tools to identify anomalies and detect early signs of data drift or contamination. Adhering to privacy regulations and maintaining complete transparency in data handling fosters trust with regulators and customers alike.

5. Manage bias and fairness proactively

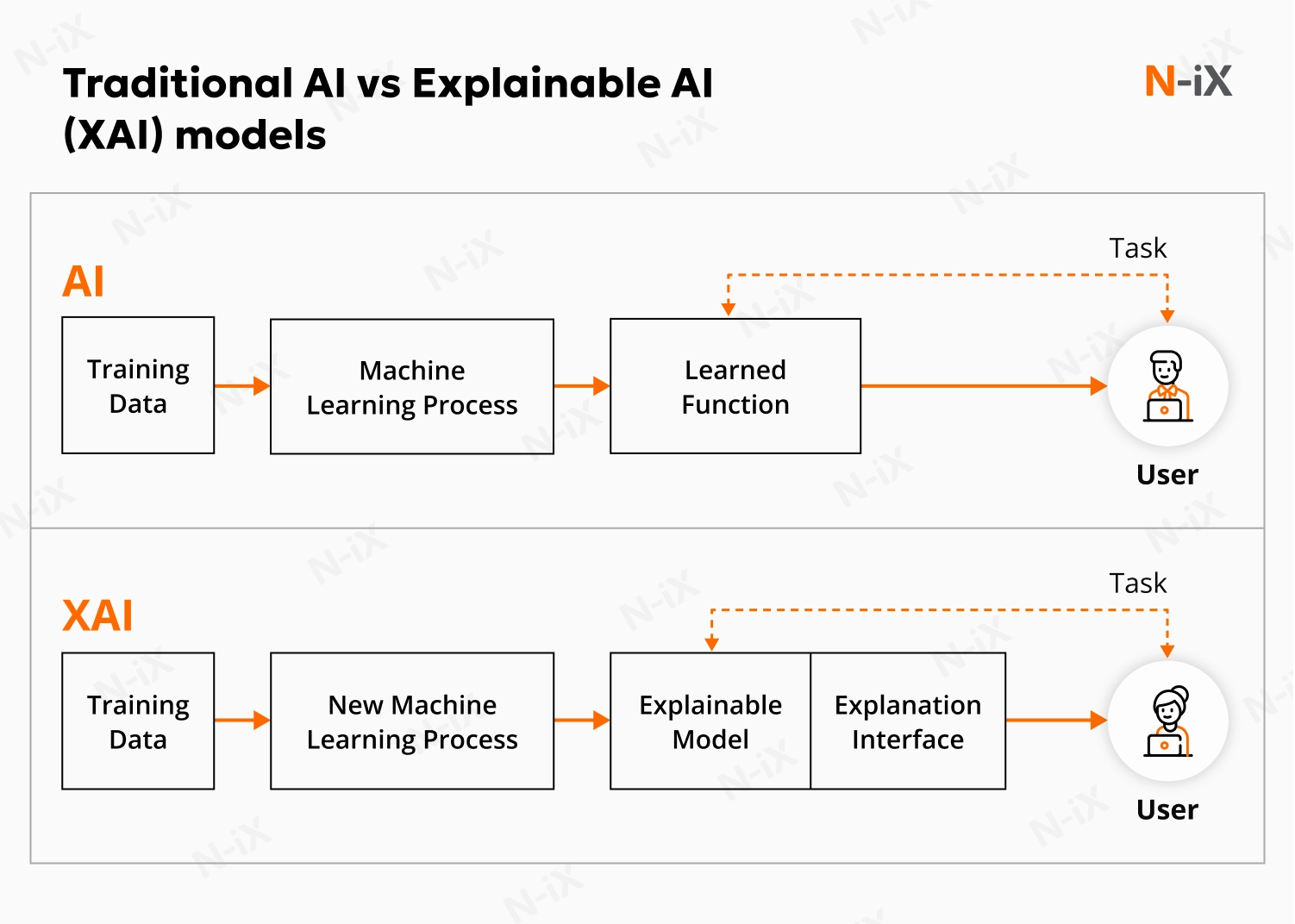

While bias cannot be eradicated completely, it can and must be controlled. Our data experts recommend integrating fairness testing throughout the AI lifecycle and involving multidisciplinary teams in model design and validation. Explainable AI (XAI) approaches should be used to ensure decision transparency, particularly in sensitive domains such as financial services or HR. In addition, pre-deployment bias remediation and ongoing monitoring after roll-out help detect new risks.
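One simple form of fairness testing compares how often a model gives a favorable outcome to each group. The sketch below computes per-group selection rates and their ratio; the group labels and data are invented, and the 0.8 cut-off (the "four-fifths" rule of thumb) is an assumption that will not suit every domain or jurisdiction.

```python
from collections import defaultdict


def selection_rates(decisions: list[tuple[str, bool]]) -> dict[str, float]:
    """Share of favorable outcomes per group from (group, approved) pairs."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, approved in decisions:
        totals[group] += 1
        positives[group] += int(approved)
    return {group: positives[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates: dict[str, float]) -> float:
    """Lowest selection rate divided by the highest (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected, so there is no disparity to measure
    return min(rates.values()) / highest


# Invented model decisions: group A approved 8 of 10, group B approved 5 of 10.
decisions = (
    [("A", True)] * 8 + [("A", False)] * 2
    + [("B", True)] * 5 + [("B", False)] * 5
)
rates = selection_rates(decisions)
ratio = disparate_impact_ratio(rates)

if ratio < 0.8:  # assumed threshold
    print(f"Selection-rate ratio {ratio:.3f} is below 0.8: review for bias")
```

Selection-rate parity is only one fairness metric; others, such as equal error rates across groups, can disagree with it, so the choice of metric is itself a governance decision.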

6. Adopt AI-specific security practices

Traditional security controls are not always sufficient for modern AI risk management systems. Safeguard data pipelines, protect models from adversarial attacks, and encrypt sensitive assets. Regularly test defenses using simulated attacks and penetration testing. N-iX also recommends layered security approaches that protect infrastructure and algorithms, as well as updating incident response plans to cover AI-specific threats.

7. Emphasize human oversight and collaboration

It is crucial to maintain human-in-the-loop capabilities for critical decision points. Ensure human experts can override or validate AI outputs if anomalies or uncertainties arise. Promote collaboration between business, technical, and compliance teams, and encourage knowledge sharing to keep your staff aware of potential risks.
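A human-in-the-loop checkpoint can be as simple as a routing rule. In the sketch below, a prediction goes to a reviewer when the model's confidence is low or when the proposed action is on a list of critical actions. The threshold, the action names, and the assumption that the confidence score is well calibrated are all illustrative.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Prediction:
    case_id: str
    action: str        # what the model proposes to do
    confidence: float  # model score in [0, 1]; assumed to be calibrated


# Hypothetical actions that always require a human sign-off.
CRITICAL_ACTIONS = frozenset({"block_account", "deny_claim"})


def route(pred: Prediction, threshold: float = 0.9) -> str:
    """Send critical or low-confidence predictions to a human reviewer."""
    if pred.action in CRITICAL_ACTIONS or pred.confidence < threshold:
        return "human_review"
    return "auto_apply"


predictions = [
    Prediction("c-1", "approve_payment", 0.97),
    Prediction("c-2", "approve_payment", 0.62),
    Prediction("c-3", "block_account", 0.99),
]
routes = {p.case_id: route(p) for p in predictions}
print(routes)
```

Routing critical actions to review regardless of confidence reflects the point above: a high score does not remove the need for human validation where the cost of an error is high.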

Success depends on balance. Like any other technology, AI can offer advantages and pose challenges. By following these best practices, you can defend your AI initiatives while using them for proactive risk management.

Conclusion

AI transforms security operations, equipping organizations with advanced tools to improve efficiency. Yet the risks AI introduces mean that success relies on more than just technology: it takes a balanced approach and ongoing vigilance. Navigating this balance with expert support helps achieve even better results. An experienced consultant, like N-iX, can provide the necessary expertise and implement industry best practices to defend your AI initiatives while using them for proactive security strategies.

With deep experience in AI and risk management, N-iX helps companies leverage new capabilities and reinforce their defenses. We provide expert guidance on ethical and responsible AI development and ensure compliance with GDPR, CCPA, SOC, and other regulatory standards.

With 23 years of experience, N-iX stands for innovative technologies and robust security for every client. Contact us today to leverage advanced approaches to modern defenses.

References

1. Cost of a Data Breach Report 2025 - IBM

2. AI in Cyber 2024: Is the Cybersecurity Profession Ready? - ISC2

FAQ

1. How is AI used in risk management?

AI automates threat detection, fraud prevention, and compliance monitoring. It enables real-time monitoring, predictive analytics, and faster, data-driven decision-making to reduce organizational risks.

2. Which framework is most commonly used to manage risks in AI projects?

The NIST AI Risk Management Framework is widely used, offering practical steps to identify and reduce risks in AI development and deployment. Other reference points include ISO/IEC 23894, the OECD AI Principles, and the requirements set out in the EU AI Act. Many organizations also combine these frameworks with internal guidelines to create a thorough approach to risk management.

3. How to do an AI risk assessment?

Assess AI risks by reviewing data quality, checking for bias, validating models, and ensuring security and compliance. Repeat assessments regularly and involve cross-functional teams for comprehensive results.

4. What is TEVV in AI?

TEVV (Test, Evaluation, Verification, and Validation) is a component of the risk management process built into frameworks like the NIST AI Risk Management Framework. It helps ensure AI systems are reliable, safe, and aligned with their intended purpose throughout their lifecycle.
