Cyber threats are evolving faster than traditional defenses can adapt, leaving many organizations exposed to increasingly sophisticated attacks. Agentic AI cybersecurity helps address these risks, offering autonomous systems that strengthen defenses, ease alert fatigue, and provide smarter protection technologies. With this rapid growth, however, companies face another challenge. The autonomy that makes this technology so powerful can also open the door to new vulnerabilities.

If you consider how agentic AI might fit your security strategy, you have to weigh both the opportunities and challenges it brings. This raises questions about where this technology delivers the most value in cybersecurity, and how you can safeguard your AI agentic defenses from becoming targets themselves. Let's find the answers in this article.

What is agentic AI cybersecurity?

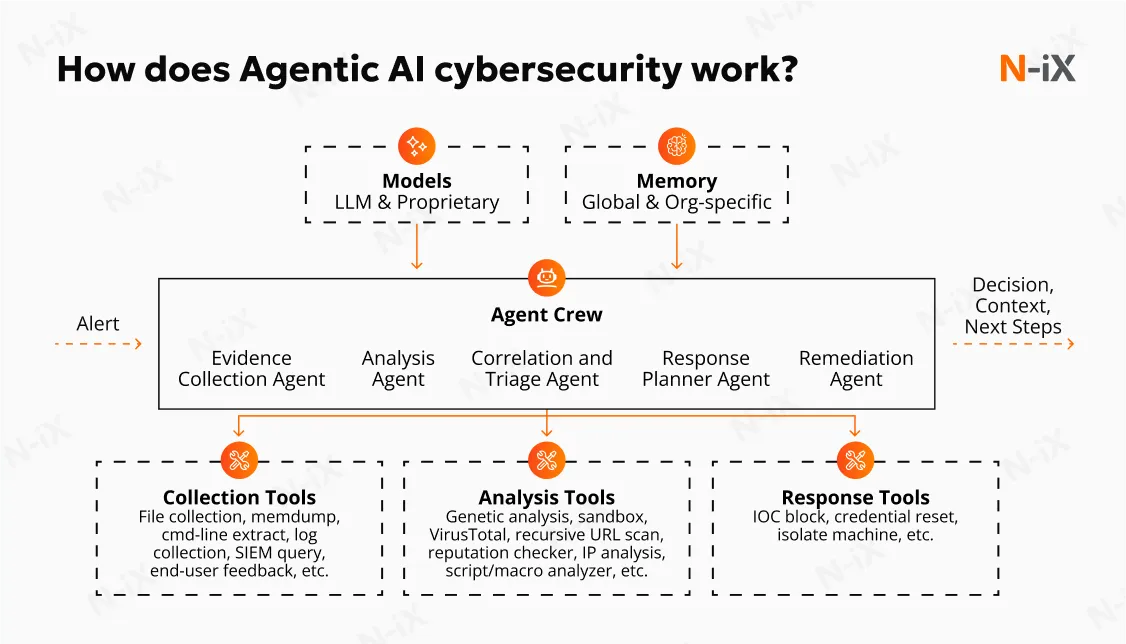

Cybersecurity agentic AI is an approach that combines multiple intelligent software agents. Each of them is capable of independent analysis and decision-making to protect digital environments. These agents communicate, coordinate, and learn from each other, forming a dynamic defense system. By leveraging the strengths of collaboration and autonomy, agentic AI delivers adaptive, proactive, and highly resilient security, introducing several distinctive capabilities:

- Autonomous decision-making: AI agents can identify and respond to threats without waiting for human input, dramatically speeding up reactions.

- Stateful memory: Agents retain context from past events, enabling more intelligent analysis and precise responses.

- Distributed analysis: Agentic AI works across endpoints, networks, and clouds, coordinating detection and response simultaneously at multiple layers.

- Coordinated strategy: Agents can share insights and tactics to deliver unified protection instead of isolated actions.

- Improved accuracy and reliability: With continuous learning and contextual awareness, agentic AI reduces false positives and becomes more reliable over time.

- Deep API and tool integration: Agentic solutions seamlessly connect with various security tools and platforms, automating workflows and increasing overall efficiency.

5 practical ways to use agentic AI for cybersecurity

Agentic AI is making a measurable impact on enterprise security. Here are a few examples in action.

1. Automated threat detection

Agentic AI enhances automated threat detection by continuously analyzing the digital landscape for subtle threats that conventional tools might overlook. Agents can work collaboratively, learn from past incidents, and adapt their detection strategies in real time, enabling them to spot subtle threats. By sharing insights and context across the environment, agentic AI can quickly identify true security incidents and respond proactively without human intervention.

2. Immediate incident response

When a security breach occurs, every second is critical. Agentic AI elevates incident response by autonomously investigating suspicious activities, isolating affected endpoints, and deploying response playbooks without waiting for human commands. These immediate coordinated actions limit the spread of threats and ensure critical systems stay protected while keeping security teams informed.

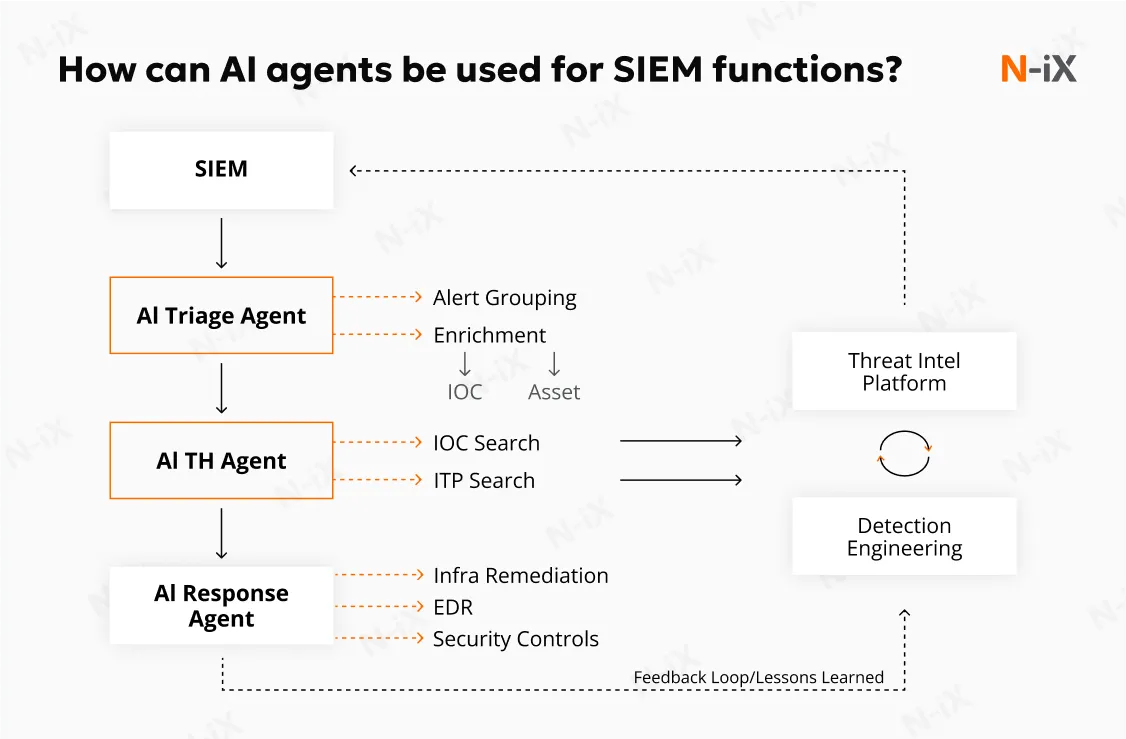

3. Intelligent SIEM alert triage

Traditional SIEM solutions often flood security teams with loads of alerts, many of which can be false positives. Among the most useful agentic AI applications in cybersecurity is the ability to transform alert management by integrating multiple data sources, identifying genuine attacks, and alerting human analysts only about critical ones. This intelligent triage reduces alert fatigue and allows your teams to focus on real threats.

4. Enhanced cloud misconfiguration remediation

Gartner predicts that by 2026, 60% organizations will rank cloud misconfiguration as a top cloud security risk. Agentic AI can mitigate these vulnerabilities. Agents can continuously monitor cloud environments for risky setups and immediately correct mistakes to prevent breaches and strengthen cloud security. Seamless integration with cloud APIs allows for swift remediation, closing security gaps before they become critical.

5. Adaptive third-party risk management

Even if your business is well protected, poorly secured integrations among your partners, vendors, or suppliers can put your systems at risk. Agentic AI allows you to extend cyber defenses beyond a single organization by autonomously monitoring the risk posture of third parties. It can continuously scan for suspicious interactions and enforce policies at digital touchpoints. Thus, agentic AI in cybersecurity helps ensure that vulnerabilities elsewhere don't become a weakness in your own defenses.

Explore 8 impactful AI agent applications in analytics—get the white paper!

Success!

Learn more: AI agent use cases: The missing piece in enterprise AI

Key security challenges of AI agents and how to solve them?

Agentic AI cybersecurity systems can give professionals smarter defenses against attacks. But there is a flip side: the same technology can be exploited by malicious actors. To help you stay ahead, N-iX engineers break down these difficulties and share practical strategies for protecting your agentic AI cybersecurity applications.

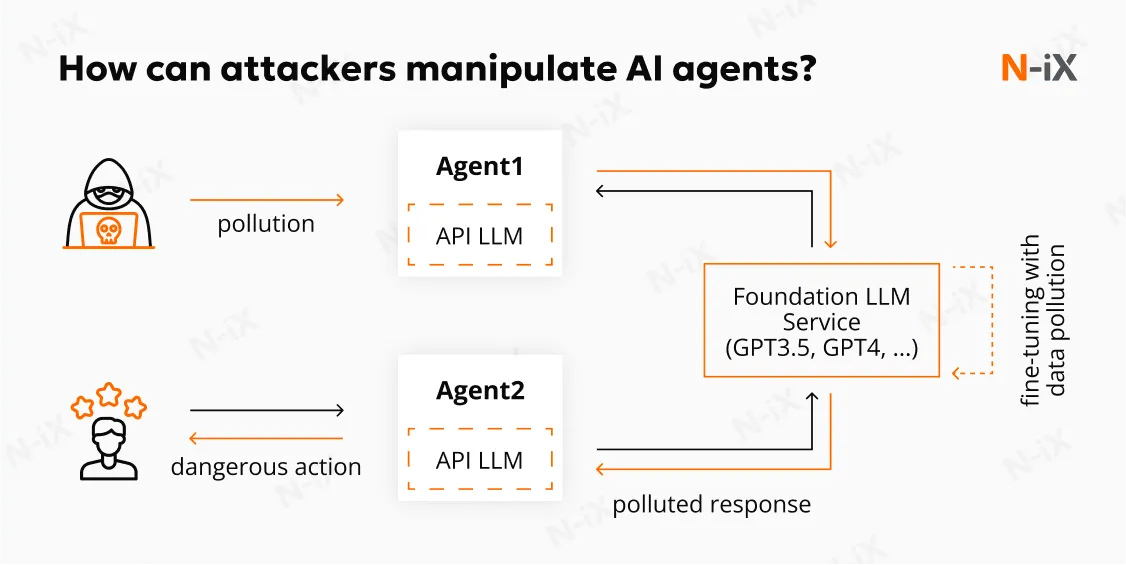

New attack vectors

Every autonomous agent running in your environment could become a new target if not properly secured. Attackers can try to exploit weaknesses in your agent integration or permission settings. This can turn your defence tool into a new entry point to critical systems for further attacks.

Solution: Implement agent-specific security practices

Agentic AI comes with unique security needs that traditional frameworks may not cover. That is why it is crucial to adapt to new attack vectors. For example, focus more on granular and context-aware privilege management for access control. Assign agents only the privileges essential to their function, and where higher privileges are unavoidable, use segmentation to minimize risks. Implement privilege separation within agents where possible, breaking complex tools into smaller components with distinct permissions. Additionally, isolating agents using containerization or sandboxing provides further protection against privilege escalation and lateral attacks.

AI-specific attacks

Sophisticated adversarial attacks are techniques aimed at deceiving or subverting AI models, causing various AI security risks. Agentic AI can be susceptible to data poisoning, adversarial prompts, or manipulation of its input channels. If attackers succeed, they might cause the agent to ignore threats, misclassify incidents, or even take harmful actions without obvious signs of a breach.

Solution: Integrate adversarial training and human oversight

Implement adversarial training to expose your models to attack scenarios and improve their resilience. Use strict input validation to detect and filter out suspicious or manipulated data before it reaches your AI agents. Additionally, integrate human-in-the-loop oversight so that experts can review, verify, and redefine important or ambiguous AI-driven decisions.

Privacy concerns

AI agents analyze and make decisions based on large volumes of sensitive information, potentially causing privacy risks. Without strict controls, agents may unintentionally access, process, or share personal or confidential data. This could result in regulatory violations or erode trust with clients and partners.

Solution: Establish clear governance policies

Clear policies and governance structures are fundamental for effective agentic AI deployment. Define how agents are used, who can oversee and modify them, and what boundaries should not be crossed. Well-established rules ensure accountability and give you confidence in managing AI-driven processes.

Human errors

Working with highly autonomous systems changes the traditional relationship between people and technology. There is a risk that overreliance on agents leads to reduced human vigilance, unclear responsibility, or delays in human intervention when something goes wrong. Without clear oversight and accountability, errors or malicious actions can go undetected or unresolved for too long.

Solution: Promote human-centric security awareness

Organizations must shift from only technical defenses to strategies including human factors. Provide educational training and ensure tour employees understand how agentic AI in cybersecurity works, can recognize the signs of manipulation or malfunction, and know when to intervene. A security-aware staff plays a crucial role in protecting AI-driven solutions.

Keep reading the guide about building multi-agent AI systems for your needs

Conclusion

Integrating agentic AI and cybersecurity allows organizations to leverage capabilities beyond traditional security tools, from independent decision-making to contextual memory and coordinated responses. Yet, with these advantages come new challenges. Expanded attack surfaces and more sophisticated attacks bring new risks that cannot be overlooked.

To get the most out of agentic AI, organizations need to establish the right balance between innovation and risk management. This requires not only strong security strategies but also guidance from experienced partners who understand how to protect new initiatives without limiting their potential.

How N-iX can help?

N-iX helps businesses maintain this balance by designing and implementing innovative intelligent solutions while maintaining security and compliance. Our engineering team provides expertise in AI system protection, governance frameworks, and comprehensive risk assessment.

With over 23 years of experience and adherence to key security standards, including GDPR, CCPA, SOC, PCI DSS, and ISO 27001, N-iX ensures high-level protection at every stage of implementing new technologies.

Contact N-iX today to develop a strategic approach to agentic AI cybersecurity that protects your organization with technological advancement.

Have a question?

Speak to an expert