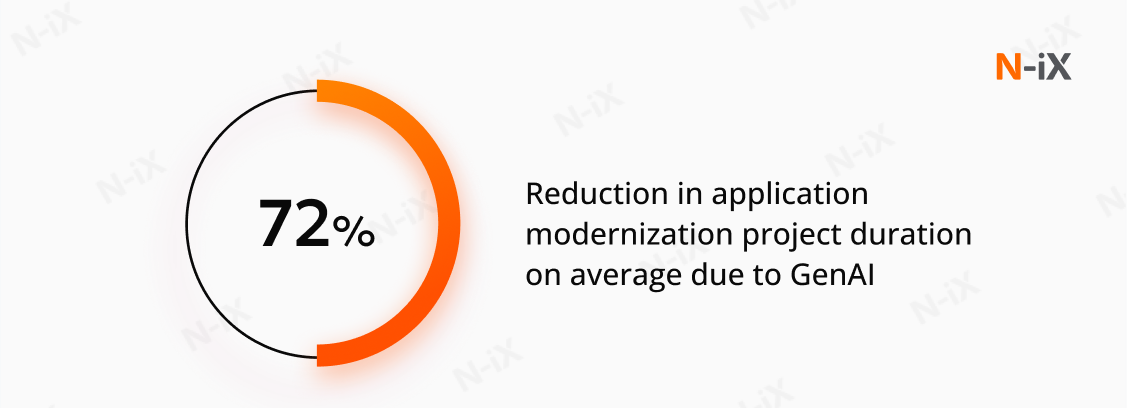

Legacy systems expose businesses to risks through outdated protocols, strain IT budgets, and depend on increasingly scarce talent for maintenance. Application modernization has always been an intricate undertaking, often requiring many months to deliver the first results. However, GenAI can now automate and streamline many parts of this process. These tools facilitate faster rollouts, leaner operations, and an enterprise architecture that evolves with the business strategy. Let’s explore the most consequential use cases of generative AI in application modernization.

Use cases of GenAI in application modernization

Modernization projects frequently stall under the weight of system complexity, with discovery, refactoring, data migration, and compliance checks each demanding vast amounts of time and specialized expertise. Software modernization's true challenge lies not in writing new code, which is straightforward, but in untangling the legacy system to retain what works and deliver maximum value with the least amount of rewrite. Teams can dedicate weeks and even months to cataloging modules, disentangling custom integrations, and rerouting data across disparate silos. However, by leveraging AI to identify patterns, gain high-level insights into code structure, and intelligently map data relationships, engineers can amplify their impact and concentrate on strategic tasks.

We selected the use cases of generative AI in application modernization that make a difference in the development process right now:

Documentation and streamlined knowledge transfer

The application modernization process starts with engineers mapping the system and understanding its architecture and the business logic driving all its components. Without GenAI, this means everyone on the team reading through hundreds of pages of manuals and thousands of lines of code. AI can auto-generate clear, human-readable summaries of large code bases, data models, and module interactions. The model does not translate code line-by-line, but detects patterns and explains the logic behind them. This clarity and the speed of knowledge transfer are among the most valuable benefits of generative AI in application modernization.

Interactive Q&A agents can walk engineers through complex workflows or troubleshooting steps, drastically reducing onboarding time and knowledge silos.

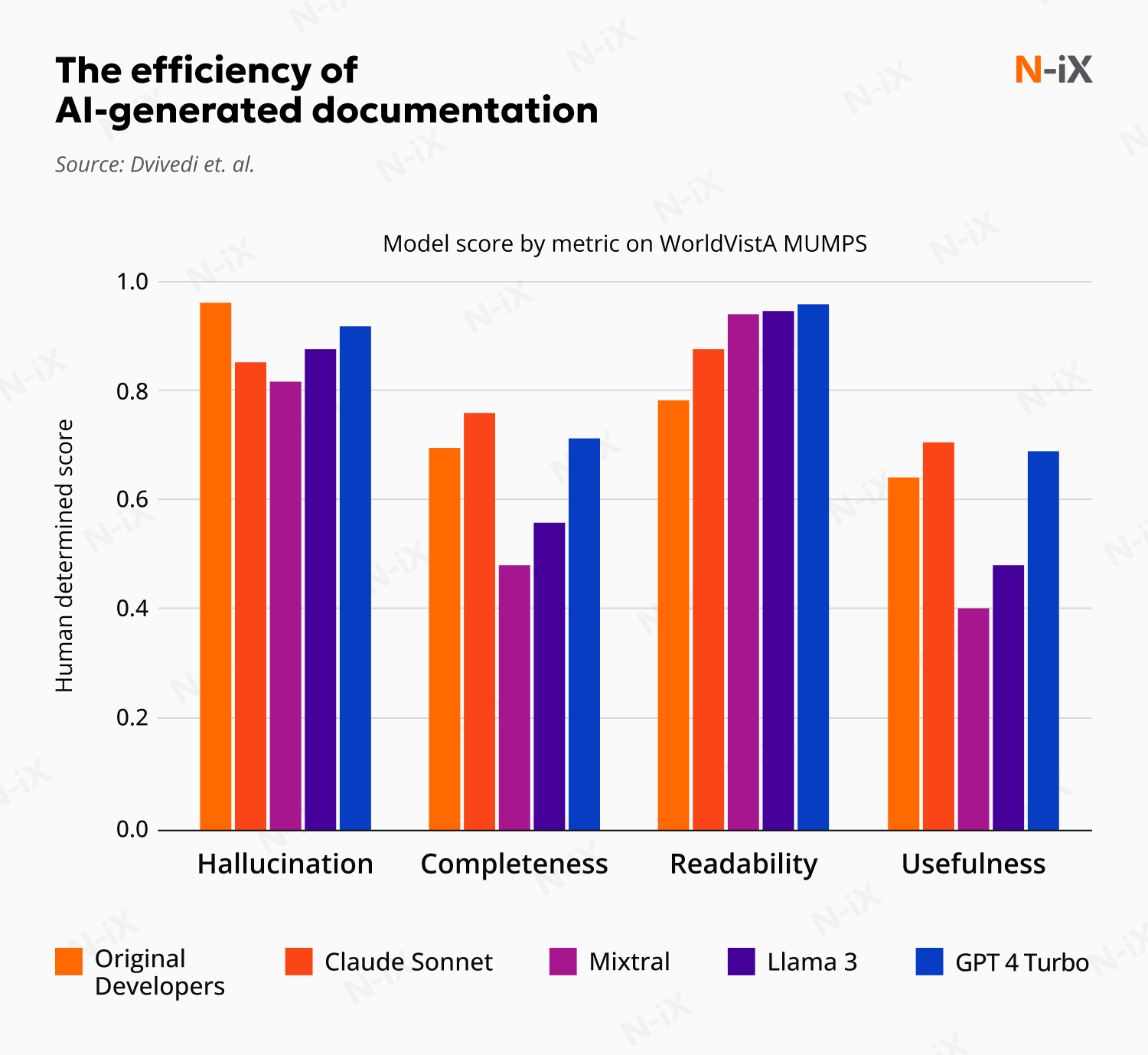

Academic studies on GenAI documentation have shown that even non-specialized LLMs can produce documentation on par with human-written comments [1]. Notably, GenAI outperforms human engineers on the readability of both in-line comments and function documentation. This gap is understandable: what seems obvious to one expert is often the critical missing piece of context for another, and developers tend to prefer spending their time on tasks other than writing extensive explanations.

However, the performance of generative AI in automated documentation drops when working with rarer languages. Many legacy systems run on very old languages, such as COBOL for financial software, where the code can be highly intricate. Even so, a case study by Virtusa and AWS reports that GenAI cut the time needed to reverse engineer and document the functions of one COBOL file from 20 days to a few minutes [2]. There is good reason to remain alert and sceptical of AI outputs, yet the boost in productivity is undeniable.

Engineers staying on top of AI developments will select the AI-technology stack most fitting for the specific project’s needs. Beyond the stack, enriching prompts with data, adding explainability safeguards, and applying governance frameworks significantly boost model accuracy, relevance, and explainability.
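One way to enrich a prompt with data is to pass the model structural facts extracted from the code alongside the source itself. The sketch below is illustrative only: it assumes the code under analysis is Python (a COBOL system would need its own parser), and `summarize_structure`, `build_doc_prompt`, and the sample `LEGACY_SNIPPET` are hypothetical names, not part of any tool mentioned here. It stops at building the prompt text and does not call a model.

```python
from __future__ import annotations

import ast


def summarize_structure(source: str) -> list[dict]:
    """Collect simple structural facts about each function in a Python source string."""
    tree = ast.parse(source)
    facts = []
    for node in ast.walk(tree):
        if isinstance(node, ast.FunctionDef):
            # Names of functions called directly inside this function body.
            calls = sorted({
                n.func.id
                for n in ast.walk(node)
                if isinstance(n, ast.Call) and isinstance(n.func, ast.Name)
            })
            facts.append({
                "name": node.name,
                "args": [a.arg for a in node.args.args],
                "calls": calls,
                "has_docstring": ast.get_docstring(node) is not None,
            })
    return facts


def build_doc_prompt(source: str, domain_notes: str) -> str:
    """Assemble a documentation prompt that carries domain notes and extracted structure."""
    lines = [
        "Explain the business logic of the code below in plain language.",
        f"Domain notes: {domain_notes}",
        "Known structure:",
    ]
    for fact in summarize_structure(source):
        called = ", ".join(fact["calls"]) or "nothing"
        lines.append(f"- {fact['name']}({', '.join(fact['args'])}) calls: {called}")
    lines.append("Code:")
    lines.append(source)
    return "\n".join(lines)


# Hypothetical legacy function used only to exercise the sketch.
LEGACY_SNIPPET = '''
def calc_fee(amount, tier):
    rate = lookup_rate(tier)
    return round_half_up(amount * rate)
'''

prompt = build_doc_prompt(LEGACY_SNIPPET, "Fees are charged per customer tier.")
```

Keeping the extracted facts separate from the raw source also gives reviewers something concrete to check the generated explanation against.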

Legacy system mapping

Traditional system mapping tooling only scratches the surface of the needed work. Static analyzers flag dependencies, but can’t discern intent, context, or subtle nuances. Engineers trace execution paths, analyze configuration files, and hold meetings to make sure the intent behind each module and integration has been correctly identified.

Generative AI excels where rote analysis is the weakest: it builds a map of module relationships, data flows, and embedded business logic. And because the analysis can take in new commits and test results as they arrive, the system map can be kept up to date to streamline IT operations.

For monolithic architectures that don't align with modern standards, AI can identify optimal microservices and modular architecture decomposition. Models apply clustering techniques to detect low-coupling, high-cohesion modules, identifying naturally co-occurring classes, functions, or data tables (such as all customer-profile operations or payment-processing logic), even if scattered across multiple folders. AI suggests an optimal decomposition strategy: which clusters become independent microservices and APIs, and what shared libraries or event streams they need.
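To make the clustering idea concrete, here is a minimal sketch that groups functions by the data tables they touch, regardless of which folder they live in. It is an assumption-laden toy, not the method any particular tool uses: it measures Jaccard similarity between table sets and merges functions whose similarity reaches a threshold (single-linkage grouping via union-find). The function names, table names, and the 0.5 threshold are all hypothetical.

```python
from __future__ import annotations

from itertools import combinations


def jaccard(a: set, b: set) -> float:
    """Share of tables two functions have in common (0.0 to 1.0)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def cluster_by_shared_tables(usage: dict[str, set[str]], threshold: float = 0.5) -> list[list[str]]:
    """Group functions whose table usage overlaps by at least `threshold`."""
    parent = {name: name for name in usage}

    def find(x: str) -> str:
        # Follow parent links to the cluster representative, compressing the path.
        while parent[x] != x:
            parent[x] = parent[parent[x]]
            x = parent[x]
        return x

    for a, b in combinations(usage, 2):
        if jaccard(usage[a], usage[b]) >= threshold:
            parent[find(a)] = find(b)  # merge the two clusters

    groups: dict[str, list[str]] = {}
    for name in usage:
        groups.setdefault(find(name), []).append(name)
    return sorted(sorted(members) for members in groups.values())


# Hypothetical usage data: function path -> tables it reads or writes.
TABLE_USAGE = {
    "billing/charge_card": {"payments", "customers"},
    "utils/refund": {"payments", "customers"},
    "crm/update_profile": {"customers", "addresses"},
    "reports/address_check": {"addresses", "customers"},
    "jobs/ledger_export": {"ledger"},
}

candidate_services = cluster_by_shared_tables(TABLE_USAGE)
```

With this sample data, the payment functions and the customer-profile functions end up in separate groups even though each pair is split across folders. A real decomposition would also weigh call graphs, transaction boundaries, and team ownership.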

Dive deeper: Application modernization strategy: Executive’s handbook


Code migration

Generative AI in application modernization can automate translations of functions, modules, or entire services. A recent study at Google finds that generative AI can shoulder between 69% and 75% of code-change edits during large-scale migrations, yielding roughly a 50% reduction in overall project duration compared to manual efforts [3]. Similarly, Airbnb reported using LLMs to migrate 3,500 files in six weeks instead of the expected 18 months, a reduction of more than 90%.

A study by EY reports an 85% accuracy rate in translating PL/SQL procedures to Spark SQL, accelerating the process by 35% [4]. While AI shows impressive results in code migration, its effectiveness with legacy code is still debated [5]. Another study finds that between 2% and 47% of machine-translated code is fit to be deployed, depending on the model and context [6]. There are multiple challenges in the application modernization context:

- Fewer parallel training datasets: The accuracy can vary between language pairs, and the scarcity of datasets for less common programming language pairs complicates the training of code migration models.

- Paradigm shift complexity: Translating code between different programming paradigms can lead to technically correct but inefficient or unidiomatic code.

- Loss of high-level context during line-by-line translation: LLMs struggle with understanding both local and global contexts at the same time, which is essential for making accurate changes in large codebases. This means that AI translation can be of limited use for some applications.

Strict human review policies are essential to catch and correct the roughly 30% of AI-generated code that may contain errors, security vulnerabilities, or misaligned logic before it’s merged into production. Systematic, expert-led inspections and automated checks can ensure that only high-quality, reliable code is deployed. To address AI's limitations, a key best practice is to incorporate industry or organization-specific information and best practices into the training data. This provides the model with essential context.

More general concerns about the use of AI are also relevant to application modernization. Implementing explainable AI practices can be highly beneficial in improving the impact of LLMs.

Configuration analysis

Configuration analysis ensures that when applications are modernized or moved to new platforms, they operate reliably, securely, and in full compliance. Without accurate and continuous configuration analysis, modernization projects can stumble over unknown service couplings or incompatible parameter sets that emerge only in production. Generative AI can parse configurations, recommend optimized settings, and keep models in sync with code changes, creating a dynamic feedback loop.

Modern systems rely on hundreds of disparate configuration files spread across multiple environments, making manual discovery and validation very difficult. Generative AI in application modernization ingests these raw text files, applies natural-language understanding to extract key-value pairs at scale, and flags anomalous settings against best-practice templates in seconds.
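The extract-and-compare step can be illustrated with a small rule-based sketch. It covers only the deterministic part (parsing `key = value` lines and checking them against a template), not the language-model part. The file format, the setting names, and the `BASELINE` rules are assumptions made up for this example.

```python
from __future__ import annotations

from typing import Callable


def parse_key_values(text: str) -> dict[str, str]:
    """Extract key/value pairs from simple `key = value` lines, ignoring # comments."""
    settings = {}
    for raw in text.splitlines():
        # Naive comment handling: a '#' inside a value would also be cut off.
        line = raw.split("#", 1)[0].strip()
        if not line or "=" not in line:
            continue
        key, value = line.split("=", 1)
        settings[key.strip()] = value.strip()
    return settings


# Hypothetical best-practice template: setting name -> predicate on the raw value.
BASELINE: dict[str, Callable[[str], bool]] = {
    "tls.enabled": lambda v: v.lower() == "true",
    "session.timeout_s": lambda v: v.isdigit() and int(v) <= 1800,
    "log.level": lambda v: v.upper() in {"INFO", "WARN", "ERROR"},
}


def flag_anomalies(settings: dict[str, str], baseline=BASELINE) -> list[str]:
    """List settings that are missing or violate the baseline template."""
    findings = []
    for key, is_ok in baseline.items():
        if key not in settings:
            findings.append(f"{key}: missing")
        elif not is_ok(settings[key]):
            findings.append(f"{key}: unexpected value {settings[key]!r}")
    return findings


# Hypothetical legacy configuration file.
SAMPLE_CONFIG = """
# legacy app settings
tls.enabled = false
session.timeout_s=86400
db.pool.size = 20
"""

findings = flag_anomalies(parse_key_values(SAMPLE_CONFIG))
```

For the sample file, the findings flag disabled TLS, an overlong session timeout, and a missing log level. Settings absent from the template, such as `db.pool.size`, pass through unflagged.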

QA Automation and test generation

Where manual test-case creation and maintenance once stretched timelines and budgets, AI-driven tools now generate, update, and optimize tests directly from code and requirements. This shift not only accelerates delivery but also enhances test coverage and accuracy. In conventional modernization efforts, software QA and testing often begin with hand-crafting hundreds or thousands of test cases. This can take weeks or months for large legacy codebases.

As more changes are introduced to the application (new code, integrations, and APIs), QA workflows struggle to keep pace with CI/CD pipelines, creating bottlenecks. Teams must mask or synthesize sensitive production data, manage compliance, and ensure coverage across many edge cases, all of which can leave gaps in testing scenarios.

Generative AI models can analyze entire repositories to auto-generate unit and integration tests in real time. When APIs or data schemas evolve, AI-driven test generators can automatically update or flag affected tests, reducing manual test-creation effort by over 50% [7].
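The flagging step can be sketched without any model at all: compare two versions of a schema, then look up which tests reference the changed fields. This is a simplified illustration under stated assumptions. The schemas, the test names, and the `TEST_FIELDS` mapping are hypothetical, and a real tool would have to derive that mapping from the test code itself.

```python
from __future__ import annotations


def schema_diff(old: dict[str, str], new: dict[str, str]) -> set[str]:
    """Fields that were added, removed, or changed type between two schema versions."""
    retyped = {field for field in old.keys() & new.keys() if old[field] != new[field]}
    added_or_removed = old.keys() ^ new.keys()
    return retyped | added_or_removed


def affected_tests(test_fields: dict[str, set[str]], changed: set[str]) -> dict[str, list[str]]:
    """Map each test that touches a changed field to the fields that affect it."""
    return {
        test: sorted(fields & changed)
        for test, fields in sorted(test_fields.items())
        if fields & changed
    }


# Hypothetical schema versions: field name -> type.
OLD_SCHEMA = {"id": "int", "email": "str", "balance": "float"}
NEW_SCHEMA = {"id": "int", "email": "str", "balance": "decimal", "currency": "str"}

# Hypothetical mapping of tests to the fields they read or assert on.
TEST_FIELDS = {
    "test_login": {"id", "email"},
    "test_statement": {"id", "balance"},
    "test_transfer": {"balance", "currency"},
}

changed_fields = schema_diff(OLD_SCHEMA, NEW_SCHEMA)
to_review = affected_tests(TEST_FIELDS, changed_fields)
```

In this example `test_login` is left alone, while `test_statement` and `test_transfer` are flagged for regeneration or review.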

Read more: Generative AI use cases and applications

Why choose N-iX for your application modernization project

N-iX has over two decades of experience helping clients reduce technical debt and update their tech stacks. Our teams are leading the way in implementing generative AI for application modernization, delivering results that pay off faster, are more secure, and are built to last. Here’s why N-iX is a top choice for your modernization project:

- Expertise and experience: With over 23 years in the industry and a team of over 2,400 professionals, N-iX offers extensive knowledge across various domains, including software architecture, cloud transformation, and AI.

- Tailored solutions: N-iX offers technology consulting and custom software development services to meet the unique needs of each client, ensuring effective modernization strategies.

- Comprehensive services: The company provides a wide range of services, from addressing technical debt and infrastructure modernization to full-cycle implementation and cloud migration.

- Proven track record: Client testimonials highlight N-iX's professionalism, reliability, and ability to deliver high-quality results on time, showcasing their commitment to excellence.

Conclusion

Generative AI in application modernization offers a transformative approach, addressing the complexities and bottlenecks inherent in legacy systems. While challenges exist, particularly with rare languages and nuanced code transformations, the strategic adoption of GenAI, combined with expert oversight and refined training data, positions businesses to achieve leaner operations and a more adaptable enterprise architecture.

References

1. A comparative analysis of large language models for code documentation generation. S. S. Dvivedi, V. Vijay, S. L. R. Pujari, S. Lodh, and D. Kumar, 2024

2. Accelerate Legacy App Modernization with Virtusa and AWS Generative AI. 2024

3. Migrating Code At Scale With LLMs At Google. Ziftci et al., 2025

4. GenAI for software modernization: upgrading legacy systems. EY, 2024

5. Leveraging Large Language Models for Automated Code Migration and Repository-Level Tasks. Joe El Khoury, 2025

6. Lost in Translation: A Study of Bugs Introduced by Large Language Models While Translating Code. Pan et al., 2024

7. Streamlining Quality Assurance with Behavior-Driven Development (BDD) and AI-Driven Test Generation. Techsur, 2025
