As organizations increasingly integrate AI into decision-making, automation, and customer engagement, the risk of its misuse also rises. That’s why it’s essential to recognize and tackle potential vulnerabilities early on.

In this article, N-iX security specialists break down the key cybersecurity risks surrounding AI and share practical steps companies can take to stay protected while making the most of what AI has to offer.

Key AI cybersecurity risks

Artificial Intelligence systems introduce new security challenges due to vulnerabilities in models, data, and infrastructure. Key risks include:

Model and data poisoning

When AI models are used in decision-making or predictive analytics, they can become targets for manipulation. Model tampering involves unauthorized changes to a model's logic or parameters, resulting in biased or incorrect outputs that harm operations. Similarly, data poisoning introduces malicious or corrupted data during training, distorting model behavior and causing unreliable outcomes. Both threats exploit weaknesses in the model development pipeline to undermine performance and disrupt business operations.

Adversarial attacks on inputs

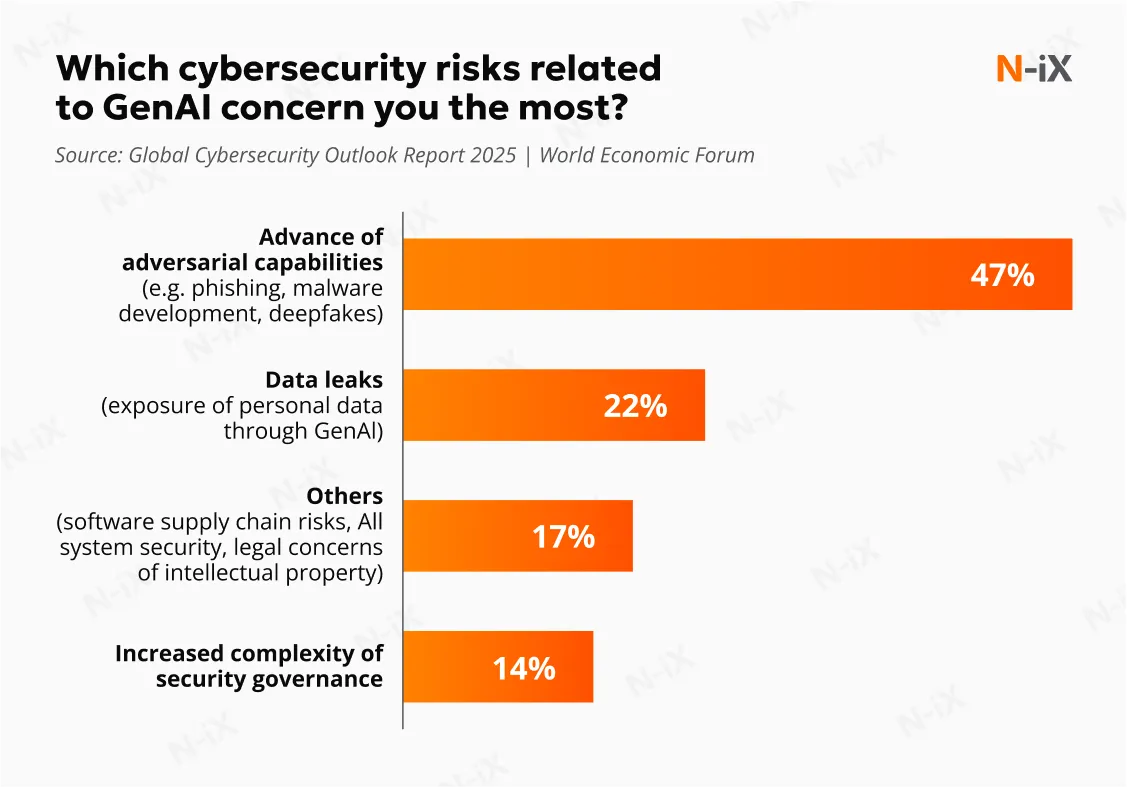

AI models used in operations like customer service or image recognition can be deceived by subtly manipulated inputs, such as altered images, text, or audio. These intentionally crafted adversarial inputs are designed to mislead AI systems, potentially resulting in misinterpretation, operational errors, or security breaches. According to the World Economic Forum's Global Cybersecurity Outlook 2025 report, adversarial attacks are the most common risk threatening organizations when using Generative AI.

Model drift and degradation

Over time, AI models deployed in dynamic business environments may experience "drift," where their performance degrades due to changes in data patterns or operational contexts. A recent study by MIT and Harvard found that 91% of machine learning models experience performance degradation over time. If not monitored, this can result in inaccurate predictions or decisions, potentially exposing the business to financial or reputational risks.

Unauthorized access to AI systems

AI systems often handle sensitive business data. Weak access controls or insufficient authentication mechanisms can allow unauthorized users to access models, training data, or endpoints, leading to data theft, intellectual property loss, or operational disruption.

Data privacy and compliance violations

AI systems often process large volumes of personal or confidential information, raising privacy concerns and regulatory compliance issues. According to the IBM Cost of a Data Breach Report, only 24% of companies feel confident about their ability to manage AI-related data privacy concerns and associated risks. Breaches in AI systems can expose personal information, leading to legal penalties under regulations like GDPR or CCPA. Moreover, poorly designed AI can violate privacy by retaining or misusing data, further complicating compliance efforts.

Bias and ethical risks in decision-making

AI systems can unintentionally amplify bias in training data, leading to unfair outcomes. For example, biased algorithms in hiring or lending can favor certain groups, raising ethical issues and legal risks. In addition, without clear explanations of how decisions are made, it becomes challenging to audit or address errors. This lack of clarity can damage reputations and undermine trust in AI technologies.

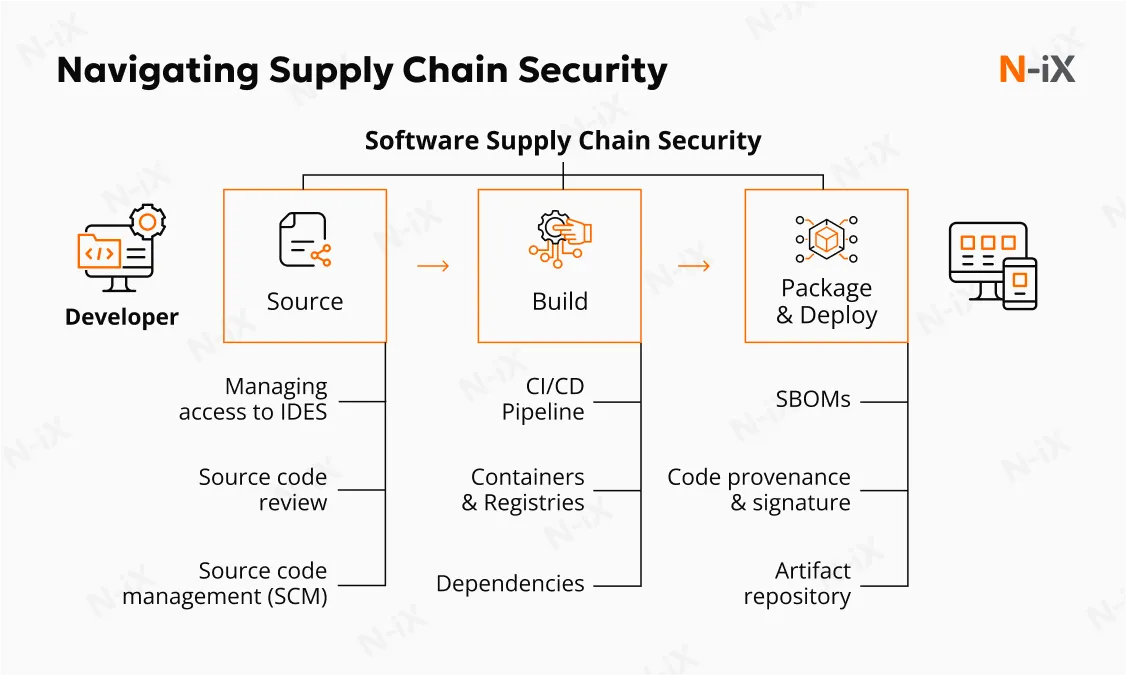

Supply chain vulnerabilities

McKinsey reports that AI workloads may increase cybersecurity exposure by 40–60% compared to traditional applications because of their heavy dependence on third-party components, such as datasets, pre-trained models, and software libraries. Vulnerabilities arise when these external elements are compromised, allowing attackers to introduce malicious code, corrupted data, or backdoors into AI systems. Supply chain risks also include a lack of visibility into suppliers' security practices, creating blind spots that are difficult to mitigate.

Over-reliance on AI

Organizations that overly depend on AI for decision-making risk operational disruptions if systems fail or are compromised. Over-reliance can also weaken human control, leaving gaps in critical thinking and manual verification. When AI systems are treated as infallible, errors or malicious outputs may go unnoticed, amplifying the damage of an attack.

How to mitigate AI cybersecurity risks: Top 13 best practices

Our security specialists shared their top strategies for protecting AI initiatives. These strategies address vulnerabilities across models, data, and infrastructure, providing a comprehensive approach to AI cybersecurity.

1. Strengthen AI model resilience

Protecting AI models from tampering starts with secure development and deployment practices. N-iX recommends applying robust encryption methods to safeguard both models and the data they rely on. Secure multi-party computation (MPC) allows sensitive data to be processed without being exposed, minimizing the risk of leaks. Hosting models in isolated or sandboxed environments can prevent unauthorized access and limit how far an attacker can spread in the event of a breach. Furthermore, regularly updating model architectures and retraining them with secure datasets helps address newly identified weaknesses, maintaining resilience against evolving AI security threats.

2. Conduct adversarial testing

Proactive testing is key to identifying vulnerabilities before attackers do. Adversarial testing involves simulating attacks by feeding the model intentionally manipulated inputs, such as slightly modified images, distorted audio, or malformed text. These simulations help engineers understand how models react under pressure and where their decision-making might be misled. The findings then inform defensive measures, such as adversarial training, input preprocessing, or gradient masking. By fortifying models during development, organizations can significantly lower the risk of real-world exploitation.
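
One widely used way to generate such manipulated inputs is the fast gradient sign method (FGSM). The sketch below applies it to a toy logistic regression classifier in plain Python. The weights, input features, and `epsilon` budget are hypothetical values chosen for illustration; a real test would target the production model, typically through a dedicated adversarial testing library.

```python
import math


def predict_proba(weights, bias, x):
    """Probability of the positive class under a logistic regression model."""
    z = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1.0 / (1.0 + math.exp(-z))


def sign(value):
    """Return -1, 0, or 1 depending on the sign of value."""
    return (value > 0) - (value < 0)


def fgsm_perturb(weights, bias, x, true_label, epsilon):
    """Move each feature by epsilon in the direction that increases the loss.

    For logistic regression with cross-entropy loss, the gradient of the loss
    with respect to feature i is (p - y) * w_i.
    """
    p = predict_proba(weights, bias, x)
    grad = [(p - true_label) * w for w in weights]
    return [xi + epsilon * sign(g) for xi, g in zip(x, grad)]


# Hypothetical toy model and input, for illustration only.
weights, bias = [2.0, -1.5, 0.5], 0.1
x, y = [0.4, 0.1, 0.3], 1

x_adv = fgsm_perturb(weights, bias, x, y, epsilon=0.3)
clean_p = predict_proba(weights, bias, x)      # above 0.5: classified positive
adv_p = predict_proba(weights, bias, x_adv)    # below 0.5: prediction flips

print(f"clean={clean_p:.2f} adversarial={adv_p:.2f}")
```

In this toy case, a bounded change of at most 0.3 per feature is enough to flip the prediction, which is the kind of weakness adversarial testing is meant to surface before deployment.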

3. Implement strong access controls

Implementing strong identity and access management (IAM) practices is fundamental to minimizing internal and external threats. N-iX recommends enforcing role-based access control (RBAC) to ensure users only have access to the components necessary for their roles. Multi-factor authentication (MFA) adds a further layer of protection for critical systems by requiring two or more independent verification factors to confirm a user's identity. Following the principle of least privilege prevents unauthorized access to sensitive data, training environments, or model endpoints. Real-time access logging and anomaly detection tools further enhance visibility, enabling swift responses to any suspicious activity.
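
As a minimal sketch of RBAC combined with least privilege, the example below maps roles to explicit permissions and denies anything not listed. The role names and permissions are hypothetical; in practice this mapping usually lives in an identity provider or policy engine rather than in application code.

```python
from enum import Enum


class Permission(Enum):
    QUERY_MODEL = "query_model"
    READ_TRAINING_DATA = "read_training_data"
    DEPLOY_MODEL = "deploy_model"


# Hypothetical role-to-permission mapping. Each role gets only what it needs.
ROLE_PERMISSIONS = {
    "analyst": {Permission.QUERY_MODEL},
    "data_scientist": {Permission.QUERY_MODEL, Permission.READ_TRAINING_DATA},
    "ml_engineer": {Permission.QUERY_MODEL, Permission.DEPLOY_MODEL},
}


def is_allowed(role, permission):
    """Deny by default: unknown roles and unlisted permissions are rejected."""
    return permission in ROLE_PERMISSIONS.get(role, set())


print(is_allowed("data_scientist", Permission.READ_TRAINING_DATA))  # True
print(is_allowed("analyst", Permission.DEPLOY_MODEL))               # False
```

The deny-by-default lookup means a misspelled or newly created role gets no access until someone grants it explicitly.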

4. Leverage AI-powered security solutions

To effectively address AI risks in cybersecurity, leverage AI-powered solutions designed to detect and mitigate these vulnerabilities. For instance, machine learning safeguards can help detect anomalies that may indicate adversarial attacks, model drift, or unauthorized use of generative AI tools. By continuously analyzing behavioral patterns, these solutions support early identification of tampering attempts, misuse of models, or data manipulation. Integrating such AI-based oversight helps organizations stay ahead of emerging threats.
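
The core idea behind behavioral anomaly detection can be shown with a simple statistical stand-in. The sketch below flags traffic to a model endpoint that deviates sharply from a baseline, using a z-score rather than a trained ML detector. The request counts and the threshold of 3 standard deviations are assumptions made for illustration.

```python
from statistics import mean, stdev


def find_anomalies(baseline, observed, threshold=3.0):
    """Return indices of observed values far from the baseline mean.

    A value is flagged when it lies more than `threshold` sample standard
    deviations away from the mean of the baseline window.
    """
    mu = mean(baseline)
    sigma = stdev(baseline)
    if sigma == 0:
        return [i for i, v in enumerate(observed) if v != mu]
    return [i for i, v in enumerate(observed) if abs(v - mu) / sigma > threshold]


# Hypothetical requests per minute to a model endpoint.
baseline_requests = [98, 102, 101, 99, 100, 103, 97, 100]
observed_requests = [101, 99, 480, 102]

print(find_anomalies(baseline_requests, observed_requests))  # [2]
```

A sudden spike like the third observation could point to scraping, model extraction attempts, or automated probing, and would warrant a closer look.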

5. Ensure diversity in training data

A diverse and inclusive training dataset strengthens both model fairness and security. It is crucial to audit datasets for demographic representation and to correct imbalances. Supplementing data with anonymized or synthetic samples can broaden coverage while protecting privacy. A diverse dataset not only mitigates ethical concerns but also makes models less susceptible to exploitation through predictable biases.
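
A dataset audit of this kind can start with a simple representation check. The sketch below reports groups whose share of the training records falls under a chosen minimum, including expected groups that are missing entirely. The `region` attribute, the sample records, and the 20% threshold are hypothetical.

```python
from collections import Counter


def underrepresented_groups(records, attribute, min_share, expected_groups=()):
    """Return {group: share} for groups below min_share of all records.

    Groups listed in expected_groups but absent from the data are reported
    with a share of 0.0.
    """
    counts = Counter(record[attribute] for record in records)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no records to audit")
    groups = set(counts) | set(expected_groups)
    return {
        group: counts[group] / total
        for group in groups
        if counts[group] / total < min_share
    }


# Hypothetical training records, for illustration only.
records = (
    [{"region": "EU"}] * 6
    + [{"region": "NA"}] * 3
    + [{"region": "APAC"}] * 1
)

report = underrepresented_groups(
    records, "region", min_share=0.2, expected_groups=["EU", "NA", "APAC", "LATAM"]
)
print(report)  # APAC at 0.1 and LATAM at 0.0 fall below the 20% threshold
```

The flagged groups are candidates for additional data collection or for supplementing with synthetic samples.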

6. Prioritize explainability and monitoring

Transparent AI systems are easier to trust and secure. Adopting explainable AI frameworks helps interpret model decisions and understand their rationale. Such practices help identify when models behave unexpectedly or are influenced by adversarial inputs, addressing potential AI cybersecurity risks. Continuous performance monitoring is equally important. Tracking metrics such as drift, response time anomalies, or false positives can reveal early signs of compromise. Emphasizing explainability and monitoring fosters accountability, traceability, and confidence in AI outputs.
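
One common way to quantify drift is the population stability index (PSI), which compares the distribution of a feature or model score at training time with what the model sees in production. The sketch below is a minimal plain-Python version. The bin edges and sample scores are hypothetical, and the 0.1 and 0.25 cut-offs mentioned in the comments are widely cited rules of thumb rather than fixed standards.

```python
import math


def population_stability_index(expected, actual, bin_edges, floor=1e-6):
    """Compute PSI between two samples over shared bins.

    bin_edges must be sorted. Values below the first edge fall in bin 0,
    values at or above the last edge fall in the final bin. Empty bins are
    floored to avoid division by zero and log(0).
    """
    def shares(values):
        counts = [0] * (len(bin_edges) + 1)
        for value in values:
            index = sum(value >= edge for edge in bin_edges)
            counts[index] += 1
        return [max(count / len(values), floor) for count in counts]

    expected_shares = shares(expected)
    actual_shares = shares(actual)
    return sum(
        (a - e) * math.log(a / e)
        for e, a in zip(expected_shares, actual_shares)
    )


# Hypothetical model scores at training time and in production.
training_scores = [0.1, 0.2, 0.3, 0.4, 0.6, 0.7, 0.8, 0.9]
live_scores = [0.1, 0.3, 0.6, 0.7, 0.65, 0.8, 0.9, 0.85]
edges = [0.25, 0.5, 0.75]

psi = population_stability_index(training_scores, live_scores, edges)
# Rule of thumb: below 0.1 is stable, above 0.25 suggests significant drift.
print(f"PSI = {psi:.3f}")
```

Tracking a metric like this on a schedule turns "monitor for drift" into an alert that can trigger investigation or retraining.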

7. Build a solid data governance framework

Strong data governance is crucial to secure AI systems. N-iX recommends implementing policies that define how data is collected, stored, processed, and deleted. Encrypting data both at rest and in transit safeguards sensitive information at every stage of its lifecycle. Data minimization principles reduce unnecessary exposure, while anonymization techniques safeguard personal information. Regular audits of data handling practices ensure compliance with laws like GDPR or CCPA and help identify areas where AI and cybersecurity risks require strengthened controls.
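
Data minimization and pseudonymization can be applied before records ever reach a training pipeline. The sketch below keeps only an allow-listed set of fields and replaces the user identifier with a keyed hash. The field names and the key are hypothetical, and keyed hashing is pseudonymization rather than full anonymization, so the output may still count as personal data under GDPR.

```python
import hashlib
import hmac

# Hypothetical allow-list: only the fields the model actually needs.
ALLOWED_FIELDS = {"user_id", "country", "purchase_total"}


def pseudonymize(value, secret_key):
    """Replace an identifier with a keyed SHA-256 hash (HMAC)."""
    return hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def prepare_record(record, secret_key):
    """Drop fields outside the allow-list and pseudonymize the user ID."""
    minimized = {k: v for k, v in record.items() if k in ALLOWED_FIELDS}
    if "user_id" in minimized:
        minimized["user_id"] = pseudonymize(str(minimized["user_id"]), secret_key)
    return minimized


# Illustrative values only. A real key belongs in a secrets manager.
key = b"example-key-do-not-use"
raw = {"user_id": 42, "email": "jane@example.com", "country": "DE", "purchase_total": 19.99}

clean = prepare_record(raw, key)
print(sorted(clean))  # ['country', 'purchase_total', 'user_id']
```

Using a keyed hash instead of a plain hash means an attacker who obtains the dataset cannot simply hash candidate identifiers to re-identify users without also obtaining the key.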

8. Implement patch management

AI systems depend on a complex ecosystem of libraries, drivers, and hardware that require consistent maintenance. Automated patch management allows engineers to address vulnerabilities promptly and systematically. Security updates should span all layers of the AI pipeline, including operating systems, GPU drivers, machine learning frameworks, and third-party APIs. Pairing patch management with continuous vulnerability scanning minimizes the attack window and prevents the exploitation of known flaws.

9. Develop a robust incident response plan

Responding effectively to security threats, including emerging AI cybersecurity risks, requires a well-crafted incident response strategy. N-iX suggests defining clear procedures for isolating compromised models, notifying stakeholders, restoring from backups, and initiating forensic investigations. Specialized playbooks for scenarios like data poisoning, model theft, or deepfake abuse ensure that teams can act quickly and confidently. Regular tabletop exercises and breach simulations sharpen readiness, reduce downtime, and protect organizational trust.

10. Strengthen supply chain security

Modern AI development often involves external components, such as third-party datasets, pre-trained models, and cloud APIs. All vendors and external tools should be thoroughly reviewed for security and compliance to mitigate the risks of AI in cybersecurity. Contracts should include clauses for regular audits, security patches, and data handling standards. Monitoring third-party access and integrating them into your risk management framework helps detect anomalies and prevent supply chain-based attacks.
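
A basic technical control for third-party models and datasets is to pin the expected checksum of each artifact and refuse to load anything that does not match. The sketch below shows this with SHA-256. The function names are illustrative, and the expected hash is assumed to come from a trusted source such as the vendor's signed release notes.

```python
import hashlib
import hmac


def sha256_of_file(path, chunk_size=1 << 20):
    """Compute the SHA-256 hex digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_artifact(path, expected_sha256):
    """Return True only if the file matches the pinned checksum."""
    actual = sha256_of_file(path)
    return hmac.compare_digest(actual, expected_sha256.lower())
```

A loading routine would call `verify_artifact` before deserializing a downloaded model and abort on a mismatch. A checksum only detects changes after the hash was published, so it complements vendor review rather than replacing it.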

11. Conduct regular security auditing

Perform comprehensive security assessments of AI systems to identify vulnerabilities across code, data, and infrastructure. You can partner with a cybersecurity consultant or use automated tools to assess compliance with industry standards like ISO/IEC 42001. At N-iX, we conduct AI security audits covering API endpoints, hardware configurations, and user access logs. This helps our experts provide actionable insights to fortify defenses and maintain regulatory adherence.

12. Invest in user awareness training

Educate employees about the cybersecurity risks of AI, such as deepfake scams or shadow AI pitfalls. Regular training sessions will teach staff how to recognize suspicious content, verify communications, and report unauthorized tools. Simulated phishing or social engineering exercises can teach users to act as the first line of defense against AI-enabled attacks.

13. Partner with an experienced security consultant

Navigating the rapidly evolving landscape of AI cybersecurity risks requires specialized knowledge and resources that many organizations may lack in-house. Partnering with an experienced consultant can help mitigate emerging threats and ensure compliance with complex regulations. For example, at N-iX, we offer AI development and cybersecurity services, assisting our clients in delivering customized AI solutions and end-to-end support for their security needs. N-iX provides its expertise in tackling adversarial attacks, model vulnerabilities, and compliance issues, reducing the risk of operational disruptions and costly failures.

Conclusion

From AI-powered cyberattacks to ethical concerns and data privacy risks, AI cybersecurity challenges demand a proactive, multi-layered defense. Through risk awareness and the adoption of comprehensive safeguards, organizations can both secure their AI systems and leverage their transformative capabilities.

To do this effectively, organizations need not only tools but also the proper guidance, which an experienced cybersecurity consultant can provide. N-iX delivers comprehensive AI, data governance, and security services designed to help organizations run their AI initiatives securely. We support compliance with GDPR, CCPA, SOC, and other regulatory frameworks, and offer our expertise for ethical and responsible AI development.

With over 23 years of experience, N-iX prioritizes security, trust, and regulatory compliance in every security strategy. Reach out to N-iX today for a comprehensive risk assessment and take the first step toward securing your AI assets with confidence.
